In this blog series, we shed some light on our marketing approach at The Next Web through Web analytics, Search Engine Optimization (SEO), Conversion Rate Optimization (CRO), social media, and more. This particular piece focuses on the data we provide to search engines and crawlers through Schema.org so that they can accurately index the content we publish.

Have you ever wondered how Google or other search engines are able to understand certain metadata on a Web page? Search engines are generally great at parsing the relevance and content of a page, but sometimes it’s hard for them to interpret the information. So how do you deal with this when you want them to better grasp what your website is about?

Enter Schema.org

Schema.org explains on its site that it is:

a set of extensible schemas that enables webmasters to embed structured data on their Web pages for use by search engines and other applications.

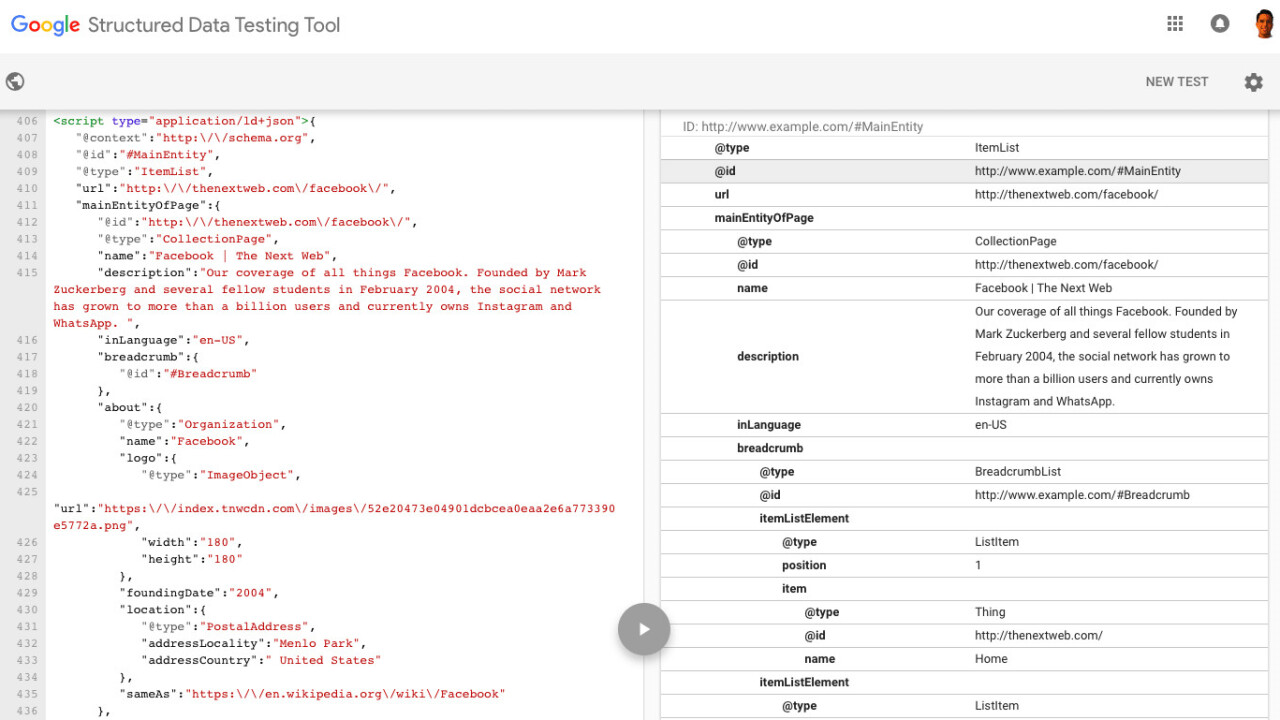

It basically means that you add extra HTML attributes (microdata) to the tags around your content, which explain what kind of data can be found in between them, so search engines can provide more relevant results for their users. Here’s an example: <h1 itemprop="title">Title</h1> instead of <h1>Title</h1>. Makes sense, right? This example uses microdata, but later we’ll explain why we chose to go with the alternative, recommended option: JSON-LD.

How do you find out whether a web page is using structured data? Google provides the Structured Data Testing Tool (SDTT) for exactly this.
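For a quick local check alongside the SDTT, a small script can look for both kinds of markup in a page. This is only a minimal sketch using Python's standard library, not how Google's tool works; the class name `StructuredDataFinder`, the helper `find_structured_data`, and the sample HTML are made up for illustration.

```python
import json
from html.parser import HTMLParser


class StructuredDataFinder(HTMLParser):
    """Collects microdata itemprop values and JSON-LD blocks from HTML."""

    def __init__(self):
        super().__init__()
        self.itemprops = []      # values of itemprop attributes (microdata)
        self.jsonld_blocks = []  # parsed contents of ld+json script tags
        self._in_jsonld = False
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if "itemprop" in attrs:
            self.itemprops.append(attrs["itemprop"])
        if tag == "script" and attrs.get("type") == "application/ld+json":
            self._in_jsonld = True
            self._buffer = []

    def handle_data(self, data):
        # Only keep text that sits inside a JSON-LD script tag.
        if self._in_jsonld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_jsonld:
            self._in_jsonld = False
            # json.loads raises ValueError if the block is not valid JSON.
            self.jsonld_blocks.append(json.loads("".join(self._buffer)))


def find_structured_data(html):
    """Return (itemprop values, parsed JSON-LD blocks) found in the HTML."""
    finder = StructuredDataFinder()
    finder.feed(html)
    finder.close()
    return finder.itemprops, finder.jsonld_blocks


# Hypothetical page fragment containing both markup styles.
sample = """
<h1 itemprop="title">Title</h1>
<script type="application/ld+json">{"@type": "NewsArticle", "headline": "Title"}</script>
"""
itemprops, blocks = find_structured_data(sample)
print(itemprops)
print([block.get("@type") for block in blocks])
```

A script like this only tells you that markup is present; whether the markup is valid for a given search feature is still a job for the SDTT.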

How did we get started?

When Schema.org was introduced a couple of years ago, we started to implement some of its basic principles to make sure that our data was easier to parse. When we noticed that Google and other search engines were weighing structured data, we realized it was time to raise the bar and implement more extensive markup for better results. Looking at one of the biggest structured data implementations in publishing, The New York Times, we made good progress in supporting the semantic Web even more. Our take on this is quite simple: our content is openly available anyway (no paywall), so why not make our lives easier and make sure search engines can understand the content as fully as possible?

What did we implement?

Currently there are a couple hundred schemas available to mark up your content with. Since we publish various forms of content, we need to support several of them. This is an overview of the markup we applied:

- Homepage: WebSite, Organization (example SDTT).

- Categories and Sections: ItemList, NewsArticle, CollectionPage, Thing, BreadcrumbList (example SDTT).

- Articles: NewsArticle, WebPage, BreadcrumbList (example SDTT / jsfiddle).

- Single Event listing: ItemList, NewsArticle, CollectionPage, Event, BreadcrumbList (example SDTT).

- Company listing: ItemList, NewsArticle, CollectionPage, Organization, BreadcrumbList (example SDTT).
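BreadcrumbList shows up on almost every page type above, so it makes a compact illustration of what such markup looks like. The following is a minimal sketch rather than our production code: the function name `breadcrumb_list` and the example.com URLs are placeholders, while the property names (`itemListElement`, `position`, `name`, `item`) come from Schema.org.

```python
import json


def breadcrumb_list(crumbs):
    """Build a schema.org BreadcrumbList from (name, url) pairs, ordered root first."""
    return {
        "@context": "https://schema.org",
        "@type": "BreadcrumbList",
        "itemListElement": [
            {"@type": "ListItem", "position": position, "name": name, "item": url}
            for position, (name, url) in enumerate(crumbs, start=1)
        ],
    }


# Hypothetical trail for an article inside a section.
trail = breadcrumb_list([
    ("Home", "https://example.com/"),
    ("Section", "https://example.com/section/"),
    ("Article title", "https://example.com/section/article-title/"),
])
print(json.dumps(trail, indent=2))
```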

If you’re aware of the new AMP Project, then you also know that AMP pages are duplicates of the regular pages. We marked up the AMP versions of our content identically to make sure they fully support Schema.org too.

JSON-LD vs. Microdata

When we started implementing more detailed markup, we chose to go with Microdata because the basics are easier to work with: all you need to do is add itemprop attributes to your existing code. But scaling up and moving to more advanced set-ups, such as adding information that is available in the back-end but doesn’t necessarily need to be in the front-end, quickly overcomplicates things. That’s why we refactored the set-up to JSON-LD. From a development perspective, JSON-LD is more scalable: it stands alone in the code base instead of being embedded into the content, as with Microdata. In addition, Google recommends JSON-LD as its standard format because, as stated in its own documentation, it’s usually easier to parse than other formats.
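To illustrate the "stands alone" point, here is a minimal sketch of generating an article's JSON-LD from back-end data and rendering it as a single script tag. It is not our actual implementation: the function names `news_article_jsonld` and `to_script_tag`, the chosen subset of NewsArticle properties, and all example values are assumptions for illustration.

```python
import json


def news_article_jsonld(headline, url, published, author_name, publisher_name):
    """Build a schema.org NewsArticle as a plain dict from caller-supplied values."""
    return {
        "@context": "https://schema.org",
        "@type": "NewsArticle",
        "headline": headline,
        "mainEntityOfPage": {"@type": "WebPage", "@id": url},
        "datePublished": published,
        "author": {"@type": "Person", "name": author_name},
        "publisher": {"@type": "Organization", "name": publisher_name},
    }


def to_script_tag(data):
    """Serialize a dict into a JSON-LD script tag for the page template."""
    payload = json.dumps(data, indent=2)
    # Escape "</" so a value such as "</script>" cannot close the tag early;
    # "\/" is a valid JSON escape, so parsers still read the original value.
    payload = payload.replace("</", "<\\/")
    return '<script type="application/ld+json">\n' + payload + "\n</script>"


# Hypothetical article data, as it might come from a CMS.
article = news_article_jsonld(
    headline="Example headline",
    url="https://example.com/2016/06/01/example-headline/",
    published="2016-06-01T09:00:00+02:00",
    author_name="Jane Doe",
    publisher_name="Example Publisher",
)
print(to_script_tag(article))
```

Because the markup is built from data rather than woven into the template, fields that never appear in the visible page can be added without touching the front-end HTML.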

Why did we do this?

Help Google understand the content even better

As said before, we can’t assume that Google understands all of our content. The clearer we are in declaring various types of content, the smaller the chance of search engines misinterpreting our content. News articles are at the core of our blog, so it’s better to have everything set up according to Google’s guidelines.

Article carousels

Google is still adding a lot of new features that are supported by Schema.org markup. For example, earlier in May it added rich cards in search results for things like recipes and movies. I don’t think we’re aiming for recipe listings anytime soon, but we’d like to be at the forefront whenever Google makes these kinds of changes, which can have an effect on our content. We think Google will do this again in the near future, but for newsworthy articles. For this reason we chose the current “ItemList” setup.

- Host-specific lists — displays recipe cards from a single site within a specific category. This markup uses a combination of ItemList markup and individual data type markup to enable a carousel of lists to appear in Search.

- Events — enables a carousel of events from authoritative event websites.
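As a rough illustration of the ItemList part of such a setup, the sketch below builds the list markup for a category page that links to individual articles. The helper name `item_list` and the URLs are hypothetical; each linked page would carry its own data type markup (NewsArticle in our case), which is not shown here.

```python
import json


def item_list(urls):
    """Build a schema.org ItemList that points at each listed page, in order."""
    return {
        "@context": "https://schema.org",
        "@type": "ItemList",
        "itemListElement": [
            {"@type": "ListItem", "position": position, "url": url}
            for position, url in enumerate(urls, start=1)
        ],
    }


# Hypothetical category page listing two articles.
category = item_list([
    "https://example.com/section/first-article/",
    "https://example.com/section/second-article/",
])
print(json.dumps(category, indent=2))
```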

Sitelinks searchbox

Do you sometimes see a search box inside a Google search result and wonder where it’s coming from? This is also structured data (“WebSite”). The search box appears when you search for brand-related queries. Searches performed in this box take you to another set of results from that specific website.
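The markup behind this is a WebSite entity with a SearchAction attached. The sketch below follows the shape Google documented for this feature (`potentialAction`, `target`, `query-input`); the function name `website_with_search` and the example.com search URL are placeholders, not our real endpoints.

```python
import json


def website_with_search(site_url, search_url_prefix):
    """Build WebSite markup whose SearchAction points at the site's own search."""
    return {
        "@context": "https://schema.org",
        "@type": "WebSite",
        "url": site_url,
        "potentialAction": {
            "@type": "SearchAction",
            # {search_term_string} is a literal placeholder the search engine fills in.
            "target": search_url_prefix + "{search_term_string}",
            "query-input": "required name=search_term_string",
        },
    }


# Hypothetical site and search URL.
markup = website_with_search("https://example.com/", "https://example.com/search?q=")
print(json.dumps(markup, indent=2))
```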

What does the future look like?

As Schema.org keeps extending its efforts to make content easier to ‘understand’, we’ll keep a close eye on new releases, follow the semantic search community, and regularly check in for updates with our external SEO consultants, who helped us a lot with the setup (Thanks Jarno!). This also means that for every new feature we release, we’ll make sure to check what capabilities Schema.org has to offer for it.

The additional structured data can also help us gain new insights in data analysis: the richer the markup, the more insights can be gained from it.

What do you think could be gained from this data, and how do you think this is going to impact the way we use search engines and create content on the web? Leave a comment!

If you missed previous posts in this series, don’t forget to check them out: #1: Heat maps, #2: Deep dive on A/B testing, #3: Learnings from our A/B tests, #4: From Marketing Manager to Recruiter, #5: Running ScreamingFrog in the Cloud, #6: What tools do we use?, #7: We track everything!, #8: Google Tag Manager, #9: A/B Testing with Google Tag Manager, #10: Google Search Console, #11: 500 Million Search Results and #12: How are you engaging with this page?
