As a web developer, you may have wanted to generate a PDF file of a web page to share with your clients, use it in presentations, or add it as a new feature in your web app. No matter your reason, Puppeteer, Google’s Node API for headless Chrome and Chromium, makes the task quite simple for you.

In this tutorial, we will see how to convert web pages into PDF with Puppeteer and Node.js. Let’s start the work with a quick introduction to what Puppeteer is.

What is Puppeteer, and why is it awesome?

In Google’s own words, Puppeteer is, “A Node library which provides a high-level API to control headless Chrome or Chromium over the DevTools Protocol.”

[Read: ]

What is a headless browser?

If you are unfamiliar with the term headless browsers, it’s simply a browser without a GUI. In that sense, a headless browser is simply just another browser that understands how to render HTML web pages and process JavaScript. Due to the lack of a GUI, the interactions with a headless browser take place over a command line.

Even though Puppeteer is mainly a headless browser, you can configure and use it as non-headless Chrome or Chromium.

What can you do with Puppeteer?

Puppeteer’s powerful browser-capabilities make it a perfect candidate for web app testing and web scraping.

To name a few use cases where Puppeteer provides the perfect functionalities for web developers,

- Generate PDFs and screenshots of web pages

- Automate form submission

- Scrape web pages

- Perform automated UI tests while keeping the test environment up-to-date.

- Generating pre-rendered content for Single Page Applications (SPAs)

Set up the project environment

You can use Puppeteer on the backend and frontend to generate PDFs. In this tutorial, we are using a Node backend for the task.

Initialize NPM and set up the usual Express server to get started with the tutorial.

Make sure to install the Puppeteer NPM package with the following command before you start.

Convert web pages to PDF

Now we get to the exciting part of the tutorial. With Puppeteer, we only need a few lines of code to convert web pages into PDF.

First, create a browser instance using Puppeteer’s launch function.

Then, we create a new page instance and visit the given page URL using Puppeteer.

We have set the waitUntil option to networkidle0. When we use networkidle0 option, Puppeteer waits until there are no new network connections within the last 500 ms. It is a way to determine whether the site has finished loading. It’s not exact, and Puppeteer offers other options, but it is one of the most reliable for most cases.

Finally, we create the PDF from the crawled page content and save it to our device.

The print to PDF function is quite complicated and allows for a lot of customization, which is fantastic. Here are some of the options we used:

- printBackground: When this option is set to true, Puppeteer prints any background colors or images you have used on the web page to the PDF.

- path: Path specifies where to save the generated PDF file. You can also store it into a memory stream to avoid writing to disk.

- format: You can set the PDF format to one of the given options: Letter, A4, A3, A2, etc.

- margin: You can specify a margin for the generated PDF with this option.

When the PDF creation is over, close the browser connection with browser.close().

Build an API to generate and respond PDFs from URLs

With the knowledge we gather so far, we can now create a new endpoint that will receive a URL as a query string, and then it will stream back to the client the generated PDF.

Here is the code:

If you start the server and visit the /pdf route, with a target query param containing the URL we want to convert. The server will serve the generated PDF directly without ever storing it on disk.

URL example: http://localhost:3000/pdf?target=https://google.com

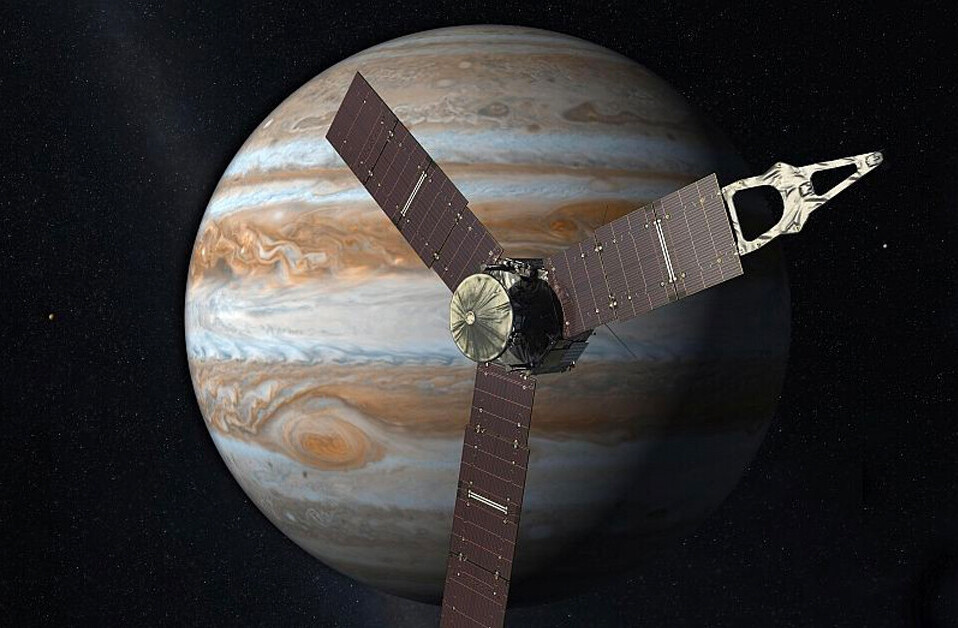

Which will generate the following PDF as it looks on the image:

That’s it! You have completed the conversion of a web page to PDF. Wasn’t that easy?

As mentioned, Puppeteer offers many customization options, so make sure you play around with the opportunities to get different results.

Next, we can change the viewport size to capture websites under different resolutions.

Capture websites with different viewports

In the previously created PDF, we didn’t specify the viewport size for the web page Puppeteer is visiting, instead used the default viewport size, 800×600px.

However, we can precisely set the page’s viewport size before crawling the page.

Conclusion

In today’s tutorial, we used Puppeteer, a Node API for headless Chrome, to generate a PDF of a given web page. Since you are now familiar with the basics of Puppeteer, you can use this knowledge in the future to create PDFs or even for other purposes like web scraping and UI testing.

This article was originally published on Live Code Stream by Juan Cruz Martinez (twitter: @bajcmartinez), founder and publisher of Live Code Stream, entrepreneur, developer, author, speaker, and doer of things.

Live Code Stream is also available as a free weekly newsletter. Sign up for updates on everything related to programming, AI, and computer science in general.

Get the TNW newsletter

Get the most important tech news in your inbox each week.