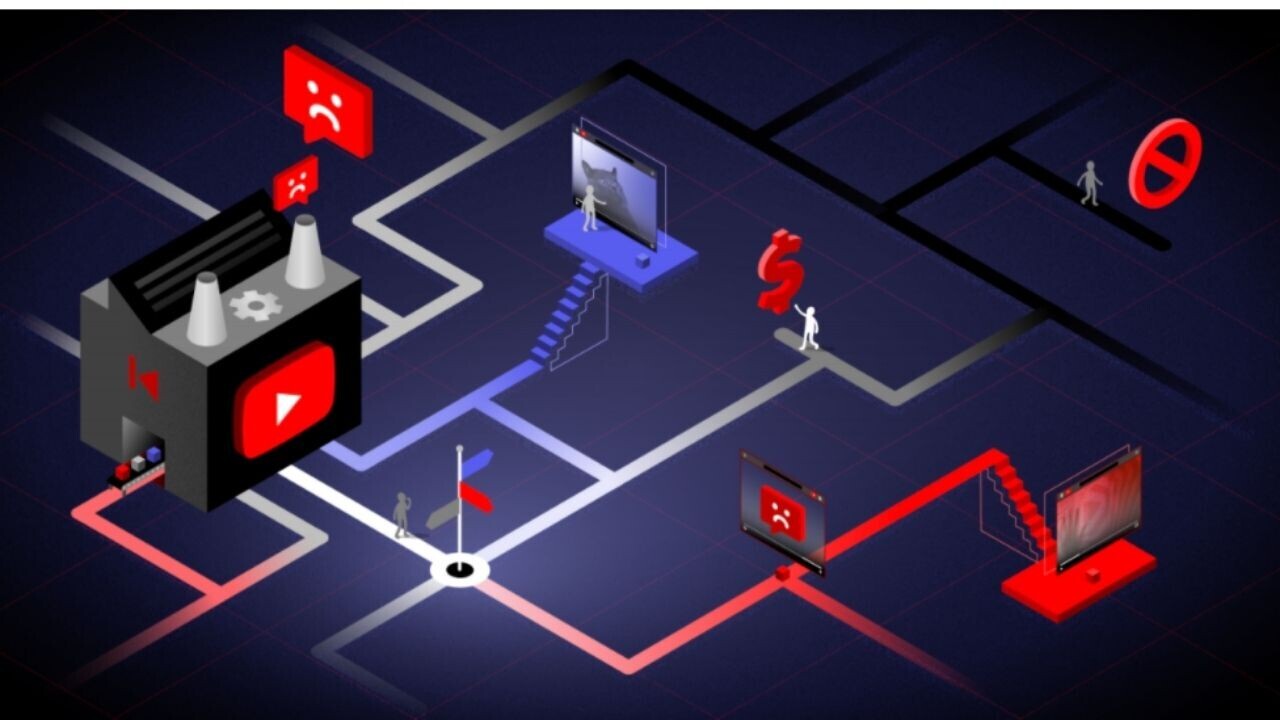

YouTube’s algorithm recommends “regrettable” videos that violate the platform’s own content policies, according to a new investigation by the nonprofit Mozilla Foundation.

The crowd-sourced study found that 71% of the videos that volunteers deemed regrettable were actively recommended by YouTube’s own algorithm.

Non-English speakers were far more likely to find videos that they considered disturbing: the rate of YouTube regrets was 60% higher in countries that don’t have English as a primary language.

Up front: The report is based on data collected through Mozilla’s RegretsReporter, a browser extension that allows users to provide information on harmful videos and the recommendations that led them there.

More than 30,000 YouTube users used the tool to provide data about their experiences. One example involved a volunteer who watched content about the US military. They were then recommended a misogynistic video titled “Man humilitates [sic] feminist in viral video.”

Mozilla says volunteers flagged a total of 3,362 regrettable videos, coming from 91 countries, between July 2020 and May 2021. The most frequent categories of regrettable videos were misinformation, violent or graphic content, hate speech, and spam/scams.

Mozilla also found that recommended videos were 40% more likely to be reported by volunteers than videos for which they searched.

Almost 200 videos recommended by the algorithm (around 9% of the total) have now been removed from YouTube. But by that point, they had already racked up a collective 160 million views.

Brandi Geurkink, Mozilla’s Senior Manager of Advocacy, said YouTube’s algorithm is designed in a way that harms and misinforms people:

“Our research confirms that YouTube not only hosts, but actively recommends videos that violate its very own policies. We also now know that people in non-English speaking countries are the most likely to bear the brunt of YouTube’s out-of-control recommendation algorithm. Mozilla hopes that these findings — which are just the tip of the iceberg — will convince the public and lawmakers of the urgent need for better transparency into YouTube’s AI.”

Quick take: YouTube’s algorithm drives 70% of watch time on the platform — an estimated 700 million hours every single day. But pushing content that keeps people watching for as long as possible comes with risks.

The system has long been accused of recommending harmful content. Indeed, Mozilla’s study suggests these videos perform well on the platform: reported videos got 70% more views per day than other content watched by the volunteers.

However, that doesn’t mean the recommendations are relevant: in 43.3% of cases where Mozilla has data about the trail of videos a volunteer watched before a regret, the recommendation was completely unrelated to those previous videos.

YouTube has tried to reduce the spread of harmful content by making numerous tweaks, but Mozilla’s study suggests there’s still a lot of work to do.

Update (7:15PM CET, July 7, 2021): A YouTube spokesperson told TNW in a statement:

“The goal of our recommendation system is to connect viewers with content they love and on any given day, more than 200 million videos are recommended on the homepage alone. Over 80 billion pieces of information is used to help inform our systems, including survey responses from viewers on what they want to watch. We constantly work to improve the experience on YouTube and over the past year alone, we’ve launched over 30 different changes to reduce recommendations of harmful content. Thanks to this change, consumption of borderline content that comes from our recommendations is now significantly below 1%.”

The company also said that it welcomes further research on this front and is exploring options to bring in external researchers to study its systems. However, YouTube questioned how “regrettable” is defined in the research, and added that it can’t review the validity of the study data, as Mozilla hasn’t shared the full data set.
