We were warned, time and time again, by one expert after another. AI doesn’t play fair, it can’t make moral decisions, and it relies entirely on humans for ethics. When those humans are (allegedly) Alexander Nix, Steve Bannon, and Dr. Aleksandr Kogan, we’re all in a lot of trouble.

Cambridge Analytica (allegedly) took bad science, stolen data, and machine learning and used them to create a toxic stew designed specifically to radicalize the American people. The company’s success is no more admirable than a serial killer’s body count.

And no matter Facebook’s level of involvement, it’s now tainted. Even if it turns out no laws were broken by either company, using weaponized AI against humans and systematically manipulating the populations of several countries is more than just bad business.

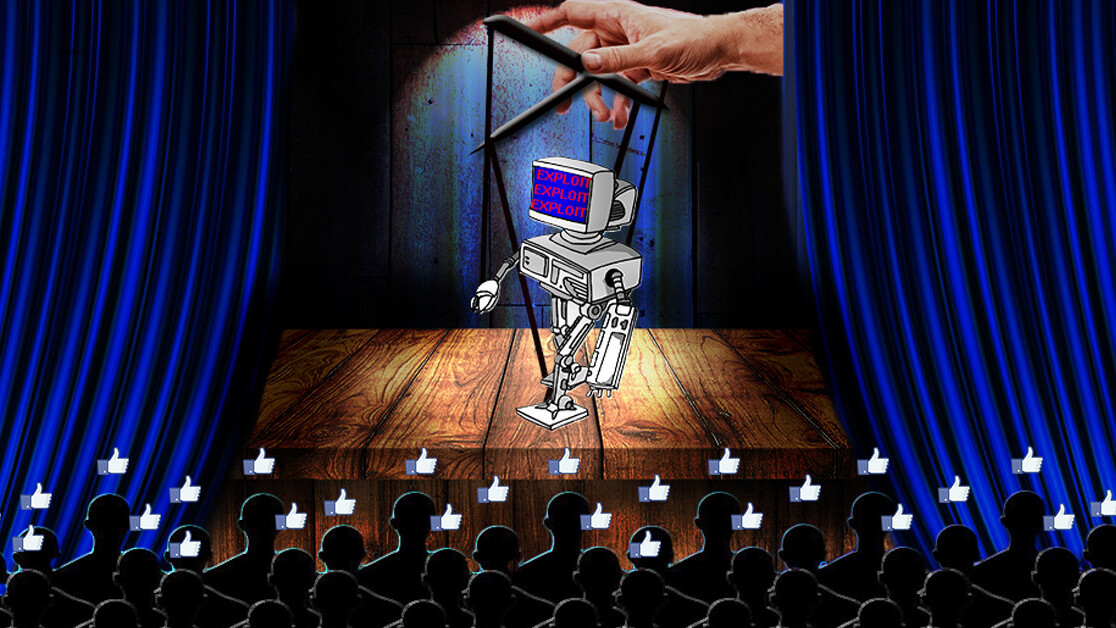

It should shock nobody to learn that at the very rotten core of the Cambridge Analytica / Facebook scandal lies the unethical use of artificial intelligence. If you were expecting an army of killer robots, take solace in the knowledge that our new overlords are far more villainous: they’re social engineers bent on changing the DNA of our society through the perversion of science for the purpose of propaganda.

In 2013 a Stanford researcher named Michal Kosinski released a popular white paper that ‘demonstrated’ AI could predict a human’s personality traits better than people could, using nothing more than Facebook data.

It’s crucial to understand that “better than humans can” is by no means an empirical measure of an AI’s ability. You can create a paper airplane that flies better than humans can, but that doesn’t make it good.

Given enough information, it’s quite possible an AI can “predict” anything better than a human. If you design a neural network that gets rewarded when it confirms bias, it’s going to confirm bias.

We recently criticized another paper by Kosinski, in which he claims to have used “the lamest algorithm you can use” to predict whether people are gay or not. It does no such thing, and here’s why.

Kosinski isn’t a bad scientist by any means, and his Facebook paper presents important research. Also, reportedly, he turned down an offer to work with Dr. Kogan: he obviously has a sense of decency.

Dr. Kogan, however, almost certainly used Kosinski’s publicly available white paper to at least partially come up with the data-harvesting and processing techniques that Cambridge Analytica used to assault democracy during the Brexit vote, the 2016 Republican primary campaign of Ted Cruz, and the 2016 US presidential election. It’s worth mentioning that Dr. Kogan denies this.

And Facebook, as we’ve pointed out, either stood by and let it all happen out of ignorance, or encouraged it. Whose job was it to protect our democracies from this threat?

It is clear the US is under assault by foreign entities. The mind boggles at the Trump administration’s complete and utter failure to address the weaponization of AI against the American people by organizations tied to Russia’s government.

But worse, the American people have been attacked by domestic enemies. Cambridge Analytica has offices in New York, and Facebook’s main office is in Menlo Park, California.

From now on, when the sensationalists rant and rave about “killer robots,” just point them in the direction of Cambridge Analytica and explain what the real AI threat is: people without ethics using literal propaganda machines for planet-scale social engineering efforts.
