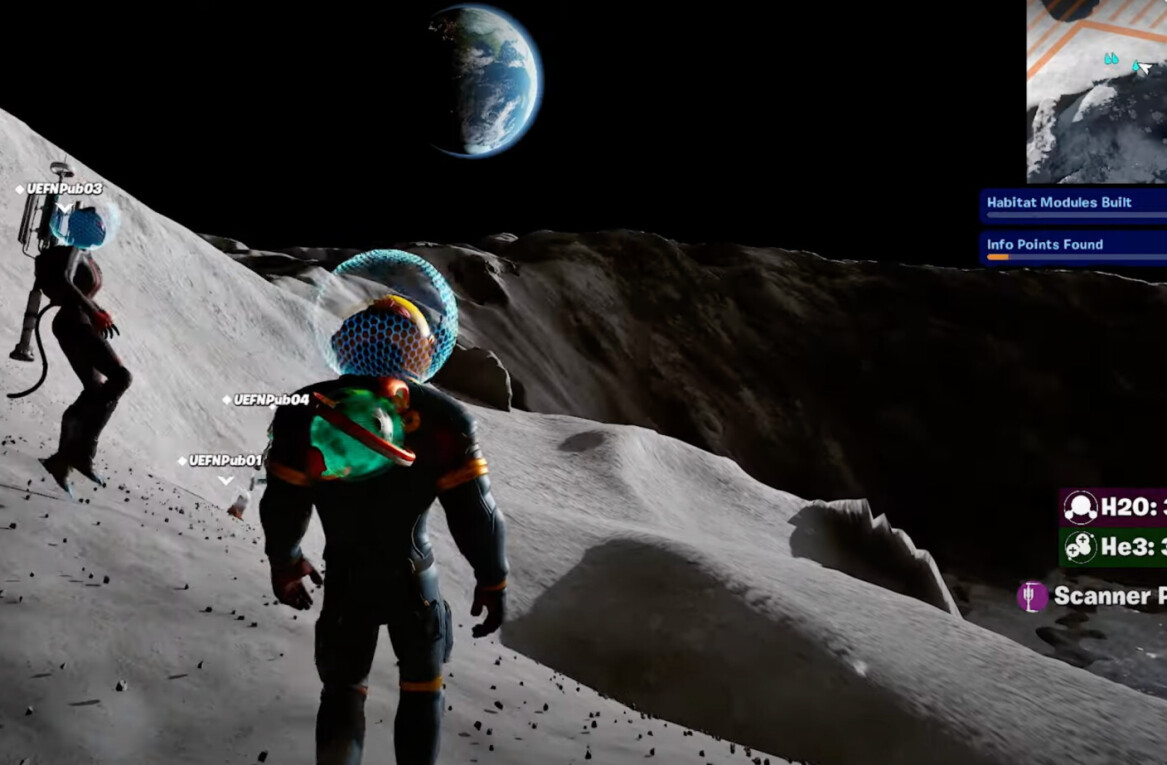

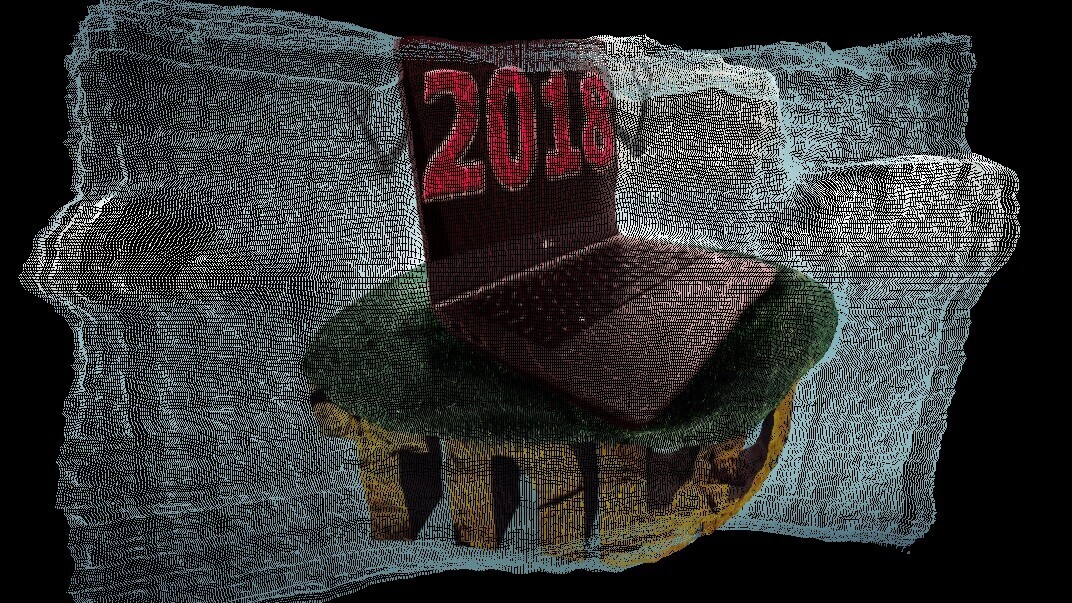

In January we wrote about an AI called Volume that ripped characters out of videos. The developers of that AI, Or Fleisher and Shirin Anlen, recently updated their website with an interactive AI experiment that converts any flat image to 3D.

We’ve seen similar AI before, but this one does more than facial reconstruction. We asked Fleisher, whom you may also remember as the creator of an AI that gives any webcam Kinect-like capabilities, whether training an AI to convert any type of image presents a different challenge than training one to convert something specific:

It is different in some ways and similar in others. While the mechanics of constructing and training such deep neural networks have similarities, our mission to create a model that understands the human body and environments requires a lot of data… In the background, we are training a few models for different tasks and developing a classification method for identifying the dominant elements in the image. Once we know what to expect, we are able to pick a model that has a more refined understanding of how this should look in 3D. This is quite different from other approaches that accumulate a lot of knowledge into one single machine learning model. It is still a work in progress, but we hope to keep training the networks to acquire the knowledge required to reconstruct 3D human figures and environments from 2D images and videos.
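Fleisher describes a two-stage design: a classifier first identifies the dominant element in an image, and that label then selects a specialist model. The sketch below illustrates only that routing idea, under stated assumptions: the `Router` class, the "human" and "environment" labels, and the toy models that return constant depth values are all hypothetical stand-ins, not Volume's actual code or API.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Image = List[List[float]]     # hypothetical: grayscale pixels in [0, 1]
DepthMap = List[List[float]]  # hypothetical: one depth value per pixel


@dataclass
class Router:
    """Pick a specialist model based on a classifier's label (illustrative only)."""
    classify: Callable[[Image], str]
    specialists: Dict[str, Callable[[Image], DepthMap]]
    fallback: Callable[[Image], DepthMap]

    def reconstruct(self, image: Image) -> DepthMap:
        label = self.classify(image)
        # Use the specialist for this label, or a general model if none exists.
        model = self.specialists.get(label, self.fallback)
        return model(image)


def toy_classifier(image: Image) -> str:
    # Hypothetical heuristic standing in for a trained classifier:
    # bright images are labeled "human", dark ones "environment".
    pixels = [p for row in image for p in row]
    return "human" if sum(pixels) / len(pixels) > 0.5 else "environment"


# Hypothetical specialist models: each returns a constant depth map
# shaped like the input, in place of a real neural network.
def human_model(image: Image) -> DepthMap:
    return [[1.0 for _ in row] for row in image]


def environment_model(image: Image) -> DepthMap:
    return [[10.0 for _ in row] for row in image]


def generic_model(image: Image) -> DepthMap:
    return [[5.0 for _ in row] for row in image]


router = Router(
    classify=toy_classifier,
    specialists={"human": human_model, "environment": environment_model},
    fallback=generic_model,
)
```

For example, `router.reconstruct([[0.9, 0.8]])` is classified as "human" and returns `[[1.0, 1.0]]`. Keeping a fallback model reflects the trade-off Fleisher contrasts: specialists can be more refined, but a general model still handles labels no specialist covers.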

Fleisher also told us that users who try the experiment for themselves are providing Volume with valuable feedback:

It is important to say that we do not share any of the data users upload. We do, however, use it to evaluate the machine learning models to get a better understanding of what works and what doesn’t. Our goal in opening our API to the public via our new website is also to learn what tendencies, trends, and desires people have for such functionality. We also encourage users to share their Volume reconstructions socially using the share icon at the top. We believe that by reflecting the work we do internally to people online, we will be able to grow (with) this into an effective and innovative tool.

Volume is being developed as an end-to-end solution for converting flat 2D images into 3D figures and environments. While the API is still in early development, it’s pretty easy to see this becoming a go-to application suite for video editors and game developers. And, according to Fleisher, Volume will continue to get new features:

We are also looking into more popular areas such as image augmentation. We experimented with and built an application called ReTouch, which uses depth estimation to retouch images in 2.5D; this was all open-sourced. With that being said, the purpose of the Volume API is to encourage diverse uses of the same technology in different fields. By building Volume as a cloud computer vision API, we are able to provide the same results we use for 3D reconstruction to people who aren’t necessarily using it for the same purpose.

You can check out the Volume API and convert your own images into 3D using AI at the team’s website.
