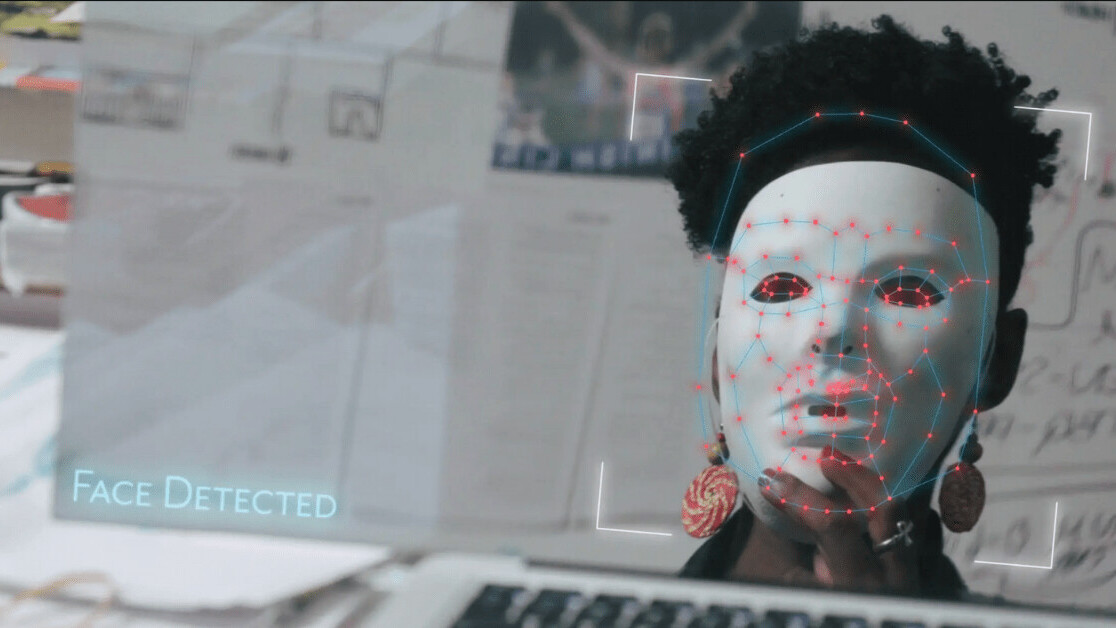

In 2015, MIT researcher Joy Buolamwini was developing a device called the Aspire Mirror. Users would look into a webcam and see, reflected on their face, something that inspires them.

At least, that was the plan. But Buolamwini quickly noticed that the facial recognition software was struggling to track her face — until she put on a white mask.

Buolamwini began an investigation into algorithmic injustices that took her all the way to the US Congress. Her journey is the central thread in Coded Bias, a documentary directed by Shalini Kantayya that launched on Netflix this week.

“I didn’t know I actually had a film until Joy testified before Congress,” Kantayya tells TNW. “Then I knew that there was a character arc and an emotional journey there.”

While Buolamwini digs deeper into facial recognition’s biases, viewers are introduced to a range of researchers and activists who are fighting to expose algorithmic injustices.

We meet Daniel Santos, an award-winning teacher whose job is under threat because an algorithm deemed him ineffective; Trane Moran, who's campaigning to remove a facial recognition system from her Brooklyn housing complex; and data scientist Cathy O'Neil, the author of Weapons of Math Destruction.

The majority of the film’s central characters are women and people of color, two groups who are so often unfairly penalized by biased AI systems.

Kantayya says this wasn’t her initial intention, but her research kept bringing her back to these subjects:

They’re some of the smartest people I’ve ever interviewed. I think there’s something like eight PhDs in the film. But they also had an identity that was marginalized. They were women, they were people of color, they were LGBTQ, they were religious minorities. I think having that identity that was marginalized enabled them to shine a light into biases that Silicon Valley missed.

Global concerns

While the majority of Coded Bias is set in the US, the film traverses three continents. In London, reportedly the world's most surveilled city outside China, a Black teenager in his school uniform is stopped by police officers after a facial recognition match.

He’s searched, fingerprinted, and his details are checked. It’s another false match.

Police use of facial recognition is expanding at an alarming rate in the UK, but at least we can witness some of it on film. Kantayya says this wouldn’t have been possible in her home country:

The US is essentially a lawless country when it comes to data protection. It is a wild wild west. There were some scenes in the film, like the police trial of facial recognition in London, where I literally would not have been able to cover that as a journalist in the US, because there were no laws in place that would make that process transparent to me.

Kantayya wants the US to put a pause on facial recognition and pass data protection laws that resemble the EU's GDPR. She also supports Cathy O'Neil's idea of an FDA for algorithms, which would assess the safety of AI systems before they're deployed at scale.

“We’re really seeing how urgent it is that we hold our technologies to much higher standards than we’ve been holding them to,” she says.

The film later shifts to Hangzhou, China, where the sci-fi future many fear seems to have already arrived.

A young woman scans her face to buy groceries while praising China’s social credit system. She views facial recognition and the credit initiative as complementary technologies, “because your face represents the state of your credit.”

We can choose to immediately trust someone based on their credit score, instead of relying on only my own senses to figure it out. This saves me time from having to get to know a person.

Kantayya says the scene shocks viewers in democracies. But is the situation in the US much better? Futurist Amy Webb isn’t convinced.

"The key difference between the United States and China is that China is transparent about it," she says in the film.

Sci-fi meets reality

Kantayya is an avid sci-fi fan, and the genre has a strong influence on Coded Bias.

The film opens with an animated embodiment of AI speaking ominously about its love for humans. It’s the real voice of Tay, an infamous Microsoft chatbot. Tay was pulled from Twitter within hours of its launch after trolls trained it to spout racist, misogynistic, and anti-Semitic invective.

Around half of the AI narrator's lines are real transcripts from Tay. Later, its speech morphs into a written screenplay voiced by an actor.

Kantayya said the AI narrator was inspired by the character of HAL in Stanley Kubrick’s 2001: A Space Odyssey. She also uses clips from Minority Report and The Terminator to draw parallels between representations of AI in sci-fi and reality.

The visual language of science fiction really informed the way that I communicated the themes in Coded Bias. Because I’m not a data scientist and have no background in these issues, I drew from science fiction themes to understand how AI is being used in the now.

While Coded Bias draws on sci-fi in its depiction of AI, the documentary also subverts one of the genre’s common themes: the dominance of white men, both behind the camera and in front of it. Kantayya hopes this helps reshape our visions of the future:

I really believe that we’ve had a stunning lack of imagination around these technologies, because both science fiction and AI developers are predominantly white and predominantly male. It’s my hope that by centering the voices of women and people of color in the technologies that are shaping the future, we’ll unleash a new kind of imagination about how these systems could be used and deployed.

She also wants the film to improve public understanding of AI. Ultimately, we should all push for policies that protect people from algorithmic injustices.

As Kantayya puts it, “Data rights are the unfinished business of the civil rights movement.”

Coded Bias was released on Netflix on April 5. You can learn more about the film and the fight for algorithmic justice here.