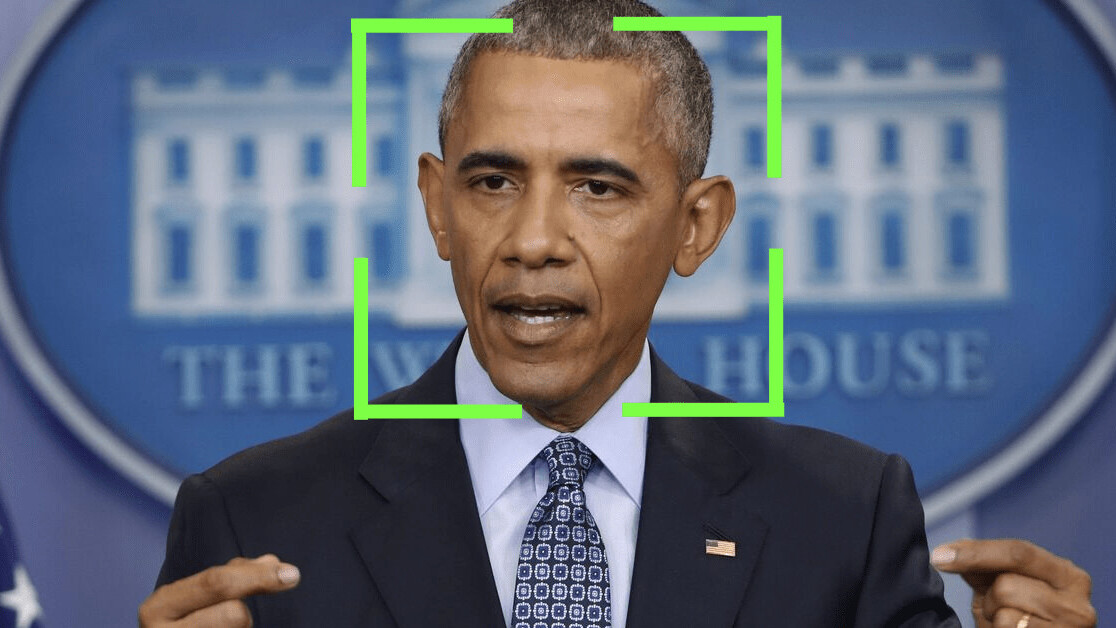

Revenge porn isn’t exactly a new phenomenon, but with advances in AI, “deepfakes” (AI-generated or AI-altered videos that look realistic) are becoming increasingly hard to distinguish from real footage. The technology has also been used to doctor political videos, such as the clip in which Obama appeared to call President Trump a “dipshit.”

To combat this, California passed two bills. The first makes it illegal to post manipulated videos of political candidates within 60 days of an election, for example replacing a candidate’s face with the intention of discrediting them. The second gives California residents the right to sue anyone who uses deepfake technology to put their image into a pornographic video.

Deepfake technology has become easily accessible. Videos can be made with apps or affordable consumer-grade equipment, which is partly why the web is flooded with pornographic videos of high-profile female celebrities. The corrosive effect deepfakes could have on politics is also obvious. One example is the doctored footage of Nancy Pelosi (D-CA) that made her sound drunk, which Facebook refused to remove from its platform.

“Voters have a right to know when video, audio, and images that they are being shown, to try to influence their vote in an upcoming election, have been manipulated and do not represent reality,” California Assemblymember Marc Berman said in a press release, as first reported by Engadget. “[That] makes deepfake technology a powerful and dangerous new tool in the arsenal of those who want to wage misinformation campaigns to confuse voters.”

The American Civil Liberties Union (ACLU) argued that the bill won’t solve the problem of people weaponizing deepfake technology for political gain. Instead, “it will only result in voter confusion, malicious litigation and repression of free speech,” the ACLU said in a letter.

While we have yet to see any governing body or politician’s reputation seriously damaged by deepfakes, we have already seen countless women’s likenesses superimposed onto sexual or pornographic videos without their consent, whether they are celebrities or not.

These first steps taken by California to combat malicious deepfakes are promising, especially for victims of revenge porn.
