There’s an obvious gender imbalance in technology and voice assistants. Whether it’s Siri, Cortana, Alexa, or another female voice telling you when to turn right on Google Maps, it’s most commonly an AI ‘woman’ who takes our commands.

With so much female servitude built into our smart devices, and with AI being deployed so rapidly, it should come as no surprise that technology is hardwiring sexism into our future. Q, billed as the world’s first genderless voice, hopes to eradicate gender bias in technology.

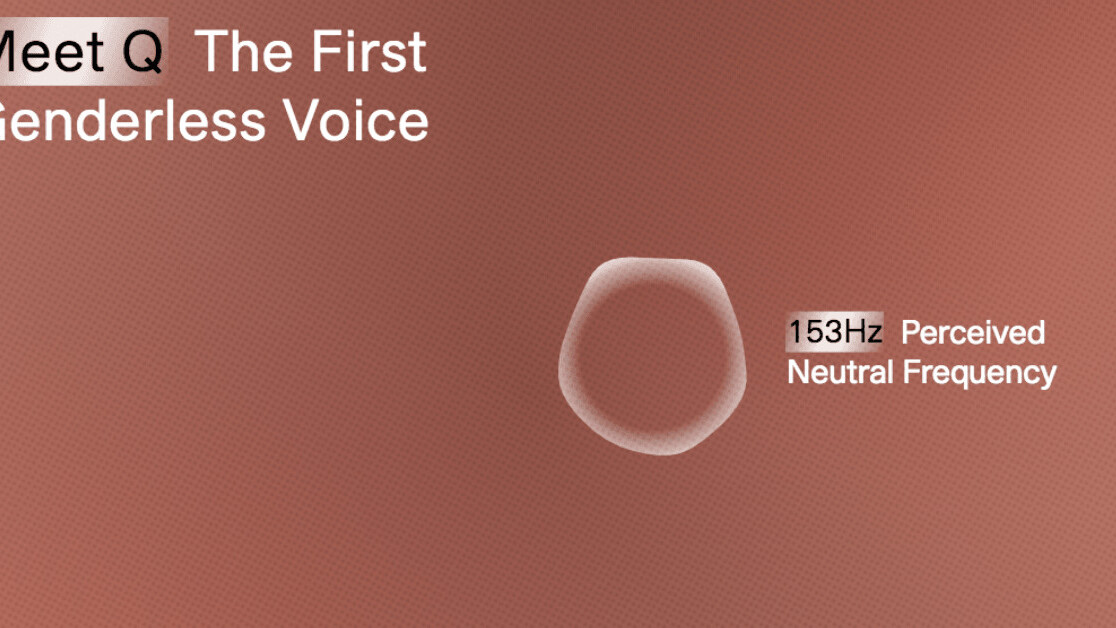

Q was created by a group of linguists, technologists, and sound designers who hope it will “end gender bias” and encourage “more inclusivity in voice technology.” The team recorded the voices of two dozen people who identify as male, female, transgender, or non-binary, in search of a voice that “does not fit within male or female binaries.” To find it, they ran a test with more than 4,600 people, who were asked to rate the voices on a scale from 1 (male) to 5 (female).

From this experiment, audio researchers were able to define a frequency range that listeners perceive as gender-neutral. They then recorded several voices, adjusting the pitch, the tone, and the formant filter, and eventually arrived at Q.

Although women are underrepresented in AI development, it’s no coincidence that almost all voice assistants are given typically female names, such as Amazon’s Alexa, Microsoft’s Cortana, and Apple’s Siri. According to several studies, people, regardless of their own gender, typically prefer to hear a male voice when it comes to authority but a female voice when they need assistance.

While a genderless voice is a step toward inclusivity, technology cannot move away from gender bias without diversity in creative and leadership roles. Gendered voice assistants reinforce deeply ingrained gender biases because the data used to train machine learning models is drawn from human behavior: robots are only sexist because the humans they learn from are.

Q is not only challenging gender stereotypes, but also encouraging tech companies to take societal responsibility when it comes to diversity and inclusivity.
