The human brain is designed to make quick and effective decisions rather than stick to facts at all times. Instead of acting rationally, we prefer to act fast. This may lead to better outcomes indeed, but it might also lead you astray. Cognitive biases can be both a blessing and a curse.

In this article, you’ll learn:

- what exactly a cognitive bias is

- why UX designers should be well aware of different cognitive biases

- how both designers and users are prone to cognitive biases

- what the most common cognitive biases in design are

Ready to find out how our brains work? Let’s dive in!

What is a cognitive bias

Although cognitive biases are not an entirely new phenomenon, the term was first defined by Daniel Kahneman and Amos Tversky in the 1970s. The two widely acclaimed scientists were researching people’s innumeracy. They found out that most subjects make decisions that are far from rational, especially when it comes to large numbers.

Instead of sticking to facts, people tend to use mental shortcuts to estimate the outcome. These shortcuts are known as heuristics, which help us solve problems quicker, but may also lead to errors in thinking, called cognitive biases.

According to the Interaction Design Foundation, a cognitive bias is a systematic (non-random) way in which the context affects judgment and decision-making. In other words, it’s all about the framing of information. We focus on different aspects depending on the surroundings. Because of that, our reasoning is not fully rational. Tversky and Kahneman found that framing the same information differently may lead to different outcomes.

Here’s a classic example of the framing bias in action:

$290 may appear like a high price, yet it seems like a bargain when you know that the same product would normally cost $400. A $110 saving changes the perspective, doesn’t it?

This popular case is just the tip of the iceberg. It’s a proven and tested marketing trick to generate more sales, but there’s much more to cognitive biases that could matter to UX designers. Here’s why this kind of knowledge is sure to come in handy:

Why cognitive biases matter for UX designers

Before we dig deeper, it’s important to note that both the designers and the users are prone to cognitive biases. None of us is fully immune to heuristics and prejudices, and this is exactly why we should be aware of them. The same rules that affect how the users make decisions apply to UX designers.

As we’ve already mentioned above, we’re all prone to framing. The context, as well as our previous experiences, all affect design decisions. All these external factors make us focus on specific aspects of the issue — or ignore the other ones.

Kathryn Whitenton of the Nielsen Norman Group used a brilliant example to describe how this works for UX designers. Imagine that you’ve conducted a usability test with 20 users. The outcomes can be described in two different ways:

- 20% of users couldn’t find the function on the website.

- 80% of users found the function on the website.

See the difference? The researchers from Nielsen Norman Group decided to test both versions in an online quiz. Here’s what happened: 39% of UX designers who saw the success rate voted for a redesign. Among respondents who saw the failure rate, 51% thought that the feature needed to be redesigned.

This shows how framing research results and statistics in a different manner may lead to significantly different design decisions. On the other hand, the framing bias can affect the users too. It’s mostly visible in the perception of the pricing, what is expensive, and what can be considered a bargain.
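One way to soften the framing effect in a research report is to show the raw counts together with both percentages, so that neither frame stands alone. The snippet below is only a minimal sketch of that idea: the `TaskResult` class and the `report` function are hypothetical names made up for illustration, and the figure of 4 failures is simply 20% of the 20 users in the example above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TaskResult:
    """Outcome of one usability task (hypothetical structure for illustration)."""
    participants: int
    failures: int

    @property
    def failure_rate(self) -> float:
        return self.failures / self.participants

    @property
    def success_rate(self) -> float:
        return 1 - self.failure_rate


def report(result: TaskResult) -> str:
    """Present the raw counts alongside both the success and the failure frame."""
    successes = result.participants - result.failures
    return (
        f"{successes} of {result.participants} users found the function "
        f"({result.success_rate:.0%} success, {result.failure_rate:.0%} failure)"
    )


# 20% of 20 participants means 4 people could not find the function.
result = TaskResult(participants=20, failures=4)
print(report(result))
```

Reading a single line that contains the counts and both rates makes it harder for one number to steer the redesign decision on its own.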

Below we’re going to describe how the most common cognitive biases apply to both the users and the UX designers.

Ready to learn more?

Common cognitive biases in UX design

We’ve used one of the most popular biases, the framing bias, as the main example. Here are a few other cases that are worth keeping in mind:

Anchoring bias

The anchoring bias (also known as the anchoring principle) is about relying on a single aspect and ignoring the other ones involved. Because people use it to make decisions, it’s also a judgment heuristic, exactly like framing.

How it works for the user: Anchoring bias can be hugely helpful in understanding user interfaces. This is the bright side of the phenomenon: the user can stick to one clue and therefore learn to use the application faster. This is in line with the principle of least effort and Jakob’s law. We describe these two in our in-depth article about psychological principles in UX.

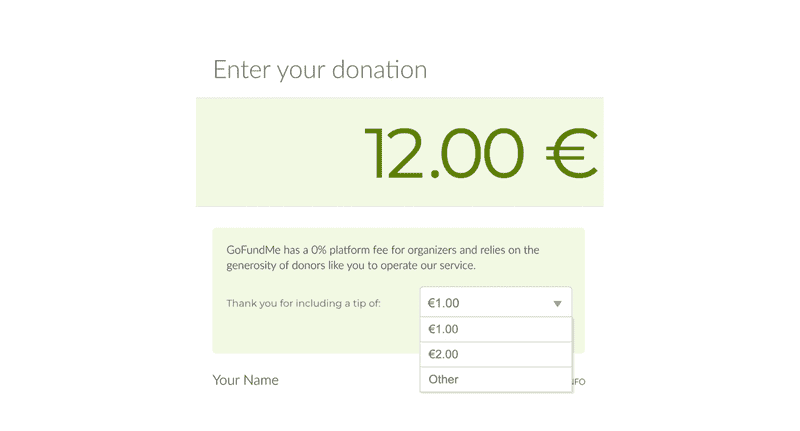

Suggested values are also a good example here. Most non-profit websites, such as GoFundMe, don’t charge a set fee for their services. Instead, they provide a suggestion:

How it works for the UX researcher: Because of the primacy effect, the first piece of information we receive usually becomes the anchor. It’s good to keep that in mind for user testing. The respondents may prefer the first version they were introduced to simply because it was the first one, not because it was actually better. One of the ways to avoid this is through A/B testing, where you can show different versions to your users in a different order.
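Varying the order in which each participant sees the versions is usually called counterbalancing, which is a related technique and not quite the same as a classic A/B test (where each user sees only one version). Here is a minimal sketch of how such a schedule could be generated. The function name `counterbalanced_orders`, the version labels, and the participant IDs are all hypothetical and chosen only for illustration.

```python
from itertools import permutations


def counterbalanced_orders(versions, participants):
    """Assign each participant a presentation order, cycling through all orderings."""
    orders = list(permutations(versions))
    return {
        participant: orders[index % len(orders)]
        for index, participant in enumerate(participants)
    }


# Hypothetical version labels and participant IDs.
schedule = counterbalanced_orders(["A", "B"], ["p1", "p2", "p3", "p4"])
for participant, order in schedule.items():
    print(participant, "sees", " then ".join(order))
```

With two versions and an even number of participants, each version is shown first equally often, so a preference for "whatever came first" no longer favors one design. With three or more versions the number of orderings grows quickly, so this simple cycling approach is best suited to small comparisons.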

Wording bias

What is the best qualitative research method, and why is it session recording?

This is a classic (a little exaggerated, yes) example of the wording bias, which is also known as response bias. It occurs when the question itself influences the answer.

How it works for the user: Imagine taking a survey, where the first question is: How difficult was it for you to use this feature? The question alone implies that using this particular feature is difficult, at least to some extent.

How it works for the UX researcher: The wording bias largely affects the validity of surveys. Here’s how different types of biased questions can affect research results:

- The leading question, e.g. Do you prefer the previous or the improved version of the app? The word improved suggests the new version is better than the old one.

- The double-barreled question, e.g. Are you enjoying the new inbox and text editor? What if the user is a fan of the inbox yet they’re skeptical about the editor? In this case, the respondent has to express the same opinion about both features.

- The absolute question, which may not always work. Sometimes the yes/no answer misses the point. For instance, when you ask the user if they enjoy using the new feature, you might want to leave space for their comments and ideas. This way, you can gain much more in-depth insights.

Sunk cost fallacy

Again, this is an example of how past decisions influence current choices. The sunk cost fallacy means that if we’ve already invested a lot of resources in something, we’re likely to keep investing more. It happens because we don’t want our efforts to go to waste. This is what makes us finish bad movies: we’re not likely to stop watching if we’re already halfway through, right?

The sunk cost fallacy goes hand in hand with another phenomenon called loss aversion: our brains tend to treat losses as more severe than equivalent gains. What’s more, some studies suggest that losses weigh psychologically about twice as much as gains! For instance, losing $100 hurts more than gaining $100 satisfies.

The same rules apply to user experience design.

How it works for the user: The sunk cost bias is often a part of the user flow, especially when it comes to signing up for a service, or making a purchase. This mechanism can be triggered by different design tricks, such as:

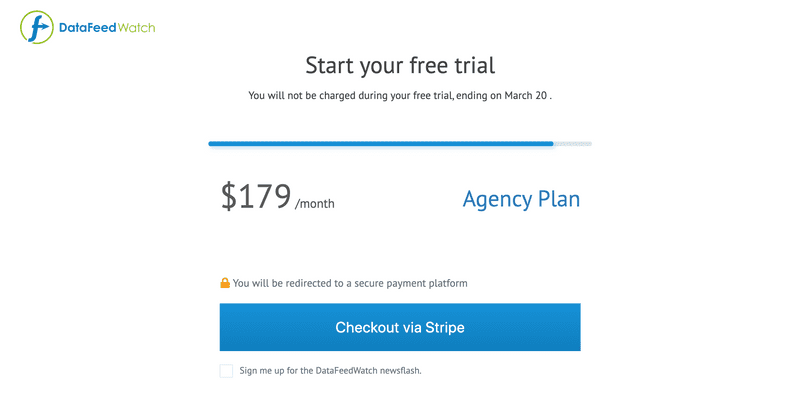

- Progress bar — This solution encourages users to complete their actions, even if they require a certain commitment. Have a look at this sign-up form example from DataFeedWatch:

- At this stage, the potential customer is most likely to drop out, as they need to provide payment details. The progress bar, however, indicates that it’s the final step of the process. It doesn’t make much sense to abandon the form now that you’ve gone so far, right?

- Sunk rewards — Used in loyalty programs, sunk rewards encourage the user to stick to your product. It’s a bit like collecting stamps in a cafe so you can get the 10th coffee for free. When you’ve got just one or two purchases left to get something extra, you probably won’t resist the temptation.

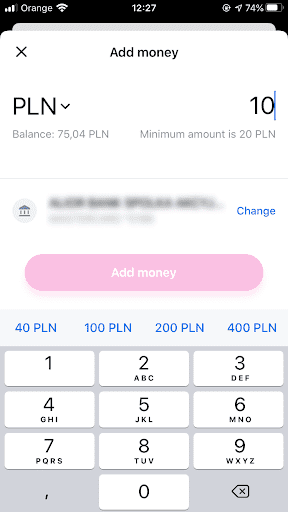

- Top-up suggestions– Have a look at this example from Revolut:

To use the service, you need to add money to your account first. But here’s the trick: you can’t add less than a certain amount (in this case, 20 PLN). This often means that you’re obliged to have more money in your account than you first needed. Because of that, you’re likely to use Revolut more often. What’s more, suggested top-up amounts right above the keyboard fulfill the same function. See the pattern?

How it works for the UX designer: You’ve probably already guessed it: we tend to stick to bad design because of the sunk cost fallacy. If you’ve spent long hours working on a feature, you’ll want to stick with it even if it turns out to be a bad idea. One of the ways to avoid this is through agile development. Short sprints and iterations make it easier to apply changes along the way and avoid wasting resources.

Social desirability bias

This one’s about our tendency to want to seem likable and be accepted. Social desirability bias (also known as the friendliness bias) encourages the user to answer in a way they think is expected by the researcher.

How it works for the user: Interestingly, the social desirability bias typically operates unconsciously. Most of the time, the respondents don’t realize that they respond in a kinder, more favorable manner. For instance, if you ask “How do you like the new dashboard?”, the user is likely to select a higher rating, just to make you feel better about it.

How it works for the UX researcher: Now that you know how social desirability bias works, you might be wondering how to prevent it from happening. This bias can be reduced through indirect questions. Instead of asking how the user feels about a feature, you can ask “How do you think an average user would interact with this feature?” This way, they won’t feel the urge to appear as nice and friendly.

Key takeaways and cognitive biases reading list

The most important finding? We’re all prone to cognitive biases and the best we can do is to stay aware. Educating yourself about psychological principles that affect our minds is sure to pay off in the long run. It will help you understand user behavior, and it’s going to improve the quality of your work as a UX professional as well.

Here’s our suggested reading list, if you’d like to dig deeper:

Thinking, Fast and Slow by Daniel Kahneman

Biases and Heuristics by Henry Priest

Everyday Bias by Howard J. Ross

The Art of Thinking Clearly by Rolf Dobelli

We hope you found this inspiring. Happy learning!

This article was originally published on Livesession.io by Kalina Tyrkiel, Content Designer, passionate about UX Writing, coffee geek, and applied psychology student. She is based in Krakow, Poland. You can read the original article here.
