New York recently passed a bill providing guidelines for the operation of automated hiring software in the city. Allegedly, the city council’s aim with this legislation was to protect New Yorkers from biased AI. But, from where we’re sitting, it looks like it’s going to do the exact opposite.

Up front: The bill, dubbed Int 1894-2020, was meant to address the issue of bias in AI software.

Companies such as HireVue claim their algorithms can actually remove hiring bias. The shtick here is that computers can’t be bigots.

But the reality is that there’s no magic way to remove bias from an AI system. Algorithms aren’t magical spells. They’re usually math-based guidelines. And, because computers and algorithms are designed and programmed by humans, they contain inherent bias.

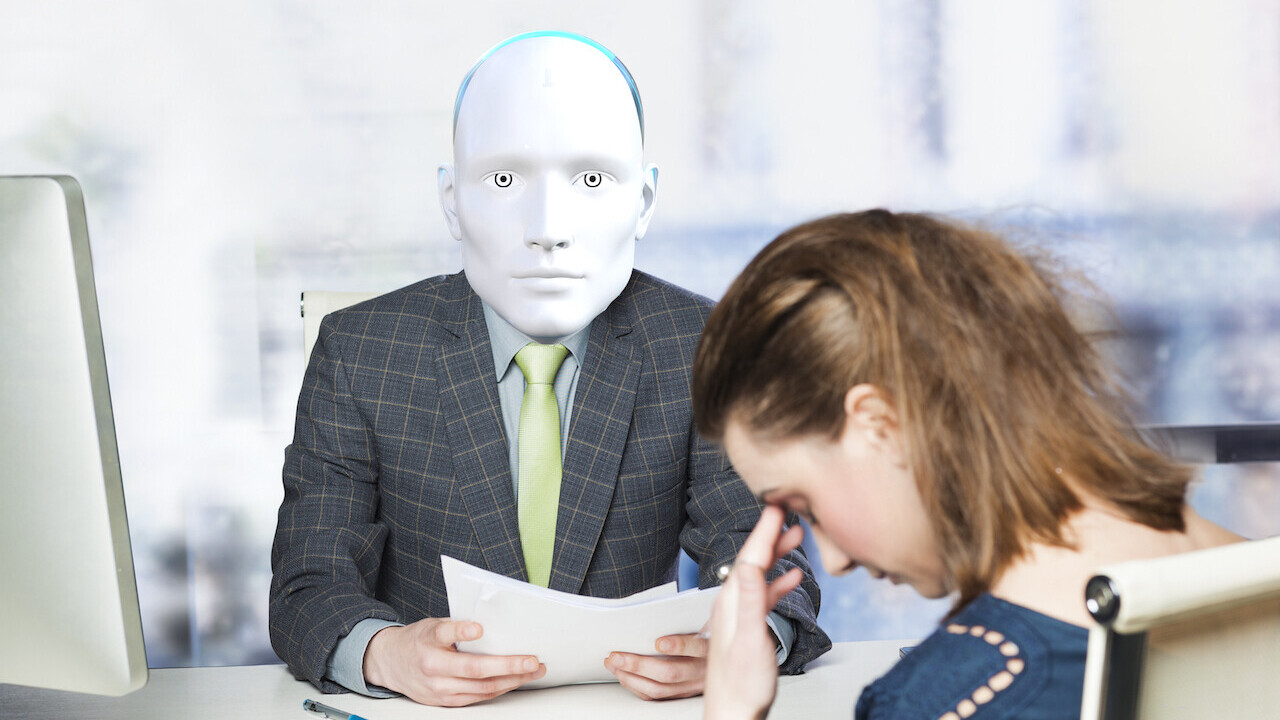

In other words: Computers cannot make value judgements about human beings. All they can do is make “guesses.” Some guesses are harmless, like when Netflix guesses you’ll like a certain movie and you don’t. You can just watch something else. Other guesses are bad, like when an algorithm decides you’re not worth hiring.

AI absolutely cannot tell if you’re lying. AI can’t understand emotions. And it certainly can’t measure human intelligence. That makes most of the claims made by companies specializing in automated hiring systems specious at best and outright lies at worst.

But the real question here isn’t whether an AI can predict whether a human will be a good fit for a given job (it definitely can’t). It’s whether the new bill can protect New Yorkers from predatory snake oil companies that claim it can.

Good idea: passing a bill that protects New Yorkers from predatory snake oil companies.

It’s long past time politicians in the US did something about these demonstrably harmful AI systems and the companies peddling them.

Bad idea: letting the software’s vendors decide whether an algorithm is biased or not.

Per the bill:

> This bill would require that a bias audit be conducted on an automated employment decision tool prior to the use of said tool. The bill would also require that candidates or employees that reside in the city be notified about the use of such tools in the assessment or evaluation for hire or promotion, as well as, be notified about the job qualifications and characteristics that will be used by the automated employment decision tool. Violations of the provisions of the bill would be subject to a civil penalty.

But those violations? They’re feckless. It’s up to the vendor to conduct and report the audits demonstrating its algorithms aren’t biased.

Quick take: This is like letting Thanos conduct audits and report compliance with New York City ethics. “According to the big purple dude, his use of the Infinity Gauntlet is perfectly ethical. He did an audit and everything.”

In this case, New York has passed a bill that gives AI vendors the power to legally determine whether they’re being ethical or not. This doesn’t protect citizens; it protects scammy AI companies.

HireVue gave a statement to the AP that sums it up best. Per that article:

> HireVue, a platform for video-based job interviews, said in a statement this week that it welcomed legislation that “demands that all vendors meet the high standards that HireVue has supported since the beginning.”

Exactly. HireVue currently uses AI to allegedly assess candidates’ “e-motions.” The company actually claims computer vision algorithms can determine a user’s “emotional intelligence.” This, of course, is not possible because AI doesn’t have magic powers.

And, if that’s the standard New York’s using to determine whether an AI system is biased, then it’s open season on the Big Apple. I can’t think of a single AI startup, no matter how awful and scammy, that couldn’t pass that bar.
