As a birder, I had heard that if you paid careful attention to the head feathers on the downy woodpeckers that visited your bird feeders, you could begin to recognize individual birds. This intrigued me. I even went so far as to sketch the birds at my own feeders and found this to be true, up to a point.

Meanwhile, in my day job as a computer scientist, I knew that other researchers had used machine learning techniques to recognize individual faces in digital images with a high degree of accuracy.

These projects got me thinking about ways to combine my hobby with my day job. Would it be possible to apply those techniques to identify individual birds?

So I built a data-collection setup: a type of bird feeder favored by woodpeckers, paired with a motion-activated camera. I set up my monitoring station in my suburban Virginia yard and waited for the birds to show up.

Image classification

Image classification is a hot topic in the tech world. Major companies like Facebook, Apple and Google are actively researching this problem to provide services like visual search, auto-tagging of friends in social media posts and the ability to use your face to unlock your cellphone. Law enforcement agencies are very interested as well, primarily to recognize faces in digital imagery.

When I started working with my students on this project, image classification research focused on a technique that looked at image features such as edges, corners and areas of similar color. These features are often pieces that can be assembled into a recognizable object. Those approaches were about 70 percent accurate on benchmark data sets with hundreds of categories and tens of thousands of training examples.
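
To make the older approach concrete, here is a minimal Python sketch of one hand-crafted feature of the kind described above: fixed Sobel filters that respond to edges, with the edge strengths summarized as a histogram. It is an illustration only, not the feature set used in this project; the function names and the representation of an image as a small grid of grayscale values are assumptions.

```python
# Illustrative only: hypothetical helper names; an image is assumed to be a
# list of rows of grayscale values. The filter weights are fixed by hand.
SOBEL_X = [[-1, 0, 1],
           [-2, 0, 2],
           [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1],
           [0, 0, 0],
           [1, 2, 1]]


def apply_filter(image, kernel):
    """Slide a 3x3 kernel over every interior pixel (no padding) and return the weighted sums."""
    rows, cols = len(image), len(image[0])
    out = []
    for r in range(1, rows - 1):
        row = []
        for c in range(1, cols - 1):
            total = 0.0
            for i in range(3):
                for j in range(3):
                    total += kernel[i][j] * image[r + i - 1][c + j - 1]
            row.append(total)
        out.append(row)
    return out


def edge_magnitude(image):
    """Combine horizontal and vertical responses into an edge strength per pixel."""
    gx = apply_filter(image, SOBEL_X)
    gy = apply_filter(image, SOBEL_Y)
    return [[(x * x + y * y) ** 0.5 for x, y in zip(rx, ry)]
            for rx, ry in zip(gx, gy)]


def edge_histogram(image, bins=4):
    """Summarize edge strengths as a fixed-length feature vector (fraction of pixels per bin)."""
    mags = [m for row in edge_magnitude(image) for m in row]
    top = max(mags) or 1.0  # avoid dividing by zero on a perfectly flat image
    counts = [0] * bins
    for m in mags:
        counts[min(int(m / top * bins), bins - 1)] += 1
    return [c / len(mags) for c in counts]
```

A classifier would then be trained on vectors like the one `edge_histogram` returns; the filters themselves never change, so the features capture only what their designer chose to look for.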

Recent research has shifted toward the use of artificial neural networks, which identify their own features that prove most useful for accurate classification. Neural networks are modeled very loosely on the patterns of communication among neurons in the human brain. Convolutional neural networks, the type that we are now using in our work with birds, are modified in ways that were modeled on the visual cortex. That makes them especially well-suited for image classification problems.
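
The core operation of a convolutional layer can be sketched in a few lines of Python: slide a small kernel over the image, pass the responses through a ReLU (which zeroes out negative values), and keep the strongest response in each small window (max pooling). In a real network the kernel values and biases are parameters adjusted during training, which is how the network arrives at its own features; here they are supplied by hand purely for illustration, and all names are hypothetical. As in most deep learning libraries, the "convolution" is computed as cross-correlation, without flipping the kernel.

```python
# Illustrative forward pass of one convolutional layer (hypothetical names).
# In a trained CNN, `kernels` and `biases` would be learned, not hand-written.


def conv2d(image, kernel):
    """Valid (unpadded) 2-D cross-correlation of an image with one kernel."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(kernel[i][j] * image[r + i][c + j]
                 for i in range(kh) for j in range(kw))
             for c in range(out_w)]
            for r in range(out_h)]


def relu(feature_map):
    """Replace negative responses with zero."""
    return [[max(0.0, v) for v in row] for row in feature_map]


def max_pool(feature_map, size=2):
    """Keep the strongest response in each non-overlapping size x size window."""
    return [[max(feature_map[r + i][c + j]
                 for i in range(size) for j in range(size))
             for c in range(0, len(feature_map[0]) - size + 1, size)]
            for r in range(0, len(feature_map) - size + 1, size)]


def conv_layer(image, kernels, biases):
    """Produce one pooled feature map per kernel: conv -> add bias -> ReLU -> pool."""
    return [max_pool(relu([[v + b for v in row] for row in conv2d(image, k)]))
            for k, b in zip(kernels, biases)]
```

Real networks stack many such layers, so later layers combine simple responses like these into detectors for larger patterns.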

Some other researchers have already tried similar techniques on animals. I was inspired in part by computer scientist Andrea Danyluk of Williams College, who has used machine learning to identify individual spotted salamanders. This works because each salamander has a distinctive pattern of spots.

Progress on bird ID

While my students and I didn’t have nearly as many images to work with as most other researchers and companies, we had the advantage of some constraints that could boost our classifier’s accuracy.

All of our images were taken from the same perspective, had the same scale and fell into a limited number of categories. All told, only about 15 species ever visited the feeder in my area. Of those, only 10 visited often enough to provide a useful basis for training a classifier.

The limited number of images was a definite handicap, but the small number of categories worked in our favor. When it came to recognizing whether the bird in an image was a chickadee, a Carolina wren, a cardinal or something else, an early project based on a facial recognition algorithm achieved about 85 percent accuracy – good enough to keep us interested in the problem.

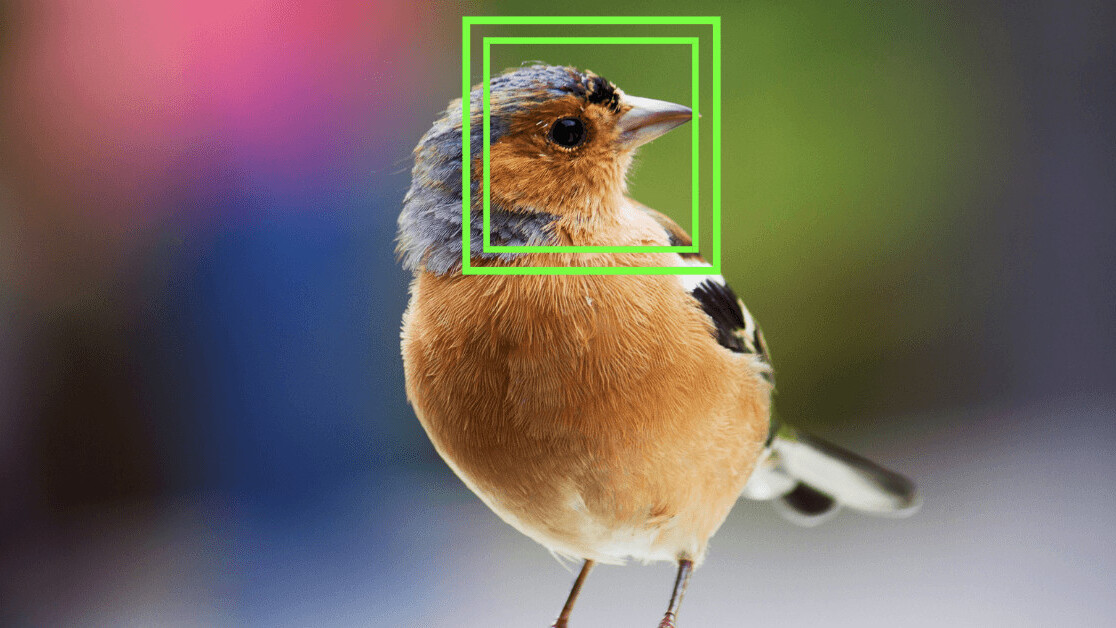

Identifying birds in images is an example of a “fine-grained classification” task, meaning that the algorithm tries to discriminate between objects that are only slightly different from each other. Many birds that show up at feeders are roughly the same shape, for example, so telling the difference between one species and another can be quite challenging, even for experienced human observers.

The challenge only ramps up when you try to identify individuals. For most species, it simply isn’t possible. The woodpeckers that I was interested in have strongly patterned plumage but are still largely similar from individual to individual.

So one of our biggest challenges was the human task of labeling the data to train our classifier. I found that the head feathers of downy woodpeckers weren’t a reliable way to distinguish between individuals, because those feathers move around a lot: the birds use them to express irritation or alarm.

The patterns of spots on the folded wings, however, are more consistent and seemed to work well for telling one bird from another. Those wing feathers were almost always visible in our images, while the head patterns could be obscured depending on the angle of the bird’s head.

In the end, we had 2,450 pictures of eight different woodpeckers. When it came to identifying individual woodpeckers, our experiments achieved 97 percent accuracy. However, that result needs further verification.
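
Accuracy figures like these are usually computed on images held out from training: the share of held-out images whose predicted label matches the human-assigned one. The Python sketch below is illustrative and is not the project’s evaluation code. It splits labeled images so that every individual bird with more than one image appears in both sets, then reports overall and per-bird accuracy. Labels are assumed to be simple strings per image, and the function names are hypothetical.

```python
import random
from collections import defaultdict


def stratified_split(labels, test_fraction=0.2, seed=0):
    """Return sorted (train_idx, test_idx), holding out roughly test_fraction of each bird's images."""
    by_label = defaultdict(list)
    for idx, label in enumerate(labels):
        by_label[label].append(idx)
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    train, test = [], []
    for indices in by_label.values():
        rng.shuffle(indices)
        n = len(indices)
        # Hold out at least one image per bird, but always keep one for training;
        # a bird with a single image goes to training only.
        n_test = min(n - 1, max(1, round(n * test_fraction)))
        test.extend(indices[:n_test])
        train.extend(indices[n_test:])
    return sorted(train), sorted(test)


def accuracy(true_labels, predicted):
    """Fraction of images whose predicted label equals the true label."""
    correct = sum(t == p for t, p in zip(true_labels, predicted))
    return correct / len(true_labels)


def per_individual_accuracy(true_labels, predicted):
    """Accuracy computed separately for each bird (keyed by true label)."""
    hits, totals = defaultdict(int), defaultdict(int)
    for t, p in zip(true_labels, predicted):
        totals[t] += 1
        hits[t] += (t == p)
    return {label: hits[label] / totals[label] for label in totals}
```

A per-bird breakdown is one way to check whether a high overall score hides an individual that the classifier rarely gets right.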

How can this help birds?

Ornithologists need accurate data on how bird populations change over time. Since many species are very specific in their habitat needs when it comes to breeding, wintering and migration, fine-grained data could help researchers assess the effects of a changing landscape.

Data on individual species like downy woodpeckers could then be matched with other information, such as land use maps, weather patterns, human population growth and so forth, to better understand the abundance of a local species over time.

I believe that a semiautomated monitoring station is within reach at modest cost. My monitoring station cost around US$500. Recent studies suggest that it should be possible to train a classifier using a much broader group of images, then fine-tune it quickly and with reasonable computational demands to recognize individual birds.
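
The cheapest form of that approach keeps a network pretrained on a broad image collection fixed and trains only a new final layer on its outputs. The Python sketch below shows that last step as softmax (multinomial logistic) regression fitted by stochastic gradient descent. It assumes the feature vectors have already been produced by such a pretrained network, which is not shown; the function names are hypothetical. Full fine-tuning, as opposed to this simplified stand-in, would also adjust some of the pretrained weights.

```python
import math


def softmax(scores):
    """Convert raw scores to probabilities (shifted by the max for numerical stability)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def train_final_layer(features, labels, n_classes, lr=0.5, epochs=200):
    """Fit only a new linear + softmax layer on fixed feature vectors.

    Assumption: `features` are outputs of a frozen, pretrained network
    (hypothetical; not shown). `labels` are integer bird IDs 0..n_classes-1.
    """
    dim = len(features[0])
    weights = [[0.0] * dim for _ in range(n_classes)]
    bias = [0.0] * n_classes
    for _ in range(epochs):
        for x, y in zip(features, labels):
            probs = softmax([sum(w * xi for w, xi in zip(weights[k], x)) + bias[k]
                             for k in range(n_classes)])
            for k in range(n_classes):
                # Gradient of cross-entropy loss with respect to class k's score.
                grad = probs[k] - (1.0 if k == y else 0.0)
                for d in range(dim):
                    weights[k][d] -= lr * grad * x[d]
                bias[k] -= lr * grad
    return weights, bias


def predict(weights, bias, x):
    """Return the index of the highest-scoring class."""
    scores = [sum(w * xi for w, xi in zip(wk, x)) + bk for wk, bk in zip(weights, bias)]
    return max(range(len(scores)), key=scores.__getitem__)
```

Training touches only `n_classes * (dim + 1)` numbers, which is why this step can run quickly on modest hardware.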

Projects like Cornell Laboratory of Ornithology’s eBird have put a small army of citizen scientists on the ground for monitoring population dynamics, but the bulk of those data tends to be from locations where people are numerous, rather than from locations of specific interest to scientists.

An automated monitoring station approach could provide a force multiplier for wildlife biologists concerned with specific species or specific locations. This would broaden their ability to gather data with minimal human intervention.

This article is republished from The Conversation by Lewis Barnett, Associate Professor of Computer Science, University of Richmond under a Creative Commons license. Read the original article.
