Jared Ficklin will be speaking at TNW2020, October 1 & 2, where the latest trends and emerging best practices in product design will be explored. Secure your online ticket now.

Each day I, like many others, sit with my laptop or smartphone and open a collection of apps. Throughout the day I change that collection, closing some and opening others. I focus, refocus, arrange and rearrange them constantly. Each time I am effectively assembling software into some imagined workflow, and workflows are the engine of modern productivity.

The feature I use to set up these workflows is a largely hidden one that we designers call ‘Multitask Computing.’ It is most commonly part of the platform’s operating system. Multitasking is why people still own laptops and why designers work very hard to add new cross-app features to our handheld mobile computers.

When it comes to inventing, optimizing, and mastering workflows, humans are perfectly suited to the task of assembling technologies in ways that augment their capabilities. Biologically, it’s how our minds work, and it’s how, over the course of evolution, we bested larger, stronger cousins who possessed better ways to sense their surroundings.


So naturally I frequently visit an app store to find a new app that is perfectly suited to improving some part of a workflow. There is an often-discussed trend that we are moving away from the app economy. The friction of finding an app is staggering, and the costs imposed by app store monopolies are drawing lawsuits that may lead to regulation. But rest assured, the app economy is protected by the infinite workflows it improves.

But there are also areas where monolithic software is seeking to capture entire workflows. argodesign (where I am a founding partner) is currently working with DreamWorks on software to improve the process of movie making. We have done similar work for other large companies that want to consolidate the cruft of the many apps that make up a workflow into a more streamlined experience.

Fields that have developed specialized workflows can be improved through consolidation. But this is a niche approach, and even then, these consolidations often reveal their own pattern of multitasking, like the many modules and marketplaces of Salesforce.

It’s clear, however, that our attempts to improve workflows signal both their value and the fact that the current patterns of multitasking are reaching an edge…

Multitasking in a new hybrid world

To keep improving workflows, we need to improve multitasking itself. Spatial Computing offers us a way past that edge into new, more effective patterns of multitasking.

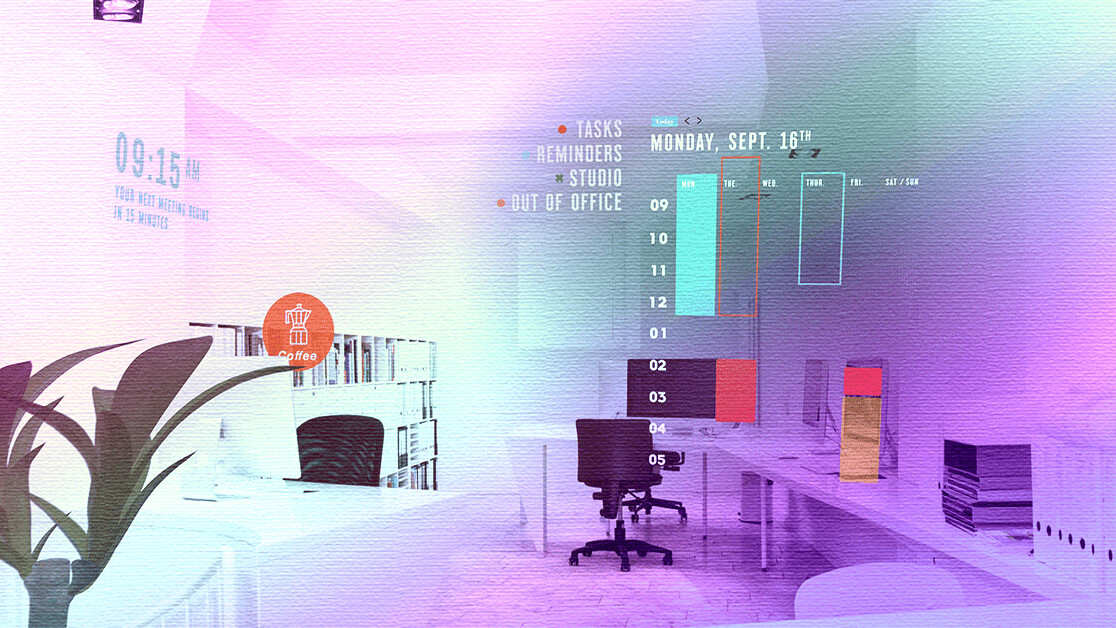

Increasingly, with the help of wearable mobile computers such as the Magic Leap One, my digital lifestyle is joining me in the real world. We are a strategic design partner for Magic Leap, and their device is establishing a new pattern of computing that advances the power of multitask computing by offering new ways to assemble software into workflows. This promises to magnify the impact of computing in a very human way.

Wearable mobile computers like the Magic Leap One or HoloLens 2 are capable of Augmented Reality (AR), Mixed Reality (MR), and Spatial Computing. They offer a new pattern of computing, with displays that feel vast compared to screens because they can project the digital into the physical space all around you.

But they are more than a simple heads-up display. They also possess a computer vision stack and software that allow the context of the physical space you are in to participate in computing: a ‘placefullness,’ if you will.

I am the creative lead on the Magic Leap project, so I use these devices every day. And while much is said about the amazing experiences one can have with AR and MR, we also see a ton of value in how Spatial Computing as a pattern can improve Multitask Computing, which means amplifying the human ability to put together productive workflows.

Goodbye screen edges, hello placefullness

The first advantage is the display, and here the edge turns out to be literal. We are running into the edges of our screens, even as those screens keep getting wider to accommodate more running apps.

With a wearable mobile computer, there is no edge to the screen. The entire physical space around you can now hold running applications. On Magic Leap, this new ‘desktop’ is called ‘The Landscape,’ and in the Landscape you can take a Google Meet call and set it just off your left shoulder or scale it up to theatrical proportions. That trick is even more useful for calendars… especially if you have seen my calendar.

The work of arranging and rearranging apps does not go away. In fact, it gains a new dimension of depth and must accommodate physical objects. But within that emerges a new value that lends itself particularly well to workflows: you can put software in context, next to the physical thing or in the physical place it represents.

I call this extra context ‘placefullness,’ a concept first introduced to me by argo founder Mark Rolston. For consumers, placefullness will bring a lot of Pokémon Go-style fun. And because our memories favor geography, placefullness will offer new organizational metaphors for computing. For businesses, placefullness means digital workflows can now follow physical workflows.

Imagine yourself as a facilities engineer at a small brewery. You have gone digital via the Internet of Things, so somewhere you have a room filled with screens showing gauges that represent things happening elsewhere. The ability to permanently place a link or app in physical space means you can move the digital gauge, the control software, or the digital repair ticket next to the tank, the valve, or the repair job.

Frictionless digital world

This new kind of multitasking is free of the friction of finding a screen, distracting yourself with that screen, and going through all the steps of calling up the right application. Each instance feels like a small friction, but multiply it by the thousands of times it happens every day and the impact on computing is huge. However, using this pattern requires a person to wear the computer and then place the links and software in the space around them.

Right now, the price and size of wearable mobile computers are such that adoption is limited to businesses that can capture the value of these new workflows, to novel experiential activations, and to the bleeding-edge adopters who have been waiting a generation for this hardware to come together into an approachable package.

For many businesses, the current generation of devices is already very deployable. We all know Moore’s Law, and these devices will very quickly reach the form factor, price, and platform support that bring early-adopter and then general adoption.

For a spatial computer to work, it must be given sight and human-like sensing capabilities. It must also adjust its interaction model to add a layer of gesture and voice alongside traditional controls. It sees and recognizes the human context. The computer itself becomes more human.

When you wear a computer, you are looking outward at humanity rather than inward into computing. It’s a pattern that suits humans, and even though over the long tail you can imagine countless ways AI and computing will augment and amplify our capabilities, in my view there’s no question that humans are the last mile in computing.
