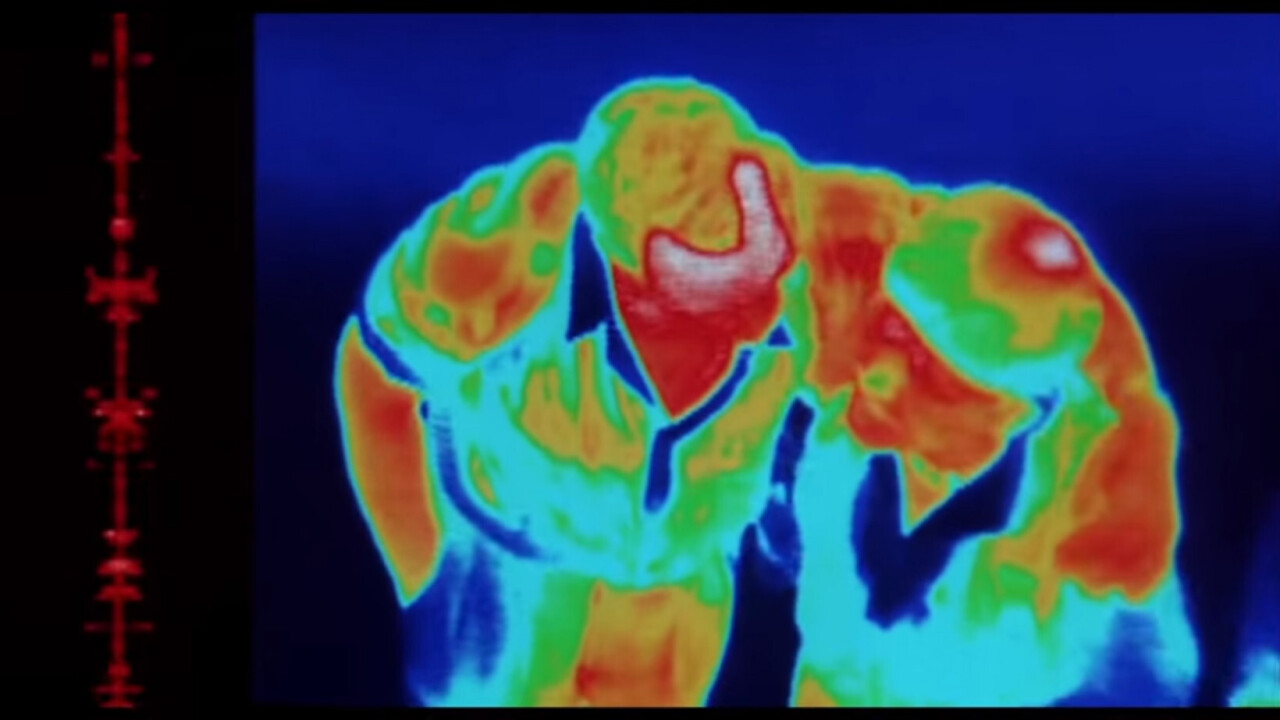

The US Army just took a giant step toward developing killer robots that can see and identify faces in the dark.

DEVCOM, the US Army’s corporate research department, last week published a preprint paper describing an image database for training AI to perform facial recognition on thermal images.

Why this matters: Robots can use night vision optics to see in the dark, but until now there has been no established way to train them to identify surveillance targets using thermal imagery alone. This database, made up of hundreds of thousands of images that pair ordinary visible-light photos of people with corresponding thermal images, aims to change that.

How it works: Much like any other facial recognition system, an AI would be trained to categorize images by learning from labeled examples. The AI doesn’t care whether the faces are captured in natural light or as thermal images; it just needs copious amounts of data to get “better” at recognition. As far as we know, this database is the largest to include thermal images. But with fewer than 600,000 images and only 395 subjects, it’s actually relatively small compared to standard facial recognition databases.

This lack of comprehensive data means that a model trained on it simply wouldn’t be very good at identifying faces. Current state-of-the-art facial recognition already performs markedly worse on faces other than those of white men, and thermal imagery contains less uniquely identifiable detail than visible-light images.

These drawbacks are evident in the DEVCOM researchers’ own conclusions:

> Analysis of the results indicates two challenging scenarios. First, the performance of the thermal landmark detection and thermal-to-visible face verification models were severely degraded on off-pose images. Secondly, the thermal-to-visible face verification models encountered an additional challenge when a subject was wearing glasses in one image but not the other.

Quick take: The real problem is that the US government has shown time and time again that it’s willing to use facial recognition software that doesn’t work very well. In theory, this could improve target identification in battlefield scenarios, but in practice it is more likely to result in the deaths of innocent Black and brown people when police or Predator drones use it to identify the wrong suspect in the dark.

H/t: Jack Clark, Import AI