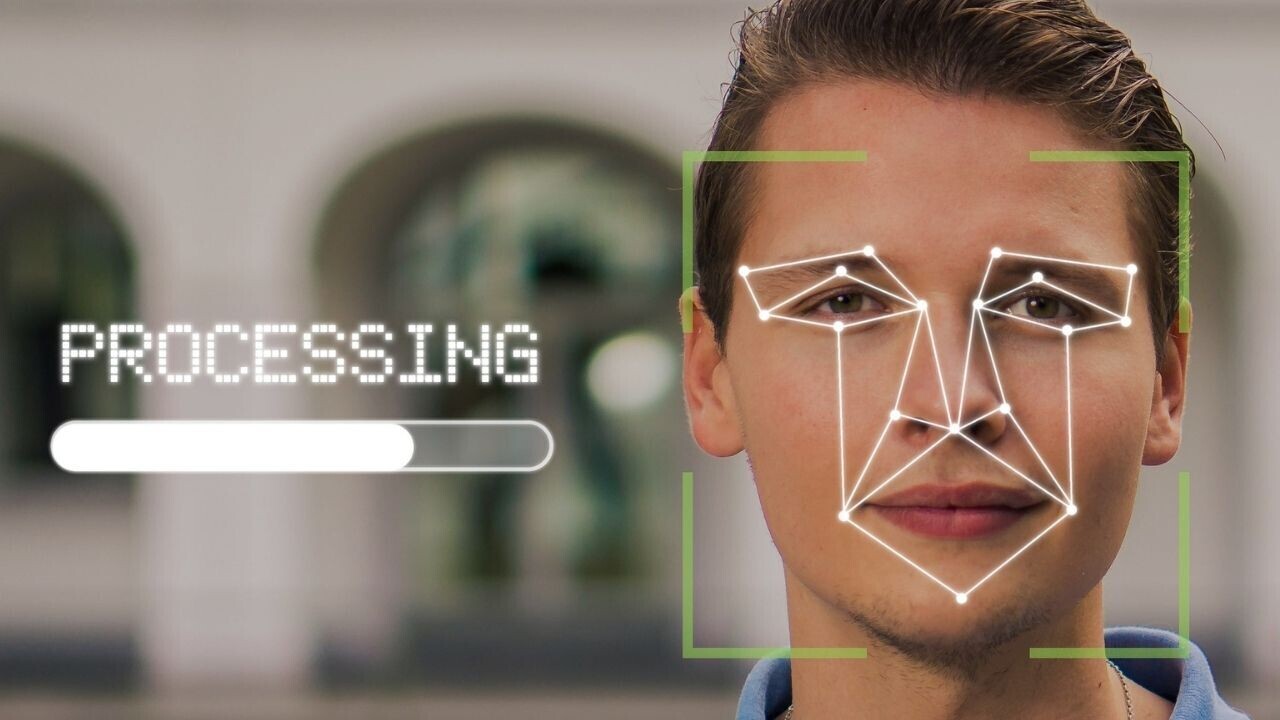

The growing use of emotion recognition AI is causing alarm among ethicists. They warn that the tech is prone to racial biases, doesn’t account for cultural differences, and is used for mass surveillance. Some argue that AI isn’t even capable of accurately detecting emotions.

A new study published in Nature Communications has shed further light on these shortcomings.

The researchers analyzed photos of actors to examine whether facial movements reliably express emotional states.

They found that people use different facial movements to communicate similar emotions. One individual might frown when angry, for example, while another might widen their eyes or even laugh.

The research also showed that people use similar gestures to convey different emotions, such as scowling to express both concentration and anger.

Study co-author Lisa Feldman Barrett, a neuroscientist at Northeastern University, said the findings challenge common claims around emotion AI:

Certain companies claim they have algorithms that can detect anger, for example, when what really they have — under optimal circumstances — are algorithms that can probably detect scowling, which may or may not be an expression of anger. It’s important not to confuse the description of a facial configuration with inferences about its emotional meaning.

Method acting

The researchers used professional actors because they have a “functional expertise” in emotion: their success depends on them authentically portraying a character’s feelings.

The actors were photographed performing detailed, emotion-evoking scenarios. For example, “He is a motorcycle dude coming out of a biker bar just as a guy in a Porsche backs into his gleaming Harley” and “She is confronting her lover, who has rejected her, and his wife as they come out of a restaurant.”

The scenarios were evaluated in two separate studies. In the first, 839 volunteers rated the extent to which the scenario descriptions alone evoked one of 13 emotions: amusement, anger, awe, contempt, disgust, embarrassment, fear, happiness, interest, pride, sadness, shame, and surprise.

Next, the researchers used the median ratings for each scenario to sort the scenarios into the 13 emotion categories.
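To make the classification step more concrete, here is a minimal Python sketch. It assumes, as a simplification the article doesn't spell out, that each scenario is assigned to whichever of the 13 emotions received the highest median rating from volunteers. The function name `classify_scenario`, the data format, and the ratings in the example are all hypothetical and invented for illustration.

```python
from statistics import median

# The 13 emotion categories named in the study.
EMOTIONS = [
    "amusement", "anger", "awe", "contempt", "disgust", "embarrassment",
    "fear", "happiness", "interest", "pride", "sadness", "shame", "surprise",
]


def classify_scenario(ratings: dict[str, list[float]]) -> str:
    """Return the emotion whose volunteer ratings have the highest median.

    `ratings` maps each emotion to the list of ratings volunteers gave for
    how strongly one scenario evoked it (a hypothetical data format).
    """
    medians = {emotion: median(scores) for emotion, scores in ratings.items()}
    # Assumed rule: pick the category with the largest median rating.
    # On a tie, max() keeps the first category encountered.
    return max(medians, key=medians.get)


if __name__ == "__main__":
    # Invented ratings for the biker-bar scenario: low for most emotions,
    # higher for anger and surprise.
    biker = {emotion: [0, 1, 0] for emotion in EMOTIONS}
    biker["anger"] = [4, 5, 3]
    biker["surprise"] = [2, 3, 1]
    print(classify_scenario(biker))
```

With these made-up numbers the sketch prints `anger`, since anger's median rating (4) beats surprise's (2). Using the median rather than the mean keeps a few unusually high or low ratings from tipping a scenario into a different category.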

The team then used machine learning to analyze how the actors portrayed these emotions in the photos.

This revealed that the actors used different facial gestures to portray the same categories of emotions. It also showed that similar facial poses didn’t reliably express the same emotional category.

Strike a pose

The team then asked additional groups of volunteers to assess the emotional meaning of each facial pose alone.

They found that judgments of the poses viewed alone didn’t reliably match ratings of the same facial expressions viewed alongside the scenarios.

Barrett said this shows the importance of context in our assessments of facial expressions:

When it comes to expressing emotion, a face does not speak for itself.

The study illustrates the enormous variability in how we express our emotions. It also further justifies the concerns around emotion recognition AI, which is already used in recruitment, law enforcement, and education.
