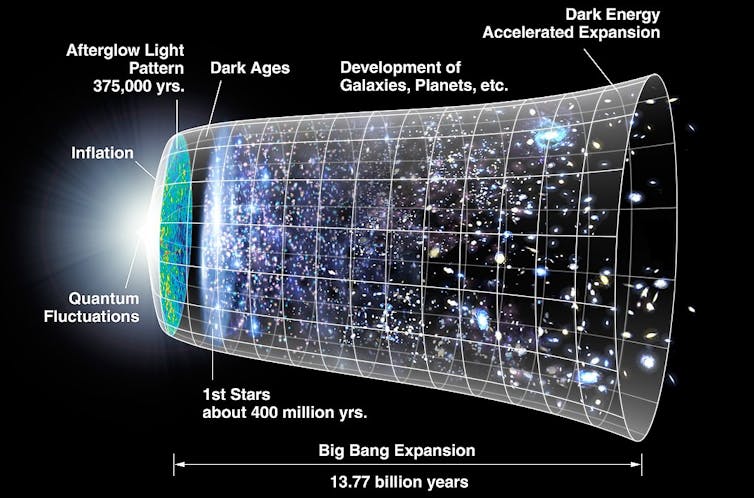

Advances in astronomical observation over the past century have allowed scientists to construct a remarkably successful model of how the cosmos works. It makes sense – the better we can measure something, the more we learn. But when it comes to the question of how fast our universe is expanding, some new cosmological measurements are making us ever more confused.

Since the 1920s we’ve known that the universe is expanding – the more distant a galaxy is, the faster it is moving away from us. In fact, in the 1990s, the rate of expansion was found to be accelerating. The current expansion rate is described by something called “Hubble’s Constant” – a fundamental cosmological parameter.

Until recently, it seemed we were converging on an accepted value for Hubble’s Constant. But a mysterious discrepancy has emerged between values measured using different techniques. Now a new study, published in Science, presents a method that may help to solve the mystery.

The problem with precision

Hubble’s Constant can be estimated by combining measurements of the distances to other galaxies with the speeds at which they are moving away from us. By the turn of the century, scientists agreed that the value was about 70 kilometers per second per megaparsec (one megaparsec is just over 3 million light-years). In other words, each extra megaparsec of distance adds about 70 kilometers per second to a galaxy’s recession speed. But in the last few years, new measurements have suggested that this might not be the final answer.
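The estimate described above rests on Hubble’s law, v = H0 × d: recession speed grows in proportion to distance, so Hubble’s Constant is the slope of a straight line through the origin. Here is a minimal sketch, assuming a simple least-squares fit through the origin. The galaxy distances and speeds are made-up illustrative numbers, not real measurements.

```python
# Minimal sketch: estimate Hubble's Constant as the slope of v = H0 * d.
# The sample data below are hypothetical, chosen only for illustration.

LIGHT_YEARS_PER_MPC = 3.2616e6  # one megaparsec is about 3.26 million light-years


def fit_hubble_constant(distances_mpc, velocities_km_s):
    """Least-squares slope of a line through the origin, in km/s/Mpc."""
    if len(distances_mpc) != len(velocities_km_s) or not distances_mpc:
        raise ValueError("need matching, non-empty distance and velocity lists")
    # Minimising sum((v - H0*d)^2) over H0 gives H0 = sum(d*v) / sum(d*d).
    numerator = sum(d * v for d, v in zip(distances_mpc, velocities_km_s))
    denominator = sum(d * d for d in distances_mpc)
    return numerator / denominator


if __name__ == "__main__":
    # Hypothetical galaxies: distances in Mpc, recession speeds in km/s.
    distances = [10.0, 25.0, 40.0, 80.0]
    velocities = [720.0, 1730.0, 2850.0, 5560.0]
    h0 = fit_hubble_constant(distances, velocities)
    print(f"H0 ~ {h0:.1f} km/s/Mpc")
    print(f"1 Mpc ~ {LIGHT_YEARS_PER_MPC:.3g} light-years")
```

Real analyses also have to correct for galaxies’ own motions and for measurement errors. This sketch only shows the core arithmetic.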

If we estimate Hubble’s Constant using observations of the local, present-day universe, we get a value of about 73 kilometers per second per megaparsec. We can also estimate it from observations of the afterglow of the Big Bang, the “cosmic microwave background”. But this “early” universe measurement gives a lower value of around 67.

Worryingly, both measurements are reported to be precise enough that a gap this large is very unlikely to be a statistical fluke, so there must be some sort of problem. Astronomers euphemistically refer to this as “tension” in the exact value of Hubble’s Constant.
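Precision is what turns a modest gap into a problem. A common rule of thumb divides the difference between two independent measurements by their combined uncertainty, giving the gap in “sigma”. Here is a minimal sketch, assuming Gaussian, uncorrelated errors. The error bars in the example are hypothetical, because the article does not quote them.

```python
import math


def tension_sigma(value_a, sigma_a, value_b, sigma_b):
    """Gap between two independent measurements in units of their combined
    standard error (assumes Gaussian, uncorrelated uncertainties)."""
    combined = math.hypot(sigma_a, sigma_b)  # sqrt(sigma_a**2 + sigma_b**2)
    return abs(value_a - value_b) / combined


if __name__ == "__main__":
    local, early = 73.0, 67.0  # km/s/Mpc, the rounded values quoted in the text
    # Hypothetical 1-sigma uncertainties, chosen only to illustrate the idea.
    for sig_local, sig_early in [(3.0, 3.0), (1.0, 0.5)]:
        t = tension_sigma(local, sig_local, early, sig_early)
        print(f"errors +/-{sig_local}, +/-{sig_early}: {t:.1f} sigma apart")
```

With loose error bars the two values are compatible. With tight ones, the same gap of 6 km/s/Mpc becomes hard to explain, which is the situation the article describes.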

If you’re the worrying kind, then the tension points to some unknown systematic problem with one or both of the measurements. If you’re the excitable kind, then the discrepancy might be a clue about some new physics that we didn’t know about before. Although it has been very successful so far, perhaps our cosmological model is wrong, or at least incomplete.

Distant versus local

To get to the bottom of the discrepancy, we need a better linking of the distance scale between the very local and very distant universe.

The new paper presents a neat approach to this challenge. Many estimates of the expansion rate rely on the accurate measurement of distances to objects. But this is really hard to do: we can’t just run a tape measure across the universe.

One common approach is to use “Type Ia” supernovas (exploding stars). These are incredibly bright, so we can see them at great distances. Because we know how luminous they should be, we can calculate their distance by comparing their apparent brightness with their known luminosity: the fainter one looks, the farther away it is.
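Comparing apparent brightness with known luminosity is the inverse-square law: the light spreads over a sphere, so the flux we receive is L / (4πd²). Astronomers usually express the same relation in magnitudes, as the distance modulus m − M = 5 log10(d / 10 parsecs). Here is a minimal sketch of both forms. It assumes a peak absolute magnitude of roughly −19.3 for a Type Ia supernova, a commonly quoted approximate value used here purely as an assumption. The observed magnitude is hypothetical.

```python
import math


def distance_from_flux(luminosity_w, flux_w_per_m2):
    """Inverse-square law: flux = L / (4 pi d^2), so d = sqrt(L / (4 pi flux)). Returns metres."""
    return math.sqrt(luminosity_w / (4.0 * math.pi * flux_w_per_m2))


def distance_from_magnitudes_mpc(apparent_mag, absolute_mag):
    """Same idea in astronomers' magnitudes: m - M = 5 log10(d / 10 pc). Returns megaparsecs."""
    distance_pc = 10.0 ** ((apparent_mag - absolute_mag + 5.0) / 5.0)
    return distance_pc / 1e6


if __name__ == "__main__":
    # Assumed, approximate peak absolute magnitude of a Type Ia supernova.
    M_TYPE_IA = -19.3
    # Hypothetical observed peak apparent magnitude.
    m_observed = 16.0
    print(f"distance ~ {distance_from_magnitudes_mpc(m_observed, M_TYPE_IA):.0f} Mpc")
```

If a supernova is four times fainter, it is twice as far away. That is why a reliable luminosity matters so much.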

To derive Hubble’s Constant from the supernova observations, they must be calibrated against an absolute distance scale, because their exact luminosity is not known precisely enough on its own. Currently, the “anchors” of this scale are very nearby (and so very accurately measured) distance markers, such as Cepheid variable stars, which brighten and dim with a period that is tied to their luminosity.

If we had absolute distance anchors further out in the cosmos, then the supernova distances could be calibrated more accurately over a wider cosmic range.
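Calibration here means using supernovas in galaxies whose distances are already known (the anchors) to pin down the supernovas’ true peak brightness, then applying that value to more distant ones. Here is a minimal sketch in magnitudes. It assumes a single shared absolute magnitude for all Type Ia supernovas and no other corrections. Real analyses also correct for light-curve shape, color and dust, which this ignores. The anchor values are hypothetical.

```python
import math


def distance_modulus(distance_mpc):
    """mu = m - M = 5 log10(d / 10 pc), with d given in megaparsecs."""
    return 5.0 * math.log10(distance_mpc * 1e6 / 10.0)


def calibrate_absolute_magnitude(anchors):
    """Estimate the shared peak absolute magnitude M from anchor supernovas.

    `anchors` is a list of (apparent_mag, known_distance_mpc) pairs for
    supernovas in galaxies whose distance is known independently (e.g. from Cepheids).
    """
    estimates = [m - distance_modulus(d) for m, d in anchors]
    return sum(estimates) / len(estimates)


def distance_mpc(apparent_mag, absolute_mag):
    """Invert the distance modulus for a distant supernova once M is calibrated."""
    return 10.0 ** ((apparent_mag - absolute_mag + 5.0) / 5.0) / 1e6


if __name__ == "__main__":
    # Hypothetical anchor supernovas: (peak apparent magnitude, known distance in Mpc).
    anchors = [(12.2, 20.0), (13.1, 30.0)]
    M = calibrate_absolute_magnitude(anchors)
    print(f"calibrated M ~ {M:.2f}")
    # A hypothetical faint supernova far beyond the anchors.
    print(f"distance ~ {distance_mpc(19.0, M):.0f} Mpc")
```

Every distance depends on the calibrated M. An offset of 0.1 magnitudes in M rescales all of them by a factor of 10^(0.1/5) ≈ 1.047, about 5%, and Hubble’s Constant shifts by the same proportion in the opposite direction.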

Far-flung anchors

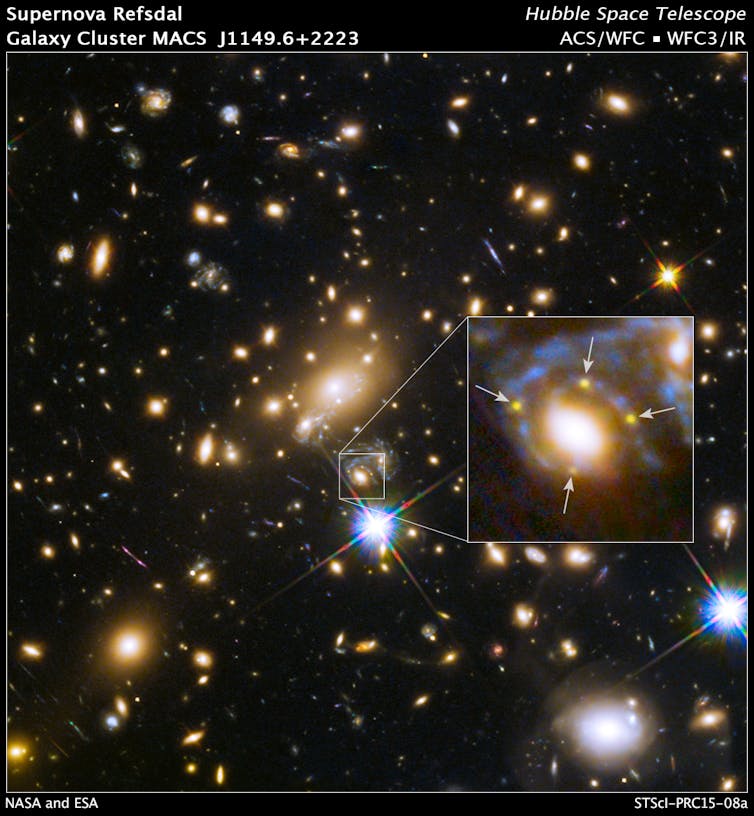

The new work has dropped a couple of new anchors by exploiting a phenomenon called gravitational lensing. By looking at how light from a background source (like a galaxy) bends due to the gravity of a massive object in front of it, we can work out the properties of that foreground object.

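The foreground galaxy can split the background source into several images. The light forming each image follows a different path through the galaxy’s gravitational field, so it arrives at slightly different times. If the background source has a fairly constant brightness, we don’t notice that time delay. But when the background source itself varies in brightness, we can measure the difference in light arrival time between the images. This work does exactly that.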
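Measuring the delay amounts to sliding one image’s light curve along the time axis until its ups and downs line up with the other’s. Here is a minimal sketch on noiseless mock data. It assumes both images are sampled on the same daily grid and differ only by a time shift. Real lens monitoring must also handle gaps, noise, different magnifications and microlensing, which this ignores. The source light curve and the 12-day delay are hypothetical.

```python
import math


def estimate_delay(times, flux_a, flux_b, max_lag_steps):
    """Return the lag (in the units of `times`) that best aligns flux_b with flux_a.

    Assumes both light curves share the same evenly spaced time grid. For each
    trial lag k, compare flux_a[i] with flux_b[i + k] and keep the lag with the
    smallest mean squared difference. A positive result means image B's
    variations arrive later than image A's.
    """
    step = times[1] - times[0]
    n = len(flux_a)
    best_lag, best_cost = 0, math.inf
    for k in range(-max_lag_steps, max_lag_steps + 1):
        pairs = [(flux_a[i], flux_b[i + k]) for i in range(n) if 0 <= i + k < n]
        cost = sum((a - b) ** 2 for a, b in pairs) / len(pairs)
        if cost < best_cost:
            best_lag, best_cost = k, cost
    return best_lag * step


def intrinsic_brightness(t):
    # Hypothetical smoothly varying background source, arbitrary units.
    return 1.0 + 0.3 * math.sin(2 * math.pi * t / 60.0) + 0.2 * math.sin(2 * math.pi * t / 23.0)


TRUE_DELAY_DAYS = 12.0  # hypothetical delay used to build the mock data
times = [float(day) for day in range(200)]
flux_a = [intrinsic_brightness(t) for t in times]
flux_b = [intrinsic_brightness(t - TRUE_DELAY_DAYS) for t in times]  # image B arrives later

print(estimate_delay(times, flux_a, flux_b, max_lag_steps=40))
```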

The time delay between the lensed images depends on the mass of the foreground galaxy deflecting the light and on its physical size. So when we combine the measured time delay with the mass of the deflecting galaxy (which can be estimated separately), we get an accurate measure of its physical size.

Like a penny held at arm’s length, we can then compare the apparent size of the galaxy to its physical size to determine the distance, because an object of fixed size appears smaller the farther away it is. The authors present absolute distances of 810 and 1230 megaparsecs for the two deflecting galaxies, with margins of error of about 10-20%.
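For the simplest textbook lens model, a “singular isothermal sphere”, the chain of reasoning above collapses into one formula. The galaxy’s mass enters through its stellar velocity dispersion σ, and the distance to the lens is D_d = c³ Δt / (4π σ² (1 + z_d) (θ_A − θ_B)). Here Δt is the time delay, z_d the lens redshift, and θ_A, θ_B the two images’ angular distances from the lens centre. This is a sketch under that idealized model, not the authors’ actual lens modeling, and the input numbers are hypothetical.

```python
import math

C_KM_S = 299_792.458            # speed of light, km/s
KM_PER_MPC = 3.0857e19          # kilometres in one megaparsec
ARCSEC_IN_RAD = math.pi / (180.0 * 3600.0)
SECONDS_PER_DAY = 86_400.0


def sis_lens_distance_mpc(delay_days, sigma_km_s, z_lens, theta_a_arcsec, theta_b_arcsec):
    """Angular diameter distance to a singular-isothermal-sphere lens, in Mpc.

    D_d = c^3 * dt / (4 * pi * sigma^2 * (1 + z_d) * (theta_A - theta_B)),
    where theta_A and theta_B are the images' angular distances from the lens centre.
    """
    dt_s = delay_days * SECONDS_PER_DAY
    dtheta_rad = abs(theta_a_arcsec - theta_b_arcsec) * ARCSEC_IN_RAD
    d_km = C_KM_S ** 3 * dt_s / (4.0 * math.pi * sigma_km_s ** 2 * (1.0 + z_lens) * dtheta_rad)
    return d_km / KM_PER_MPC


if __name__ == "__main__":
    # Hypothetical lens: 100-day delay, sigma = 250 km/s, z = 0.3, images at 2.5" and 0.5".
    d = sis_lens_distance_mpc(100.0, 250.0, 0.3, 2.5, 0.5)
    print(f"lens distance ~ {d:.0f} Mpc")
```

The distance grows with the measured delay and shrinks as σ, the mass proxy, grows. In this model, that is the sense in which the time delay and the mass together fix the lens’s physical scale, and hence its distance.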

Treating these measurements as absolute distance anchors, the authors go on to reanalyze the distance calibration of 740 supernovas from a well-established data set used to determine Hubble’s Constant. The answer they got was just over 82 kilometers per second per megaparsec.
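Two distant anchors can fix Hubble’s Constant for the following reason. Supernovas trace the shape of the distance-redshift relation well, but that relation’s overall scale is proportional to 1/H0. Matching it to an absolute distance at a known redshift therefore pins down H0. Here is a toy sketch, assuming a flat ΛCDM shape with a matter density of 0.3 as a stand-in for the supernova-derived shape. The article does not describe the authors’ exact procedure. The anchor redshifts are hypothetical, and the code checks itself by recovering an H0 that was used to build mock anchors.

```python
import math

C_KM_S = 299_792.458  # speed of light, km/s
OMEGA_M = 0.3         # assumed matter density for the toy shape (flat universe)


def dimensionless_angular_distance(z, steps=1000):
    """H0 * D_A(z) / c for a flat Lambda-CDM universe, via Simpson's rule.

    This is the 'shape' of the distance-redshift relation with H0 divided out.
    `steps` must be even for Simpson's rule.
    """
    def inv_e(zp):
        return 1.0 / math.sqrt(OMEGA_M * (1.0 + zp) ** 3 + (1.0 - OMEGA_M))

    h = z / steps
    total = inv_e(0.0) + inv_e(z)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * inv_e(i * h)
    comoving = total * h / 3.0
    return comoving / (1.0 + z)


def h0_from_anchors(anchors):
    """Average H0 implied by (redshift, absolute_distance_mpc) anchor pairs."""
    estimates = [C_KM_S * dimensionless_angular_distance(z) / d for z, d in anchors]
    return sum(estimates) / len(estimates)


# Self-check with mock anchors built from an assumed H0 (hypothetical numbers).
true_h0 = 70.0
mock_anchors = [(z, C_KM_S * dimensionless_angular_distance(z) / true_h0) for z in (0.3, 0.6)]
print(f"recovered H0 = {h0_from_anchors(mock_anchors):.2f} km/s/Mpc")
```

The inferred H0 scales as 1/distance, so an error of 10-20% in each anchor feeds directly into a comparable error in H0.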

This is quite high compared to the numbers mentioned above. But the key point is that with only two distance anchors the uncertainty in this value is still quite large. Importantly, though, it is statistically consistent with the value measured from the local universe. The uncertainty will be reduced by hunting for, and measuring distances to, other strongly lensed and time-varying galaxies. They are rare, but upcoming projects like the Large Synoptic Survey Telescope should be capable of detecting many such systems, raising hopes of a much more precise value.
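More lenses help because independent measurements can be combined with inverse-variance weights. For N measurements of equal precision, the combined uncertainty falls as 1/√N. Here is a minimal sketch, assuming independent Gaussian errors. The 15% per-anchor error is a hypothetical value picked from the 10-20% range quoted above, and the sketch ignores systematic errors, which do not average away.

```python
import math


def inverse_variance_mean(values, sigmas):
    """Weighted mean and its 1-sigma uncertainty for independent Gaussian measurements."""
    weights = [1.0 / s ** 2 for s in sigmas]
    mean = sum(w * v for w, v in zip(weights, values)) / sum(weights)
    return mean, 1.0 / math.sqrt(sum(weights))


if __name__ == "__main__":
    per_anchor_fraction = 0.15  # hypothetical fractional error per lens
    for n in (2, 10, 40):
        # Values in units of the true distance; n equally precise anchors,
        # so the uncertainty of the weighted mean shrinks as 1/sqrt(n).
        _, err = inverse_variance_mean([1.0] * n, [per_anchor_fraction] * n)
        print(f"{n:>2} anchors -> ~{100 * err:.1f}% combined uncertainty")
```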

The result provides another piece of the puzzle. But more work is needed: it still doesn’t explain why the value derived from the cosmic microwave background is so low. So the mystery remains, but hopefully not for too long.

This article is republished from The Conversation by James Geach, Professor of Astrophysics and Royal Society University Research Fellow, University of Hertfordshire under a Creative Commons license. Read the original article.
