When you take a picture of a cat and Google’s algorithms place it in a folder called “pets,” with no direction from you, you’re seeing the benefit of image recognition AI. The same kind of technology is now helping doctors diagnose disease on a scale humans alone could never reach.

Diabetic retinopathy, a complication of diabetes, is the fastest-growing cause of preventable blindness. Each of the more than 415 million people living with diabetes risks losing their eyesight unless they have regular access to doctors.

In countries like India, there are simply too many patients for doctors to treat. India has 4,000 diabetic patients for every ophthalmologist, while the US has one ophthalmologist for every 1,500 patients.

It’s worse in other developing nations. More than 80 percent of all known diabetic retinopathy sufferers live in places with little or no access to care. These people are going blind because of poverty.

This is why companies such as Verily, one of Google’s many sister companies under Alphabet, chose diabetic retinopathy as the entry point for delivering neural network-powered medical insights at massive scale.

How it works

It’s actually a bit simpler than you might think, and it’s all about the data. Today’s deep learning networks are well suited to processing the individual pixels and regions of an image and classifying the image into one of any number of categories. For example, ImageNet, the large image dataset widely used to train and benchmark visual recognition models, has roughly 22,000 categories containing more than 14 million images.
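To make “processing pixels and classifying the image into a category” concrete, here is a minimal sketch of one forward pass through a toy convolutional classifier in plain Python. The 5x5 images, the edge-detecting kernel, the two labels and the weights are all hand-picked assumptions for illustration; a real model learns millions of such numbers from labeled images instead.

```python
import math

# Toy example: every number below is hand-picked for illustration.
# A real CNN learns its kernels and weights from labeled training images.


def conv2d(image, kernel):
    """'Valid' 2D convolution (no padding), computed as cross-correlation,
    which is what most deep learning libraries call convolution."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            acc = 0.0
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        out.append(row)
    return out


def relu(feature_map):
    # Keep positive filter responses, zero out the rest.
    return [[max(0.0, v) for v in row] for row in feature_map]


def global_max_pool(feature_map):
    # "How strongly did the pattern appear anywhere in the image?"
    return max(v for row in feature_map for v in row)


def softmax(scores):
    # Subtract the max for numerical stability; the results sum to 1.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def classify(image, kernel, weights, biases, labels):
    features = relu(conv2d(image, kernel))   # 1. detect a local pattern
    pooled = global_max_pool(features)        # 2. summarize it as one number
    scores = [w * pooled + b for w, b in zip(weights, biases)]  # 3. one score per category
    probs = softmax(scores)                   # 4. scores -> probabilities
    best = max(range(len(labels)), key=lambda k: probs[k])
    return labels[best], probs


# Hypothetical 3x3 kernel that responds to dark-to-bright vertical edges.
EDGE_KERNEL = [[-1.0, 0.0, 1.0] for _ in range(3)]
LABELS = ["no edge", "edge"]
WEIGHTS = [-1.0, 1.0]
BIASES = [1.0, -1.0]

# 5x5 grayscale "images": one with a vertical edge, one uniformly gray.
edge_image = [[0.0, 0.0, 1.0, 1.0, 1.0] for _ in range(5)]
flat_image = [[0.5] * 5 for _ in range(5)]

print(classify(edge_image, EDGE_KERNEL, WEIGHTS, BIASES, LABELS)[0])  # edge
print(classify(flat_image, EDGE_KERNEL, WEIGHTS, BIASES, LABELS)[0])  # no edge
```

A diabetic retinopathy model follows the same pattern at vastly larger scale: many stacked layers of learned filters instead of one hand-written edge detector, and disease categories instead of “edge” and “no edge.”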

AI can diagnose diabetic retinopathy in much the same way it decides whether something is a hotdog or not: by learning which visual patterns separate one category from another. In essence, that is also how physicians do it.

Doctors diagnose diabetic retinopathy by interpreting retinal scans. As with an X-ray or MRI, the physician examines the images for specific abnormal markers, such as microaneurysms and small hemorrhages. They have to watch out for unrelated artifacts such as dust or lens flare, but otherwise it’s a matter of spotting those markers.
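In practice, a screening result usually boils down to a referral decision. The sketch below assumes a model that outputs one probability for each grade of the International Clinical Diabetic Retinopathy severity scale (none, mild, moderate, severe, proliferative) and treats “moderate or worse” as referable, a definition used in several published screening studies. The 0.5 threshold and the `gradable` flag, standing in for a hypothetical upstream check that rejects photos spoiled by dust or lens flare, are assumptions for illustration, not any specific product’s logic.

```python
from dataclasses import dataclass
from typing import List, Optional

# The five grades of the International Clinical Diabetic Retinopathy severity scale.
GRADES = ["none", "mild", "moderate", "severe", "proliferative"]


@dataclass
class ScreeningResult:
    decision: str                 # "refer", "no referral", or "retake image"
    p_referable: Optional[float]  # probability of moderate-or-worse; None if ungradable


def screen(grade_probs: List[float], gradable: bool, threshold: float = 0.5) -> ScreeningResult:
    """Turn per-grade probabilities into a referral decision.

    `gradable` is a hypothetical quality check: False means the photo is too
    obscured (dust, lens flare, blur) to grade reliably. `threshold` is an
    illustrative assumption, not a clinically validated value.
    """
    if len(grade_probs) != len(GRADES):
        raise ValueError("expected one probability per grade")
    if not gradable:
        # Don't guess on a bad image; ask for a new photo instead.
        return ScreeningResult("retake image", None)
    # "Referable" here = moderate, severe, or proliferative.
    p_referable = sum(grade_probs[GRADES.index("moderate"):])
    decision = "refer" if p_referable >= threshold else "no referral"
    return ScreeningResult(decision, p_referable)


print(screen([0.70, 0.20, 0.06, 0.03, 0.01], gradable=True).decision)  # no referral
print(screen([0.05, 0.15, 0.50, 0.20, 0.10], gradable=True).decision)  # refer
print(screen([0.20] * 5, gradable=False).decision)                     # retake image
```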

The problem

More than 415 million people with diabetes need at least an annual retinal scan so that retinopathy is caught before it leads to blindness. Even at just one scan a year, that’s hundreds of millions of images. And there just aren’t enough doctors to review that much data.
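A quick back-of-the-envelope calculation shows the scale. This sketch uses only figures quoted in this article (415 million people with diabetes, one scan per person per year, and India’s ratio of 4,000 diabetic patients per ophthalmologist); the 250 working days per year is an assumption for illustration.

```python
PEOPLE_WITH_DIABETES = 415_000_000
SCANS_PER_PERSON_PER_YEAR = 1
PATIENTS_PER_OPHTHALMOLOGIST_INDIA = 4_000
WORKING_DAYS_PER_YEAR = 250  # assumption, not a figure from the article


def screening_workload(people, scans_per_year, patients_per_doctor, working_days):
    """Return (total scans per year, scans per calendar day worldwide,
    screening reads per doctor per working day)."""
    total_scans = people * scans_per_year
    scans_per_calendar_day = total_scans / 365
    scans_per_doctor_per_day = patients_per_doctor * scans_per_year / working_days
    return total_scans, scans_per_calendar_day, scans_per_doctor_per_day


total, per_day, per_doctor = screening_workload(
    PEOPLE_WITH_DIABETES,
    SCANS_PER_PERSON_PER_YEAR,
    PATIENTS_PER_OPHTHALMOLOGIST_INDIA,
    WORKING_DAYS_PER_YEAR,
)
print(f"Scans needed per year: {total:,}")                     # 415,000,000
print(f"Scans per calendar day worldwide: {per_day:,.0f}")      # 1,136,986
print(f"Reads per ophthalmologist per working day (India): {per_doctor:.0f}")  # 16
```

Under these assumptions, every ophthalmologist in India would have to grade about 16 retinal images every working day for screening alone, on top of actually treating patients.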

Over the past three years, machine learning developers have achieved several breakthroughs in image recognition AI. We’ve genuinely reached a point where, in very specific use cases, a computer’s ability to make medical diagnoses from images exceeds that of humans.

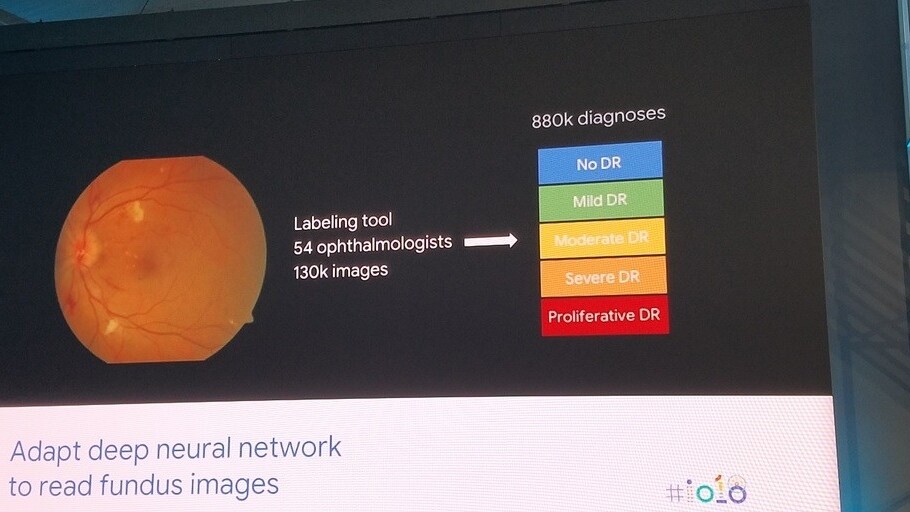

In 2016, Google’s researchers published a paper showing that their convolutional neural network (CNN), a type of deep learning system built for image data, beat ophthalmologists at accurately diagnosing the disease. This year, the same CNN went from challenging general ophthalmologists to beating retinal specialists at detecting the signs of diabetic retinopathy.
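In diagnostic studies, “accuracy” is usually broken down into sensitivity (the share of diseased eyes the grader catches) and specificity (the share of healthy eyes it correctly clears), because a single accuracy number can hide a grader that misses sick patients. The sketch below computes all three from a confusion matrix; the counts for the two hypothetical graders are invented for illustration and are not results from Google’s papers.

```python
from dataclasses import dataclass


@dataclass
class ConfusionMatrix:
    true_pos: int   # diseased eyes flagged as diseased
    false_neg: int  # diseased eyes missed
    true_neg: int   # healthy eyes correctly cleared
    false_pos: int  # healthy eyes flagged anyway

    def sensitivity(self) -> float:
        return self.true_pos / (self.true_pos + self.false_neg)

    def specificity(self) -> float:
        return self.true_neg / (self.true_neg + self.false_pos)

    def accuracy(self) -> float:
        total = self.true_pos + self.false_neg + self.true_neg + self.false_pos
        return (self.true_pos + self.true_neg) / total


# Invented counts: 100 diseased and 900 healthy eyes in both cases.
grader_a = ConfusionMatrix(true_pos=90, false_neg=10, true_neg=880, false_pos=20)
# A useless grader that calls every eye healthy.
grader_b = ConfusionMatrix(true_pos=0, false_neg=100, true_neg=900, false_pos=0)

for name, cm in [("A", grader_a), ("B", grader_b)]:
    print(f"{name}: sensitivity={cm.sensitivity():.2f} "
          f"specificity={cm.specificity():.2f} accuracy={cm.accuracy():.2f}")
# A: sensitivity=0.90 specificity=0.98 accuracy=0.97
# B: sensitivity=0.00 specificity=1.00 accuracy=0.90
```

Grader B scores 90 percent accuracy while missing every single diseased eye, which is why comparisons between algorithms and doctors look at sensitivity and specificity rather than accuracy alone.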

It’s about time

Right now, anyone on this planet at risk for type two diabetes who doesn’t have viable access to a medical practitioner able to diagnose these diseases is playing roulette with their health. It’s not just vision that’s at stake: diabetes can ravage the liver and greatly increase the risk of cardiovascular problems, among a myriad of other damaging or potentially fatal effects.

But AI can go a long way toward solving this problem. According to Lily Peng, Product Manager for the Medical Imaging team at Google Research, the answer for overwhelmed physicians is offloading the parts of their jobs that can be done by machines:

Deep learning is good for tasks you’ve done 10,000 times and on the 10,001st time you’re sick of it. This is really good for the medical field.
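One way to picture Peng’s idea of offloading repetitive work is confidence-based triage: the model settles the images it is very sure about and routes uncertain ones to a doctor. This is a hypothetical sketch of that general idea, not a description of how Google or Verily deploy their systems; the patient IDs, the probabilities and the 0.05/0.95 cutoffs are all made up.

```python
from typing import Dict, List


def triage(p_referable_by_patient: Dict[str, float],
           low: float = 0.05, high: float = 0.95) -> Dict[str, List[str]]:
    """Split patients into queues by the model's probability of referable disease.

    The cutoffs are illustrative assumptions: lowering `low` or raising `high`
    sends more cases to doctors and fewer to automatic handling.
    """
    if not 0.0 <= low < high <= 1.0:
        raise ValueError("need 0 <= low < high <= 1")
    queues: Dict[str, List[str]] = {"auto_clear": [], "auto_refer": [], "doctor_review": []}
    for patient_id, p in p_referable_by_patient.items():
        if p < low:
            queues["auto_clear"].append(patient_id)     # model is confident: healthy
        elif p > high:
            queues["auto_refer"].append(patient_id)     # model is confident: needs a specialist
        else:
            queues["doctor_review"].append(patient_id)  # uncertain (or exactly at a cutoff): a human decides
    return queues


scores = {"p1": 0.01, "p2": 0.50, "p3": 0.99, "p4": 0.03}
print(triage(scores))
# {'auto_clear': ['p1', 'p4'], 'auto_refer': ['p3'], 'doctor_review': ['p2']}
```

The cutoffs trade doctor workload against the risk of the model wrongly clearing a sick patient, so where to set them is a clinical decision rather than a purely technical one.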

AI won’t be your doctor in the future; it’s here to help your physician right now. Jessica Mega, Verily’s Chief Medical Officer, believes that machine learning can streamline the diagnostic process:

Is technology going to replace physicians or going to replace the healthcare system? The way I think about it, it just augments the work we do. If you think about the stethoscope, it was invented about 200 years ago. It doesn’t replace the physician, it just augments them.

Many of the challenges facing the healthcare industry can be solved by machine learning, but the developers working on these problems can’t do it alone. Google, Verily, IBM, Intel, Microsoft, and hundreds of other companies are racing against the clock to make medicine a proactive discipline that prevents disease, instead of the reactive one it is today.

And this will require mass adoption by the medical community, the healthcare industry, and regulatory bodies in every country.

AI isn’t just the future of medical care, it’s the present. Lives are at stake, and every second matters to someone who’s facing a life without eyesight because they don’t have the same access to a medical professional that someone in another geographic location does.

It’s time to rethink the way we approach caring for ourselves and each other. Machine learning offers doctors a way to stop being data analysts and become the patient care providers the field so desperately needs.

