OpenAI has unveiled a neural network known as DALL-E that converts text into striking images — like these pictures of a baby panda in a tutu wielding a lightsaber:

OpenAI said in a blogpost that the system is a 12 billion parameter version of the landmark GPT-3 language model:

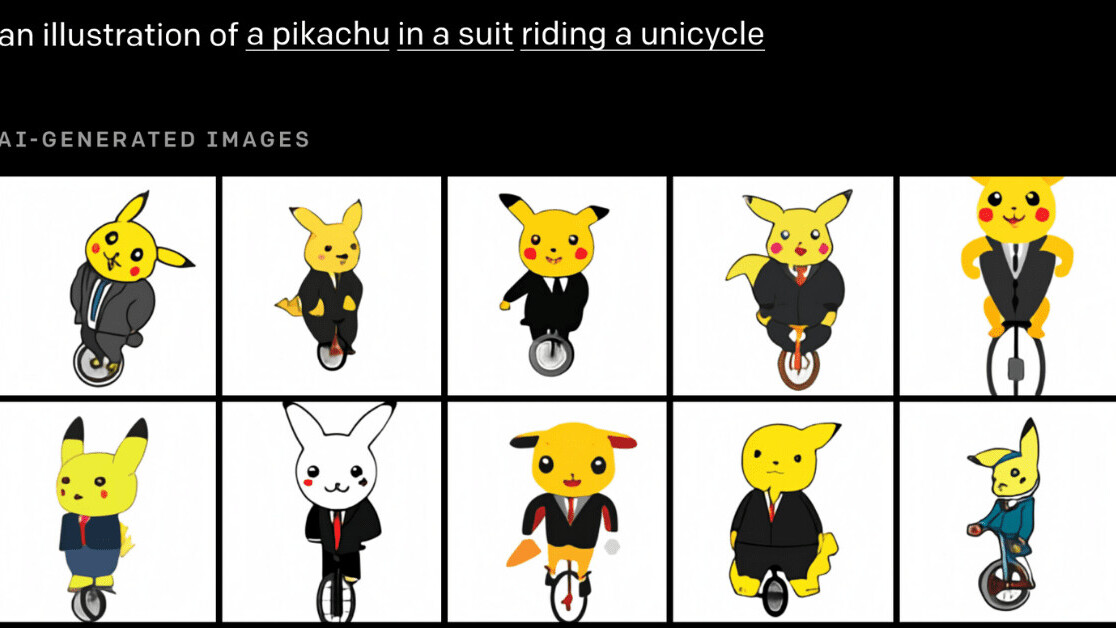

“We’ve found that it has a diverse set of capabilities, including creating anthropomorphized versions of animals and objects, combining unrelated concepts in plausible ways, rendering text, and applying transformations to existing images.”

DALL-E — a portmanteau of the names of surrealist artist Salvador Dalí and Pixar’s robot WALL-E — was trained on a dataset of text-image pairs extracted from the internet.

This allows it to create entirely new pictures by exploring the structure of a prompt — including fantastical objects combining unrelated ideas that it was never fed in training.

It can produce some seriously impressive images of landmarks, locations, hybrid animals, and designs from different decades. But OpenAI admits that not all the results are successful.

The company said the system sometimes fails to draw some of the specified items and confuses the associations between objects and their specified attributes:

“Generally, the longer the string that DALL-E is prompted to write, the lower the success rate. We find that the success rate improves when parts of the caption are repeated. Additionally, the success rate sometimes improves as the sampling temperature for the image is decreased, although the samples become simpler and less realistic.”
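For readers unfamiliar with the term, "sampling temperature" is a setting that controls how adventurous a generative model is when it picks each next piece of its output. OpenAI's post does not describe DALL-E's sampler in code, so the snippet below is only a generic sketch of temperature-scaled sampling; the function names and the example scores are made up for illustration.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Convert raw scores into probabilities, scaled by a temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_index(logits, temperature, rng=random):
    """Draw one candidate index according to the temperature-scaled probabilities."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(range(len(logits)), weights=probs, k=1)[0]


if __name__ == "__main__":
    # Hypothetical scores for four candidate outputs (not real DALL-E values).
    example_logits = [2.0, 1.0, 0.5, 0.1]
    for t in (1.0, 0.5, 0.1):
        probs = softmax_with_temperature(example_logits, t)
        print(t, [round(p, 3) for p in probs])
```

Lowering the temperature concentrates probability on the highest-scoring candidate, which is one way to read OpenAI's observation: safer choices can raise the success rate, but the output becomes less varied.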

DALL-E also often reflects superficial stereotypes when answering queries about geographical facts, such as flags, cuisines, and local wildlife. This shortcoming is particularly significant in light of growing concerns about the biases of large language models.

OpenAI said it plans to analyze the societal impacts of models such as DALL-E. But the company believes the system shows “that manipulating visual concepts through language is now within reach.”

You can try out a controlled demo of DALL-E for yourself at the OpenAI website.
