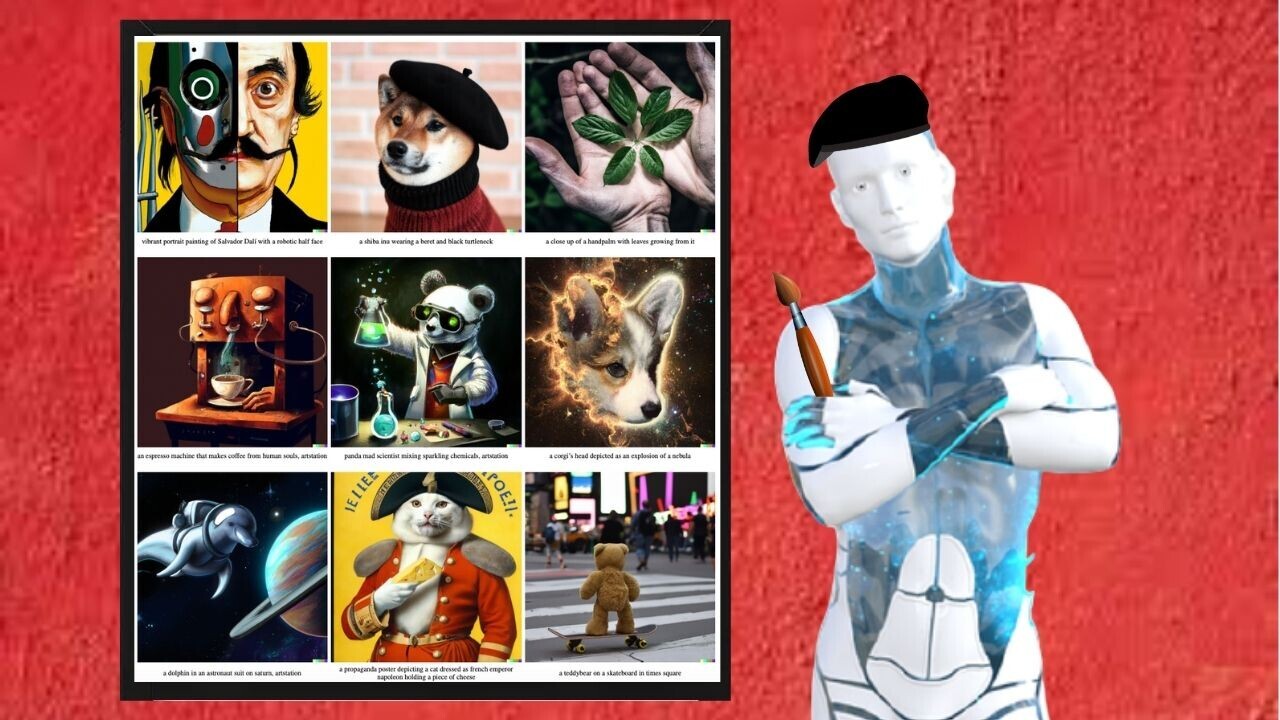

OpenAI has unveiled a new AI tool that turns text into images — and the results are stunning.

Named DALL-E 2, the system is the successor to a model unveiled last year. While its predecessor generated some impressive outputs, the new version is a major upgrade.

DALL-E 2 adds better text comprehension, faster image generation, and four times the resolution.

“When approaching DALL-E 2 we focused on improving the image resolution quality and improving latency, rather than building a bigger system,” OpenAI researcher Aditya Ramesh told TNW.

Animal helicopter chimeras generated with DALL·E 2: pic.twitter.com/5b8a9iq3k9

— Aditya Ramesh (@model_mechanic) April 7, 2022

The new tool also introduces two extra capabilities: reinterpretations of existing images and an editing feature called inpainting.

Inpainting edits an existing image according to a natural language caption.

It can add and remove components, while integrating the expected changes to shadows, reflections, and textures.

DALL·E 2 was trained on pairs of images and their corresponding captions, which taught the model about the relationships between pictures and words.

New images are generated through a process called diffusion.

This begins with a pattern of random dots. The system then gradually transforms the pattern into a picture when it recognizes specific aspects of that image.
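For readers who want to see the idea in miniature, here is a toy sketch of that loop in Python. It is not OpenAI's code and it does not reflect how DALL-E 2 is actually built: the 4x4 `TARGET` picture, the `toy_denoiser` function, and the blending schedule are all assumptions made up for illustration. A real diffusion model replaces `toy_denoiser` with a trained neural network that is guided by the caption.

```python
import random

# Hypothetical 4x4 "picture" that the toy denoiser steers toward.
# A real model has no fixed target; it learns what to predict from
# pairs of images and captions.
TARGET = [
    [0.0, 1.0, 1.0, 0.0],
    [1.0, 0.0, 0.0, 1.0],
    [1.0, 0.0, 0.0, 1.0],
    [0.0, 1.0, 1.0, 0.0],
]


def toy_denoiser(image):
    """Stand-in for a trained network: returns its guess of the clean image."""
    return TARGET


def generate(steps=50, seed=0):
    """Start from random dots and refine them step by step into a picture."""
    rng = random.Random(seed)
    # Step 0: a pattern of random dots (pure noise).
    image = [[rng.gauss(0.0, 1.0) for _ in row] for row in TARGET]
    for step in range(steps):
        guess = toy_denoiser(image)
        # Move part of the way toward the guess. The fraction grows each
        # step and reaches 1.0 on the final step.
        blend = 1.0 / (steps - step)
        image = [
            [pixel + blend * (g - pixel) for pixel, g in zip(img_row, guess_row)]
            for img_row, guess_row in zip(image, guess)
        ]
    return image


def error(image):
    """Largest per-pixel difference between an image and TARGET."""
    return max(
        abs(p - t)
        for row, target_row in zip(image, TARGET)
        for p, t in zip(row, target_row)
    )
```

Calling `generate()` returns a grid that has converged on the target pattern, while stopping the loop early would leave a noisier, half-formed version of it.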

Some of DALL-E 2’s creations look almost too good to be true. Yet the researchers say the system tends to generate visually coherent images for most captions that people try.

The above pictures of an astronaut, for example, were curated from a set of nine produced by the model. Prafulla Dhariwal, a research scientist at OpenAI, said the results are generally consistent:

“Sometimes, it can be helpful to iterate with the model in a feedback loop by modifying the prompt based on its interpretation of the previous one or by trying a different style like ‘an oil painting,’ ‘digital art,’ ‘a photo,’ ‘an emoji,’ etcetera. This can be helpful for achieving a desired style or aesthetic.”

DALL-E 2’s potential uses are vast.

Graphic designers, app developers, media outlets, architects, commercial illustrators, and product designers could all use the tool for inspiration, new creations, and editing.

Commercial artists may be nervous about their future employment prospects. Ramesh acknowledges that many jobs could change:

“We have seen AI be a good tool for people in the creative space. For example, as photo editing software has become more powerful and accessible it has allowed more people to enter the photography field. In recent years, we’ve also seen artists use AI to create new kinds of art.

“It’s hard to predict the future, but we do know AI will have an impact on jobs much like personal computers did. The nature of many jobs will change, jobs that never existed before will be created, and others may be eliminated.”

Created with DALL·E 2 by @OpenAI

Prompt:

"Mona Lisa is drinking wine with da Vinci."// Even if we don't see Maestro, the composition is perfect. Note the horizontal level of liquid in the glass.

Made with #DALLE // #DALLEmerz pic.twitter.com/wk8Kf6DKcd

— Merzmensch Kosmopol (@Merzmensch) April 6, 2022

The system hasn’t yet been released to the public. OpenAI CEO Sam Altman hopes to launch the product this summer, but the researchers first want to investigate the risks.

They plan to integrate safeguards that prevent the system from generating deceptive and otherwise harmful content.

In addition, DALL·E 2 inherits various biases from its training data — and its outputs sometimes reinforce societal stereotypes.

The team has already removed explicit content from the training data and banned violent, hateful, and adult content in their content policy.

If filters identify images and text prompts that break the rules, the system won’t generate the outputs. Automated and human monitoring systems have also been implemented as safeguards against misuse.

Altman believes DALL-E’s mechanism could change how we interact with machines.

“This is another example of what I think is going to be a new computer interface trend: you say what you want in natural language or with contextual clues, and the computer does it,” he said in a blog post.

DALL-E may also boost our understanding of how AI sees the world. OpenAI hopes this helps them create systems that benefit humanity — and aren’t manipulated to foster hatred and deception.
