Two separate studies, one by UK-based artificial intelligence lab DeepMind and the other by researchers in Germany and Greece, highlight the fascinating relationship between AI and neuroscience.

As most scientists will tell you, we are still decades away from building artificial general intelligence, machines that can solve problems as efficiently as humans. On the path to creating general AI, the human brain, arguably the most complex creation of nature, is the best guide we have.

Advances in neuroscience, the study of nervous systems, provide interesting insights into how the brain works, and those insights are key to developing better AI systems. Reciprocally, better AI systems can help drive neuroscience forward and unlock more of the brain’s secrets.

For instance, convolutional neural networks (CNNs), one of the key contributors to recent advances in artificial intelligence, are largely inspired by neuroscience research on the visual cortex. Conversely, neuroscientists use AI algorithms to analyze millions of signals from the brain and find patterns that would otherwise have gone unnoticed. The two fields are closely related, and their synergies produce very interesting results.

Recent discoveries in neuroscience show what we’re doing right in AI, and what we’ve got wrong.

DeepMind’s AI research shows connections between dopamine and reinforcement learning

A recent study by researchers at DeepMind suggests that AI research (at least part of it) is headed in the right direction.

Thanks to neuroscience, we know that one of the basic ways humans and animals learn is through rewards and punishments. Positive outcomes encourage us to repeat certain tasks (playing sports, studying for exams, etc.), while negative outcomes deter us from repeating mistakes (touching a hot stove).

The reward and punishment mechanism is best known from the experiments of Russian physiologist Ivan Pavlov, who trained dogs to expect food whenever they heard a bell. We also know that dopamine, a neurotransmitter produced in the midbrain, plays a major role in regulating the brain’s reward functions.

Reinforcement learning, one of the hottest areas of artificial intelligence research, is loosely modeled on the brain’s reward/punishment mechanism. In RL, an AI agent explores a problem space and tries different actions. For each action it performs, the agent receives a numerical reward or penalty. Through massive trial and error and by examining the outcomes of its actions, the agent develops a mathematical model optimized to maximize rewards and avoid penalties. (In reality, it’s a bit more complicated and involves balancing exploration and exploitation, among other challenges.)
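To make the trial-and-error loop concrete, here is a minimal Python sketch of the simplest RL setting: a multi-armed bandit, where the agent repeatedly picks one of several actions and receives a noisy reward. The function name, the reward values, the Gaussian noise, and the epsilon-greedy strategy are illustrative assumptions; agents that deal with states and long-term consequences use more elaborate methods such as Q-learning or policy gradients.

```python
import random


def run_bandit(true_rewards, steps=5000, epsilon=0.1, seed=0):
    """Epsilon-greedy agent on a multi-armed bandit (illustrative sketch).

    true_rewards: hypothetical average payoff of each action; negative
    values act as penalties.
    """
    rng = random.Random(seed)
    n = len(true_rewards)
    estimates = [0.0] * n  # agent's current guess of each action's value
    counts = [0] * n       # how often each action was tried
    total = 0.0
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(n)  # explore: try a random action
        else:
            # exploit: pick the action with the best estimate so far
            action = max(range(n), key=lambda a: estimates[a])
        # the environment returns a noisy reward (or penalty if negative)
        reward = true_rewards[action] + rng.gauss(0.0, 1.0)
        counts[action] += 1
        # incremental average: nudge the estimate toward the observed reward
        estimates[action] += (reward - estimates[action]) / counts[action]
        total += reward
    return estimates, counts, total


estimates, counts, total = run_bandit([-1.0, 0.5, 2.0])
print(counts)
```

With probability `epsilon` the agent explores a random action; otherwise it exploits the action it currently rates highest. Over many steps it should settle on the most rewarding action while still occasionally checking the others.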

More recently, AI researchers have been focusing on distributional reinforcement learning to create better models. Instead of predicting a single average reward, distributional RL uses multiple predictors to estimate the full range of possible rewards, spanning a spectrum from optimistic to pessimistic. Distributional reinforcement learning has been pivotal in creating AI agents that are more resilient to changes in their environments.
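One simple way to picture the optimistic/pessimistic spread is to train several reward predictors on the same experience, each scaling positive and negative prediction errors differently. The Python sketch below is a toy under that assumption (with these asymmetric updates, each predictor drifts toward a different expectile of the reward distribution); the function name, the reward list, and the `taus` values are hypothetical, and this is not DeepMind’s implementation.

```python
def train_predictors(rewards, taus, lr=0.01, epochs=4000):
    """Train one value predictor per asymmetry level tau (0 < tau < 1).

    tau > 0.5 -> optimistic (weights good surprises more)
    tau < 0.5 -> pessimistic (weights bad surprises more)
    """
    values = [0.0] * len(taus)
    for _ in range(epochs):
        for r in rewards:
            for i, tau in enumerate(taus):
                delta = r - values[i]  # reward prediction error
                # scale positive errors by tau and negative errors by 1 - tau
                scale = tau if delta > 0 else 1.0 - tau
                values[i] += lr * scale * delta
    return values


# hypothetical skewed reward distribution: mostly small, occasionally large
rewards = [1.0, 2.0, 3.0, 4.0, 10.0]
values = train_predictors(rewards, taus=[0.1, 0.5, 0.9])
print([round(v, 2) for v in values])
```

On these rewards, the pessimistic predictor settles near the low end, the neutral one near the mean of 4.0, and the optimistic one well above the mean, so together they describe the shape of the distribution rather than just its average.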

The new research, a joint effort by Harvard University and DeepMind published in Nature last week, found properties in the brains of mice that closely resemble those of distributional reinforcement learning. The researchers measured the firing rates of dopamine neurons to examine how reward predictions varied from one biological neuron to another.

Interestingly, the same optimism and pessimism mechanism that AI scientists had programmed into distributional reinforcement learning models was found in the nervous system of mice. “In summary, we found that dopamine neurons in the brain were each tuned to different levels of pessimism or optimism,” DeepMind’s researchers wrote in a blog post published on the AI lab’s website. “In artificial reinforcement learning systems, this diverse tuning creates a richer training signal that greatly speeds learning in neural networks, and we speculate that the brain might use it for the same reason.”

What makes this finding special is that while AI research usually takes inspiration from neuroscience discoveries, in this case neuroscience research has validated an AI discovery. “It gives us increased confidence that AI research is on the right track since this algorithm is already being used in the most intelligent entity we’re aware of: the brain,” the researchers write.

The finding could also lay the groundwork for further research in neuroscience, which would, in turn, benefit the field of AI.

Neurons are not as dumb as we think

While DeepMind’s new findings confirmed the work done in reinforcement learning research, another study by scientists in Berlin, published in Science in early January, suggests that some of our fundamental assumptions about the brain are quite wrong.

The general belief about the structure of the brain is that neurons, the basic components of the nervous system, are simple integrators that calculate the weighted sum of their inputs. Artificial neural networks, a popular type of machine learning algorithm, were designed based on this belief.

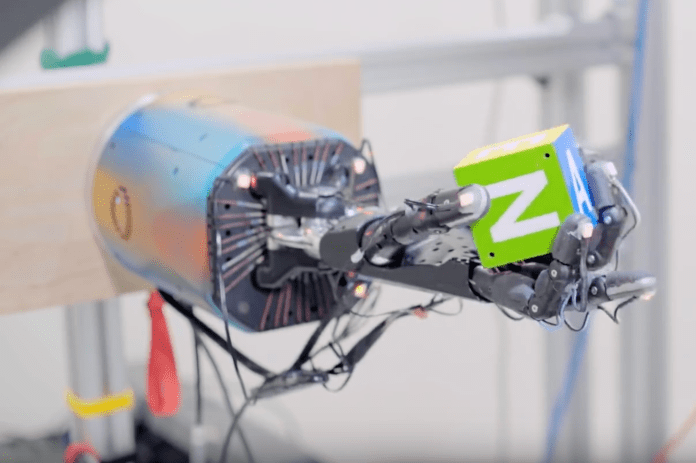

On its own, an artificial neuron performs a very simple operation. It takes several inputs, multiplies them by weights learned during training, sums them, and runs the result through an activation function. But when you connect thousands, millions (or even billions) of artificial neurons in multiple layers, you obtain a very flexible mathematical function that can solve complex problems such as detecting objects in images or transcribing speech.
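The computation described above fits in a few lines of Python. This is a minimal sketch: the bias term and the sigmoid activation are common choices assumed here, and the weights in the usage lines are arbitrary placeholders rather than values learned by training.

```python
import math


def sigmoid(x):
    """A common activation function that squashes any number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def neuron(inputs, weights, bias, activation=sigmoid):
    """One artificial neuron: weighted sum of inputs plus bias, then activation."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return activation(total)


def dense_layer(inputs, weight_rows, biases):
    """A layer is many neurons reading the same inputs, one weight row each."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]


# Usage: stack two layers; the weights below are arbitrary placeholders
x = [0.5, -1.0, 2.0]
hidden = dense_layer(x, [[0.2, -0.4, 0.1], [0.7, 0.3, -0.5]], [0.0, 0.1])
output = neuron(hidden, [1.5, -2.0], 0.3)
print(hidden, output)
```

Feeding one layer’s outputs into the next, as in the usage lines, is all that “multiple layers” means; deep networks repeat this pattern at much larger scale.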

Multi-layered networks of artificial neurons, generally called deep neural networks, have been the main driving force behind the deep learning revolution of the past decade.

But the general perception of biological neurons as “dumb” calculators of basic math is overly simplistic. The recent findings of the German researchers, later corroborated by neuroscientists at a lab in Greece, showed that single neurons can perform XOR operations, something AI pioneers Marvin Minsky and Seymour Papert showed a single artificial neuron (the perceptron) cannot do.
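The XOR limitation is easy to check on a toy example. A classic threshold neuron fires when a weighted sum crosses a threshold, and no choice of weights lets it output 1 for inputs (0, 1) and (1, 0) but 0 for (1, 1); the grid search below merely illustrates that general result. The `band_neuron` is a hypothetical unit that fires for a middle range of input and falls silent when the input is too strong. It is not a model of real dendrites, only a way to show how a non-monotonic response lets a single unit compute XOR.

```python
from itertools import product

XOR = {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 0}
GRID = [i / 2 for i in range(-6, 7)]  # candidate weights/bias from -3.0 to 3.0


def threshold_neuron(x, w1, w2, b):
    """Classic perceptron: output rises monotonically with the weighted sum."""
    return 1 if w1 * x[0] + w2 * x[1] + b > 0 else 0


def band_neuron(x, w1=1.0, w2=1.0, low=0.5, high=1.5):
    """Hypothetical non-monotonic unit: fires only for a middle band of input."""
    s = w1 * x[0] + w2 * x[1]
    return 1 if low <= s < high else 0


def computes_xor(f):
    return all(f(x) == y for x, y in XOR.items())


# try every (w1, w2, b) on the grid for the threshold neuron
perceptron_solves_xor = any(
    computes_xor(lambda x, p=p: threshold_neuron(x, *p))
    for p in product(GRID, repeat=3)
)
band_solves_xor = computes_xor(band_neuron)
print(perceptron_solves_xor, band_solves_xor)  # prints: False True
```

The threshold neuron can compute OR or AND, but because its output only grows as the input grows, it cannot switch off again for (1, 1). The band unit can, which is the kind of extra computational power the Berlin findings attribute to some single neurons.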

While not all neurons have this capability, the implications of the finding are significant. For instance, it suggests that a single neuron might contain a deep network within itself. Konrad Kording, a computational neuroscientist at the University of Pennsylvania who was not involved in the research, told Quanta Magazine that the finding could mean “a single neuron may be able to compute truly complex functions. For example, it might, by itself, be able to recognize an object.”

What does this mean for artificial intelligence research? At the very least, it means we need to rethink how we model neurons. It might spur research into new artificial neuron structures and networks with different types of neurons. It might even help free us from the trap of having to build extremely large neural networks and datasets to solve very simple problems.

“The whole game—to come up with how you get smart cognition out of dumb neurons—might be wrong,” cognitive scientist Gary Marcus, who also spoke to Quanta, said in this regard.

This story is republished from TechTalks, the blog that explores how technology is solving problems… and creating new ones.