For better or worse, it’s getting easier than ever to doctor video footage, and the latest development in this field is as scary as it is impressive. A new algorithm developed by researchers from Stanford University, Max Planck Institute for Informatics, Princeton University, and Adobe makes it possible to alter human speech in a video, just by changing the text in its transcript.

The researchers say the method alters the video while preserving the speaker’s characteristics. To accomplish this, the algorithm first reads the phonemes and the pronunciation of letters and words from the original video, then builds a model of the speaker’s head so it can accurately replicate the speaker’s voice and movements.

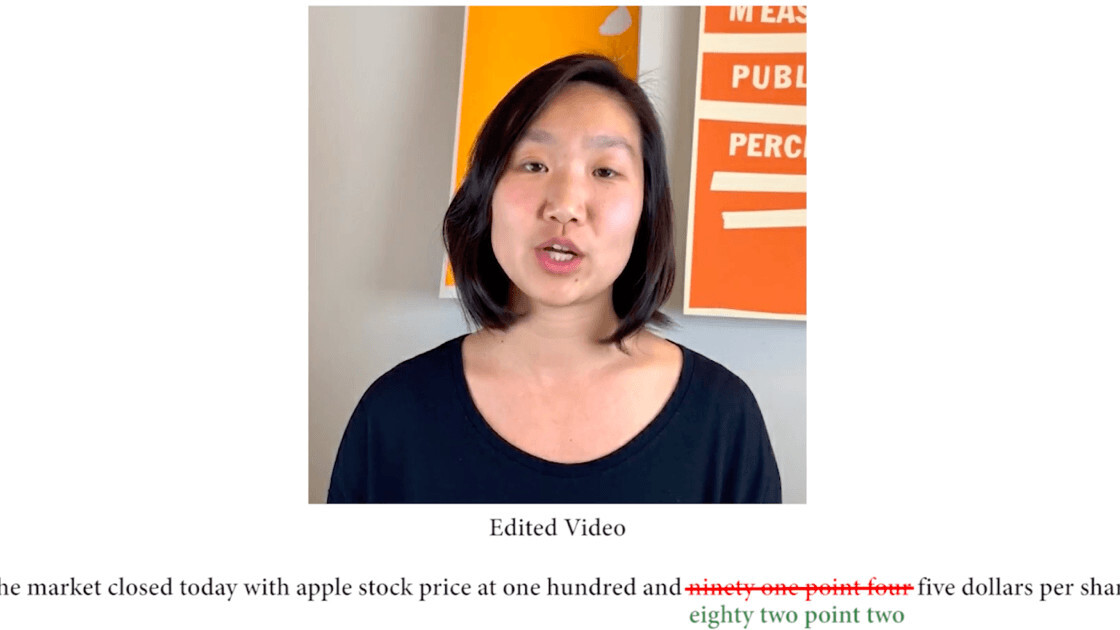

Once you edit the transcript, the algorithm searches the footage for segments containing lip movements that match the words you’ve typed in, and uses them to replace the original phrase. But the replaced part can have a lot of pauses and cuts, because it’s stitched together from tiny segments of video taken from across the clip. So, the algorithm applies some intelligent smoothing to make the edited video appear more natural.
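To get a feel for that search-and-stitch step, here is a minimal Python sketch. It is not the researchers’ code: it assumes the footage has already been turned into a simple list of phoneme labels (one per short video segment), and the function name `find_segments` and the greedy longest-match strategy are purely illustrative. It covers the newly typed phrase with the longest runs it can find in the original footage, and every extra run means one more cut that would need smoothing afterwards.

```python
from typing import List, Tuple


def find_segments(source: List[str], target: List[str]) -> List[Tuple[int, int]]:
    """Cover `target` with contiguous runs of phonemes copied from `source`.

    Returns (start, end) index pairs into `source`, with `end` exclusive.
    Hypothetical helper for illustration only: a greedy longest-match
    search, not the method used in the research.
    """
    segments = []
    pos = 0  # next phoneme of the target that still needs footage
    while pos < len(target):
        best_start, best_len = -1, 0
        # Try every starting point in the source and keep the longest match.
        for start in range(len(source)):
            length = 0
            while (start + length < len(source)
                   and pos + length < len(target)
                   and source[start + length] == target[pos + length]):
                length += 1
            if length > best_len:
                best_start, best_len = start, length
        if best_len == 0:
            raise ValueError(f"phoneme {target[pos]!r} never occurs in the source")
        segments.append((best_start, best_start + best_len))
        pos += best_len
    return segments


# Made-up phoneme labels for "hello world" as the original footage,
# and "lower" as the phrase typed into the transcript.
footage = ["HH", "AH", "L", "OW", "W", "ER", "L", "D"]
new_phrase = ["L", "OW", "ER"]

pieces = find_segments(footage, new_phrase)
cuts = len(pieces) - 1  # each join between pieces is a visible cut to smooth
print(pieces, cuts)
```

A longer recording gives a search like this more material to draw on, so it can find longer matching runs and make fewer cuts.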

Right now, this algorithm works only when you feed it at least 40 minutes of original footage to train it.

In a video explaining this new method, Stanford’s Ohad Fried shows how easy it is to replace a phrase without compromising the quality of speech.

Naturally, this algorithm raises concerns that anyone could edit a speech (including one by a public figure, such as a politician), inject some misinformation, and make it look natural, adding to our worries about deepfakes. However, Fried said that we’ve been through this with photo editing software as well and the world still turns.

With that in mind, and given the numerous options for making edited videos identifiable, Fried believes this tool could prove useful to video producers by saving the time and effort required to re-shoot flubbed portions of speeches and other footage involving humans speaking in front of a camera.

He added that there are several options, such as digital watermarking, for guarding against counterfeit videos, and that the research would, in fact, encourage others to build such solutions:

One is to develop some sort of opt-in watermarking that would identify any content that had been edited and provide a full ledger of the edits. Moreover, researchers could develop better forensics such as digital or non-digital fingerprinting techniques to determine whether a video had been manipulated for ulterior purposes. In fact, this research and others like it also build the essential insights that are needed to develop better manipulation detection.

While researchers are optimistic about the positive use of this algorithm, it’d be foolish to assume that this would be safe to use in mainstream products without proper safeguards.

You can read more about the research here.
