In January, IBM published a research study dubbed ‘Diversity in Faces’ to explore whether AI-based systems developed biases against people based on their appearance. The company used a dataset consisting of nearly a million pictures of people’s faces from photo-hosting site Flickr. However, it failed to notify those Flickr users that it was using their pictures to train an AI – and you could be one of them.

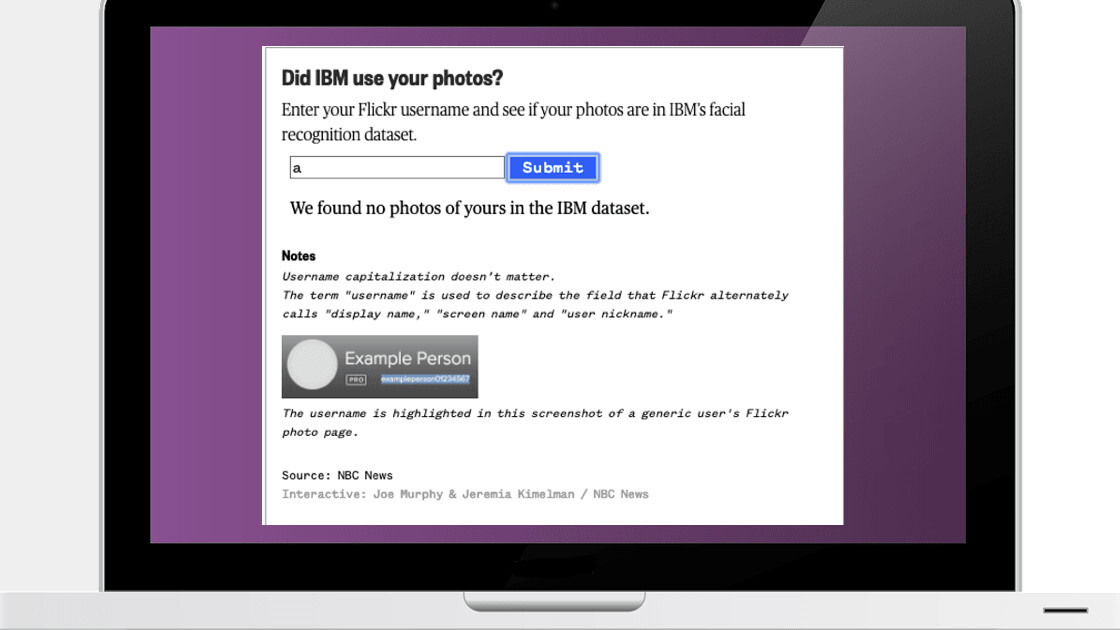

NBC, which broke the story, has developed a tool to check if IBM has used your Flickr photos.

Here’s how it works:

- Open this link in your browser, and scroll down until you see the tool as captured below.

- Enter your username, and click on the Submit button.

- If IBM’s used your photos, the tool will show you a message with the number of photos used in the company’s research.

While IBM said that Flickr users or subjects can opt out of the research, NBC’s report suggests that doing so is extremely hard. The aforementioned tool will help you check whether IBM used your photos, but you’ll still have to provide individual links to the photos to get them removed. That’s a shitty way to handle user data for research. Hopefully, the company makes the opt-out process easier.
