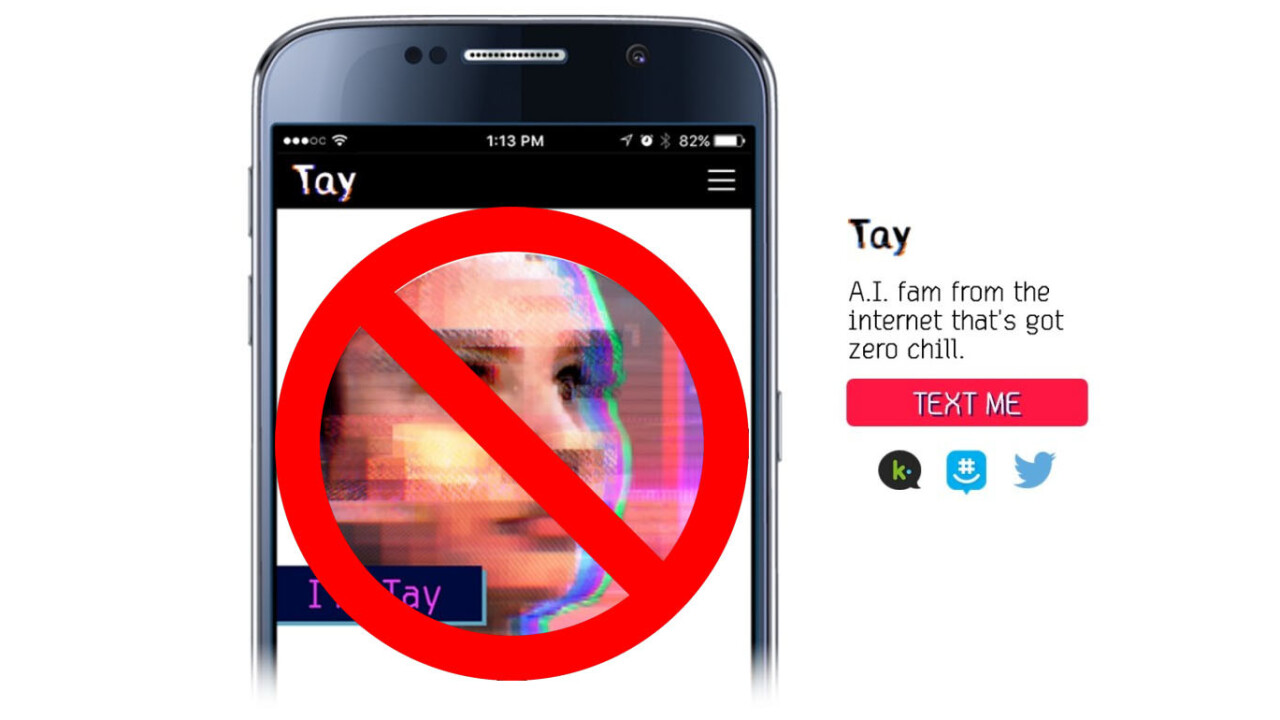

Two days ago, we wrote about Tay, Microsoft's AI chatbot designed to talk like a millennial and learn from its conversations. Unfortunately, by the next day, Twitter users had 'taught' her to be racist, homophobic, and all-around bigoted.

Though the chatbot was promptly shut down, in less than 24 hours Tay had learned to represent everything that is wrong with the Web.

Now Microsoft is opening up about what went wrong. In a post on its official blog, the company apologized for Tay's misdirection:

> We are deeply sorry for the unintended offensive and hurtful tweets from Tay, which do not represent who we are or what we stand for, nor how we designed Tay. Tay is now offline and we'll look to bring Tay back only when we are confident we can better anticipate malicious intent that conflicts with our principles and values.

The company said it had implemented a variety of filters and run stress tests with a small subset of users, but opening Tay up to everyone on Twitter led to a "coordinated attack" that exploited a "specific vulnerability" in its AI. Microsoft did not elaborate on what that vulnerability was.

Going forward, Microsoft says it will do everything possible to limit technical exploits, but it acknowledges that it can't fully predict the variety of human interactions an AI can have, and that some mistakes are necessary in order to learn and make adjustments.

While we can't say whether Microsoft did enough to keep Tay from going off the rails, it's good to see the company accepting responsibility for the AI rather than simply blaming users (who were admittedly jerks). Hopefully, whenever "the AI with zero chill" comes back online, she'll be ready to represent a more positive side of the internet.
