Dr. Patrick Lin is the Director of Ethics and the Emerging Sciences Group at California Polytechnic State University in the U.S. and is one of the nation’s leading experts in ethical issues surrounding robots, particularly those on the horizon in the field of military robotics. I had the pleasure of interviewing Dr. Lin about the state of ethical robotics and why we really fear bad robots.

CBM: Why do you think so many sci-fi movies are about robots behaving unethically? Why do you think humans are fascinated by evil robots?

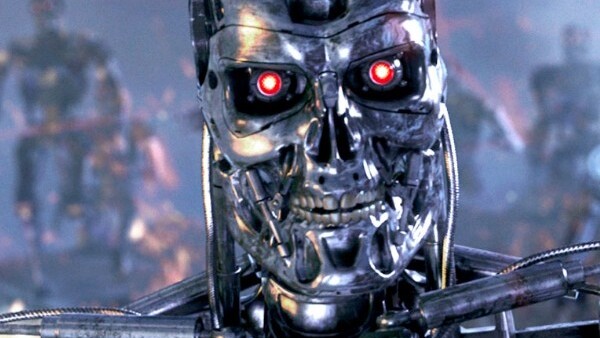

PL: I think that much of this is a reflection about ourselves, Homo sapiens. In important ways, robots are a replacement for humans: they can do many of our jobs better – usually described as the three D’s: dull, dirty, and dangerous jobs – and they can do some jobs that we can’t do at all, such as explore deep underwater or Martian environments. Thus, our fascination with bad robots is really that we are worried about the mischief we bring unto ourselves through technology, directly or by proxy. It’s like a backfire – a spectacular, violent event that happens when things go differently than planned.

It’s also about the hubris we have in thinking that we can master our environment and in the faith we place in technology. But unlike, say, computers that crash, robotic errors can cause serious physical harm, which is more visceral than other harms. We trust that computer errors will eventually be fixed, such as restoring a bank account that was accidentally erased, but physical harm can’t be undone. Errors aside, we might interact with or employ robots in ways that lend themselves to serious injuries. Unlike computers, robots have been causing direct and immediate pain and harm in the course of their normal operation, with the first recorded death about 30 years ago.

CBM: What are the main issues surrounding robots and ethics?

PL: Today, the most urgent issues are related to military robots. First, the military has long been a key driver of technology research and development. They also have relaxed rules or standards that we would not have in a civilian setting. For instance, in a city, there would be rigorous testing and safeguards (we hope) to ensure that a robot doesn’t harm or kill a human being; but in the military, that could be the robot’s exact job – to kill the enemy. Therefore, robotics may naturally be used more widely in a military setting than a civilian one, thus raising ethical issues earlier. The “Terminator” scenario isn’t plausible or near-term enough to be seriously discussed now, but it’s not unreasonable to worry now that the military would field a war-robot that autonomously makes life-and-death decisions as soon as it could. Other current concerns include the psychological effect of, say, a virtual pilot pulling the trigger to kill people with a Predator UAV remotely controlled half a world away as well as the legality of using such robots for missions we probably otherwise would not attempt, such as cross-border strikes in Pakistan.

Apart from military uses, robots today are raising difficult questions about whether we ought to use them to babysit children and as companions to the elderly, in lieu of real human contact. Job displacement and economic impact have been concerns with any new technology since the Industrial Revolution, as in the Luddite riots to smash factory machinery that was replacing workers. Medical robots, especially surgical ones, raise issues related to liability or responsibility, say, if an error occurred that harmed the patient, and some fear a loss of surgical skill among humans. And given continuing angst about privacy, robots present the same risk that computers do (that is, “traitorware” that captures and transmits user information and location without our knowledge or consent), if not a greater risk given that we may be more trusting of an anthropomorphized robot than a laptop computer.

CBM: How do Asimov’s 3 Laws of Robotics play into our current state of robot ethics? What about the way we program robots?

PL: By pointing to Asimov’s laws as a possible solution, which some people do, the first fantasy here is that ethics is reducible to a simple set of rules. Even when you’ve identified ethical principles or values, it’s not clear how to resolve conflicts among them. For instance, Alan Dershowitz famously pointed out that democracies faced a triangular conflict among security, transparency, and civil liberties on the issue of whether we ought to engage in torture or not, say, in a ticking time-bomb case. We might also agree to some general principle that we should never kill an innocent person, but what if killing an innocent person were needed to save 10 lives, or 100, or 100,000 – can we defend a non-arbitrary threshold number at which the principle can be overruled?

Second, many issues in robot ethics are not about perfect programming. Imagine that we’ve created a perfectly ethical babysitting robot – it is still an open question whether we ought to use it in the first place: Replacing human love and attention with machines to care for our children and senior citizens may be a breach of our moral responsibility to care for them. Perfect programming also doesn’t solve the issues of job displacement, hacking for malicious uses, and other worries.

Third, even if all robot-ethics issues are about programming, we have yet to create a perfectly running piece of complex software or hardware, so why would we think that the right programming will solve all our problems with robots? Hardly a couple days go by without some application, including the operating system, crashing on my computer, and these are applications that have benefitted from years of development, field testing, and evolution.

CBM: What are examples of technologies that got “too far ahead of our ability to manage them–as new technologies often do,” as you wrote in your Forbes essay?

PL: Well, I also work in other areas of technology ethics. So in nanotechnology, for instance, there was a rush to bring to market products that used nanomaterials, because “nanotechnology” was a trendy marketing buzzword. Yet there were few studies done on, say, whether the body can absorb the nanoparticles found in some sunblock lotions or whether there is a negative environmental impact from nanosilver particles discharged by some washing machines. Companies just started selling these products with little regard for larger effects.

As another example, Ritalin is being (ab)used by otherwise-normal students and workers looking for more focus and productivity in their studies or job – besides the unknown health risk, we haven’t really thought about the bigger picture, how cognitive enhancers might be disruptive to society, much as steroids are to sports. Also, consider that the Human Genome Project started in 1990, but it was only 17 years later that there was actual legislation to protect Americans from discrimination by employers or insurers who might have access to their genetic profiles. Finally, look at the modern world with networked computers – we’re still fumbling through intellectual-property issues (such as music file-sharing) and privacy claims. All this is to say that there’s a considerable lag time between ethics or public policy and the development of a technology. My colleague, Jim Moor at Dartmouth, calls this a “policy vacuum.”

CBM: How do researchers and academics study robot ethics? Can you actually perform experiments or is the field mainly speculative?

PL: Scenario-planning is just one item in a larger toolbox for applied ethicists. And being speculative isn’t by itself an objection to these scenarios. For instance, counterterrorism efforts involve imagining “what-if” scenarios that might not have yet occurred and hopefully never will occur; nevertheless, planning for such scenarios is often useful and instructive, no matter how remote the odds are. But imagining the future must also go hand in hand with real science or what is possible and likely. Some scenarios, such as robots taking over the world or the “Terminator” scenario, are so distant, if even possible, that it’s hard to justify investing much effort on those issues at present.

Winston Churchill reportedly said, “It is a mistake to try to look too far ahead. The chain of destiny can be grasped only one link at a time.”

Other tools include looking at history for past lessons and analogues and even looking at science fiction or literature to identify society’s assumptions and concerns about a given technology such as robotics, and this means working with academics beyond mere philosophers. And of course we need to work closely with scientists to stay grounded in real science and technology. This could lead to interesting experiments, for instance, programming a robot to follow a specific ethical theory or set of rules to see how they perform relative to other robots following different ethical theories.

CBM: Is it possible to program ethical behaviors into robots? And do you agree with that approach?

PL: This could work for robots used for specific tasks or in narrow operating environments, but it’s hard to see how that’d be possible today or in the near term for a general-purpose robot that’s supposed to move freely through society and perform a wide range of tasks – what is ethical in the larger, unstructured world depends on too many variables and may require a lot of contextual knowledge that machines can’t grasp, at least in the foreseeable future. In other words, what is ethical is simply much too complex to reduce to a programmable set of rules. And even a “bottom-up” approach of letting the robot explore the world and learn from its actions and mistakes might still not get a general-purpose robot ethical enough that we can responsibly unleash it upon the world. But robots tasked with specific jobs, such as building cars or delivering classroom instruction, could certainly be programmed to avoid the obvious unethical scenarios, such as harming a person. Wendell Wallach at Yale and Colin Allen at Indiana University, Bloomington, discuss exactly these issues in their recent book.

CBM: Do you believe that we can program emotional intelligence into artificial intelligence?

PL: We seem close to creating robots that appear to convincingly exhibit emotions and empathy, as well as identify and respond to emotions when they encounter them in humans and elicit certain emotions (or “affective computing”). But this is much different from creating robots that actually feel emotion, which we’re not even close to doing. This requires a more complete theory of mind than we currently have and probably consciousness – and we still know little about how minds work.

Even if we could make robots that feel emotions, how would we verify that their experience of pain or happiness is qualitatively similar to ours? In philosophy, this is called the “other minds” problem, which is that we can’t even prove that other minds exist, or that your experience of red is the same as mine. But maybe that doesn’t matter as long as your experience of red is functionally equivalent to mine, that is, we call the same things “red.” As it applies to robots, maybe it doesn’t matter so much that a robot actually feels happiness or fear, as long as it acts the same as if it did.

CBM: What do you see happening in the future? Will robots have ethics built into their software?

PL: I have no idea, and by the time that’s even possible, it would be so far off in the future that maybe no one today can really say. But one thing seems to be certain, which is that the future is never the way we had imagined it’d be. It could be that robotic autonomy isn’t reliable enough for us to trust it, and so predictions like self-driving cars (like the flying cars forecast half a century ago) never come into being. But if we ever do have robots that we would require to behave ethically – for instance, because they roam through society and interact with kids, humans, and property – then, yes, I hope there’s something in place to help ensure that happens. Ron Arkin at Georgia Tech is working on something similar, with his concept of an “ethical governor” which, like a car’s governor, limits the behavior of a robot.

CBM: Then, why study robot ethics?

PL: Because technology makes a real impact on lives and the world, and how we develop and use it has practical effects, some positive and some negative. Think of all the great benefits from the Internet – but also think of the bad, like cyberbullying that has led to deaths in the real world. If we can reduce some of these negative effects by thinking ahead about the social and policy questions technology will give rise to, then we’re leaving the world in a little better shape than we found it, and that’s all any of us can really do.

Personally, I’m interested in robot ethics because robots do capture the imagination, as I mentioned at the beginning. As Peter Singer puts it, they’re frakkin’ cool. And even though I went on to study philosophy in college and graduate school, I’ve been interested in science and technology as far back as I can remember. This includes programming computers before I was a teenager – which doesn’t sound like a big deal these days, but it was unusual 30 years ago. An Atari 400 or TI-99/4A or Apple IIe might not have the processing power of an ordinary cellphone today, but they have personality and promise a sort of joyful discovery that only robots can dream of having.

I first met Dr. Patrick Lin less than two years ago, when I had the pleasure of working with him on a piece titled “The Ethical War Machine,” for a report I put together on the state of artificial intelligence.

To raise awareness of these issues, as well as chase down a few rabbit holes, Dr. Patrick Lin is publishing an edited volume called Robot Ethics, due out from MIT Press later in 2011, along with his colleagues at Cal Poly, philosopher Keith Abney and roboticist George Bekey. Bekey is also a professor emeritus at USC and founder of its robotics lab. So, together, they make a uniquely qualified research team to investigate robot ethics. Lin is now also working with such groups as Stanford Law School’s Center for Internet and Society, which is also interested in robot ethics, and so we should have some interesting projects coming up.
