A team of scientists from the University of Maryland recently came up with a take on hyperdimensional computing theory that could give robots memories and reflexes. This could break the stalemate we seem to have reached with autonomous vehicles and other real-world robots, and lead to more human-like AI models.

The solution

The Maryland team came up with a theoretical method by which hyperdimensional computing – a hypervector-based alternative to computations based on Booleans and numbers – could replace current deep learning methods for processing sensory information.

According to Anton Mitrokhin, a PhD student and author on the team’s research paper, this is important because there’s a processing bottleneck keeping AI from functioning like humans do:

“Neural network-based AI methods are big and slow, because they are not able to remember. Our hyperdimensional theory method can create memories, which will require a lot less computation, and should make such tasks much faster and more efficient.”
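For readers who want a feel for what "hypervector-based" computation looks like, here is a minimal sketch in plain Python. It is not the Maryland team's code. It assumes one common textbook formulation: random vectors of +1 and -1, "binding" by elementwise multiplication, and "bundling" by majority vote. The dimensionality `DIM` and the names `color`, `shape`, `size`, `red`, `round_`, and `small` are made up for illustration.

```python
import random

DIM = 10_000  # hypervector dimensionality (an assumed, illustrative size)

def random_hv(rng):
    """Random bipolar hypervector with entries +1 or -1."""
    return [rng.choice((-1, 1)) for _ in range(DIM)]

def bind(a, b):
    """Bind two hypervectors by elementwise multiplication."""
    return [x * y for x, y in zip(a, b)]

def bundle(*vectors):
    """Bundle hypervectors by elementwise majority vote (sign of the sum)."""
    return [1 if sum(column) >= 0 else -1 for column in zip(*vectors)]

def similarity(a, b):
    """Normalized dot product: 1.0 for identical vectors, near 0 for unrelated ones."""
    return sum(x * y for x, y in zip(a, b)) / DIM

rng = random.Random(0)
color, shape, size = (random_hv(rng) for _ in range(3))   # hypothetical "roles"
red, round_, small = (random_hv(rng) for _ in range(3))   # hypothetical "values"

# One hypervector encoding the record {color: red, shape: round, size: small}.
record = bundle(bind(color, red), bind(shape, round_), bind(size, small))

# Binding with a bipolar vector is its own inverse, so this yields a noisy "red".
recovered = bind(record, color)

print(similarity(color, shape))      # near 0: random hypervectors are almost orthogonal
print(similarity(recovered, red))    # clearly positive: "red" is recoverable
print(similarity(recovered, small))  # near 0: the wrong value does not match
```

The point of the sketch is that a whole record lives in a single vector of the same size as its parts, and that looking something up is a multiplication followed by a similarity check rather than a pass through a trained network.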

The creation of memories – something current AI doesn’t have – is important for the prediction of future tasks. Imagine playing tennis: you don’t perform the calculations in your head every time you hit the ball; you just run over, grunt, and hit it. You perceive the ball and you act – there’s no intermediate step in play where real-world data is transformed into digital data that’s then processed for action. This ability to translate perception into action without a filter is intrinsic to our ability to function in the real world.
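The perceive-then-act idea can be illustrated with a toy "memory" built from hypervectors. This is a sketch under assumptions, not the researchers' system: the percept and action names are invented, each one is assigned a random vector of +1 and -1, and the memory is simply the elementwise sum of every percept multiplied by its paired action. Recall multiplies the memory by a percept and picks the closest known action.

```python
import random

DIM = 10_000  # assumed hypervector size

def random_hv(rng):
    """Random bipolar hypervector with entries +1 or -1."""
    return [rng.choice((-1, 1)) for _ in range(DIM)]

def bind(a, b):
    """Elementwise multiplication; for bipolar vectors it is its own inverse."""
    return [x * y for x, y in zip(a, b)]

def similarity(a, b):
    """Dot product scaled by the dimensionality."""
    return sum(x * y for x, y in zip(a, b)) / DIM

rng = random.Random(1)

# Hypothetical percepts and actions, each given a random hypervector.
percepts = {name: random_hv(rng) for name in ("ball_left", "ball_right", "ball_high")}
actions = {name: random_hv(rng) for name in ("step_left", "step_right", "jump")}

experience = [
    ("ball_left", "step_left"),
    ("ball_right", "step_right"),
    ("ball_high", "jump"),
]

# The memory is a single vector: the sum of all bound percept-action pairs.
memory = [0] * DIM
for percept, action in experience:
    pair = bind(percepts[percept], actions[action])
    memory = [m + p for m, p in zip(memory, pair)]

def recall(percept_name):
    """Unbind the percept from memory and return the most similar known action."""
    noisy_action = bind(memory, percepts[percept_name])
    return max(actions, key=lambda name: similarity(noisy_action, actions[name]))

for percept, _ in experience:
    print(percept, "->", recall(percept))
```

Adding a new experience here is one multiplication and one addition, with no retraining loop, which is the kind of saving the quote above is pointing at.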

The problem

The driver of a Tesla was killed in May 2016 when the car’s semi-autonomous driver assistance system failed to “see” the white trailer of a semi-truck, and the vehicle crashed into it at highway speeds. The same thing happened again last week. Different model Tesla; different version of Autopilot; same result. Why?

While Elon Musk deserves some of the blame, and other human error deserves the lion’s share, the fact remains that deep learning sucks at driving cars. And there’s not much hope it’s going to get a lot better.

Crying wolf is getting old. Even me tweeting about crying wolf getting old is getting old… "The Long and Lucrative Mirage of the Driverless Car", "That reality is still far off, but that hasn’t stopped companies from cashing in on" it. https://t.co/eJukjBgtKb

— Rodney Brooks (@rodneyabrooks) May 16, 2019

The reason for this is complex, but it can be easily explained. AI doesn’t know what a car, person, trailer, or hotdog looks like. You can show a deep learning-based AI model a million pictures of a hotdog and train it to recognize pictures of hotdogs with 99.9 percent accuracy – but it’ll never know what one actually looks like.

When a car drives itself, it’s not seeing the roads – cameras don’t allow AI to see. An AI-based computer brain for a driverless car may as well be a person in an isolation booth listening to descriptions of what’s happening on the roads in a different country, spoken by someone who is poorly translating them from a language they don’t speak very well. It’s not an optimum system, and the people who understand how deep learning works aren’t surprised people are dying in autonomous vehicles.

The future

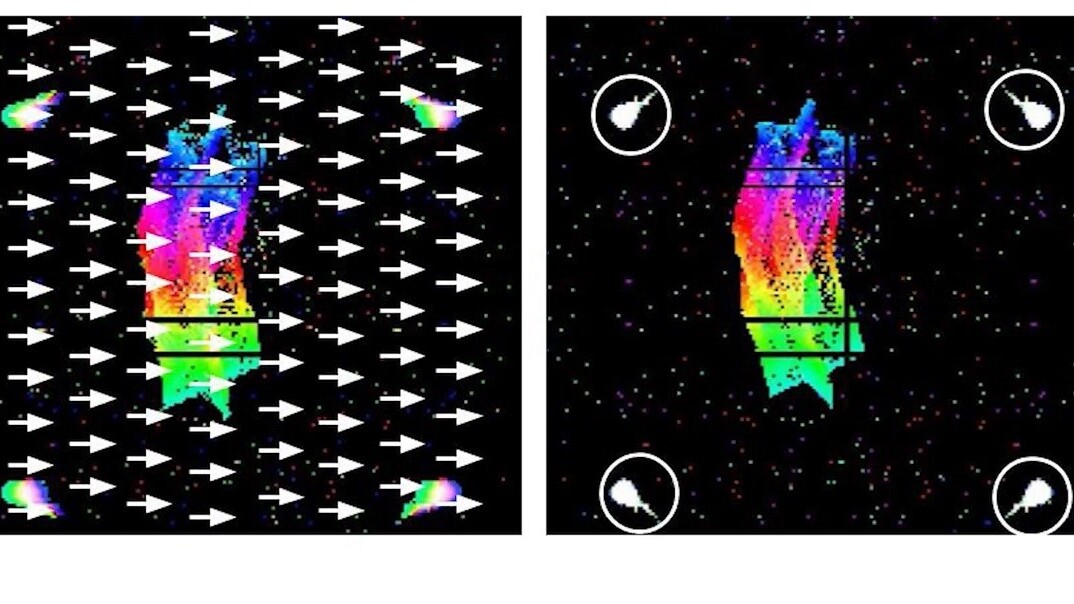

Hyperdimensional computing theory offers the ability for AI to truly “see” the world and make its own inferences. Instead of trying to brute-force process the entire universe by doing the math for every perceivable object and variable, hypervectors can enable “active perception” in robots.

According to Yiannis Aloimonos, lead author on the research paper:

“An active perceiver knows why it wishes to sense, then chooses what to perceive, and determines how, when and where to achieve the perception. It selects and fixates on scenes, moments in time, and episodes. Then it aligns its mechanisms, sensors, and other components to act on what it wants to see, and selects viewpoints from which to best capture what it intends. Our hyperdimensional framework can address each of these goals.”

While the creation and implementation of a hyperdimensional computing operating system for robots is still theoretical, the ideas provide a path forward for research that could result in a paradigm for driverless car AI that solves the current generation’s deal-breaking problems.

Furthermore, the implications go beyond just robotics. The researchers’ ultimate goal is to replace iterative neural network models – which are time-consuming to train and incapable of active perception – with hyperdimensional computing-based ones that are faster and more efficient. This could lead to a sort of “show it, don’t grow it” approach to developing new machine learning models.

We may be closer to achieving a robot capable of learning to perform new tasks in unfamiliar environments – like Rosie the Robot from “The Jetsons” – than most experts think. Of course, tech like this could also lead to other… less cartoony things:

This is nothing. In a few years, that bot will move so fast you’ll need a strobe light to see it. Sweet dreams… https://t.co/0MYNixQXMw

— Elon Musk (@elonmusk) November 26, 2017
