Google has unveiled a creepily human-like language model called LaMDA that CEO Sundar Pichai says “can converse on any topic.”

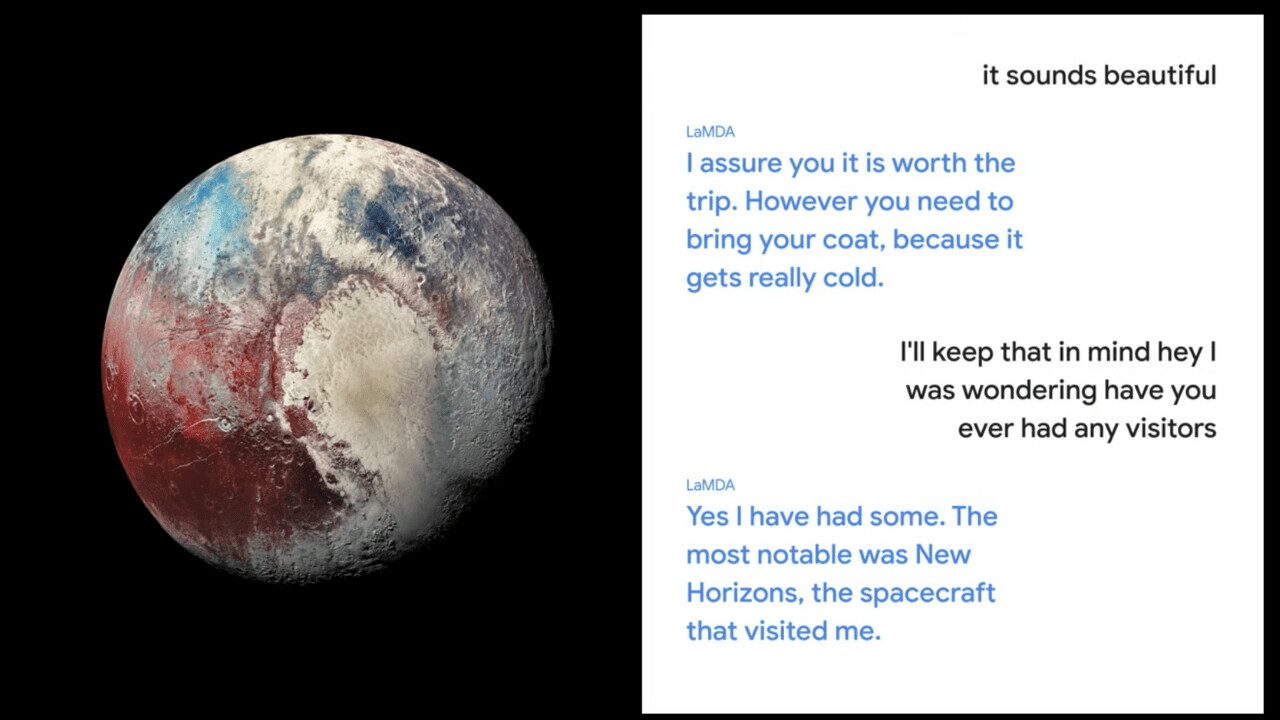

During a demo at Google I/O 2021 on Tuesday, Pichai showcased how LaMDA can enable new ways of conversing with data, like chatting to Pluto about life in outer space or asking a paper airplane about its worst travel experiences.

Pichai said LaMDA generates a natural conversation style by synthesizing concepts, like the New Horizons spacecraft and the coldness of the cosmos, from its training data, rather than having them hand-programmed into the model.

As the responses aren’t pre-defined, they maintain an open-ended dialogue that Pichai said “never takes the same path twice” and can cover any topic.

The model also assumed an eerily anthropomorphic form. The AI paper plane, for instance, addressed its interlocutor as “my good friend” while Pluto complained that it’s an under-appreciated planet.

In a blog post, Google said that LaMDA — short for “Language Model for Dialogue Applications” — is built on Transformer, the same neural network architecture used to create BERT and GPT-3:

“That architecture produces a model that can be trained to read many words (a sentence or paragraph, for example), pay attention to how those words relate to one another, and then predict what words it thinks will come next.

“But unlike most other language models, LaMDA was trained on dialogue. During its training, it picked up on several of the nuances that distinguish open-ended conversation from other forms of language. One of those nuances is sensibleness. Basically: does the response to a given conversational context make sense?”
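
The "pay attention to how those words relate to one another" step in Google's description can be illustrated with a toy sketch of scaled dot-product self-attention, the core operation of the Transformer architecture. This is not LaMDA's code: the three tokens and their two-dimensional vectors are invented for illustration, and a real model would also apply learned projections, stack many attention heads and layers, and add a final step that predicts the next word.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Scaled dot-product self-attention without learned projections.

    Each vector acts as query, key, and value (a simplifying assumption).
    Returns the mixed vectors and the attention weights per token.
    """
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Score how strongly this token relates to every token.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        weights = softmax(scores)
        # Blend all token vectors according to those weights.
        mixed = [
            sum(w * value[j] for w, value in zip(weights, vectors))
            for j in range(dim)
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


# Hypothetical tokens and embeddings, chosen only for illustration.
tokens = ["pluto", "is", "cold"]
embeddings = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

mixed, weights = self_attention(embeddings)
for token, row in zip(tokens, weights):
    print(token, [round(w, 3) for w in row])
```

Each printed row shows how much one token "attends" to every token in the sentence, itself included. In a trained model, these weights come from learned parameters rather than hand-picked vectors.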

The flow of the conversations was impressively lifelike in the demo, but the model sometimes generated nonsensical responses — like Pluto claiming it’s been practicing flips in outer space, or abruptly ending the conversation to go flying.

LaMDA remains a research project for now, but Pichai suggested it could be used in a range of products:

“We believe LaMDA’s natural conversation capabilities have the potential to make information and computing radically more accessible and easier to use. We look forward to incorporating better conversational features into products like Google Assistant, Search, and Workspace. We’re also exploring how to give capabilities to developers and enterprise customers.”

While LaMDA is trained only on text, Pichai said Google is also working on multimodal models that can help people communicate across images, audio, and video.

Inevitably, Pichai promised that the model meets Google’s “incredibly high standards on fairness, accuracy, safety, and privacy”, and is being developed in line with the company’s AI principles.

Those claims didn’t impress AI ethicist Timnit Gebru, who was fired by Google following a dispute over a research paper she co-authored on the risks of large language models — which are used in many of the search giant’s products.

"…And even when the language it’s trained on is carefully vetted, the model itself can still be put to ill use…Our highest priority, when creating technologies like LaMDA, is working to ensure we minimize such risks."

If that ain't a bold faced lie I don't know what is. https://t.co/bOQ1UUCWvx

— Timnit Gebru (@timnitGebru) May 18, 2021
