Google today gave us a peek behind the curtain of “Lens,” the new image recognition AI inside the Pixel 2.

Search what you see: to help you interact with the world around you, Pixel 2 comes with an exclusive preview of Google Lens. #madebygoogle pic.twitter.com/lOMvNwWwBV

— Google (@Google) October 4, 2017

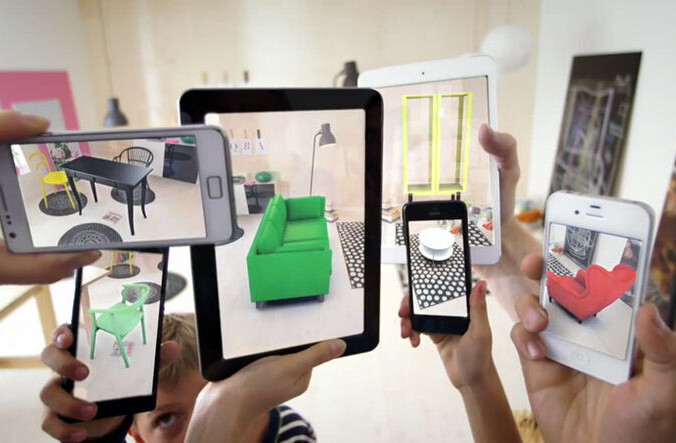

Lens uses sophisticated image recognition and tools like Google Translate to turn what it sees into meaningful information for the user. To use Google Lens ("Lensing it," as Google's presenters called it during the show), you simply point your phone at an object, such as an art print or a movie poster, and Lens displays the most relevant information it can find on Google.

Lens was originally announced at Google I/O earlier this year. At the time, Google described it as a way to help users understand what they were looking at through their phone's camera. Today's show gave more specific information on how Lens pulls data from the web to give users the information they need.

Google Pixel owners will get an exclusive first look at Lens later this year, and the Pixel 2 will be the first phone to ship with Lens preloaded. Other phones will presumably get the Lens app sometime afterward, but Google was tight-lipped about the timeframe.

For more from Google’s Pixel 2 hardware event, follow all our coverage here.