Google announced at its I/O conference today that it’s working on a new form of AI called Google Lens, which understands what you’re looking at and can help you by providing relevant responses.

With Google Lens, your smartphone camera won’t just see what you see, but will also understand what you see to help you take action. #io17 pic.twitter.com/viOmWFjqk1

— Google (@Google) May 17, 2017

With Lens, Google’s Assistant will be able to identify objects in the world around you and act on them through Google’s various apps.

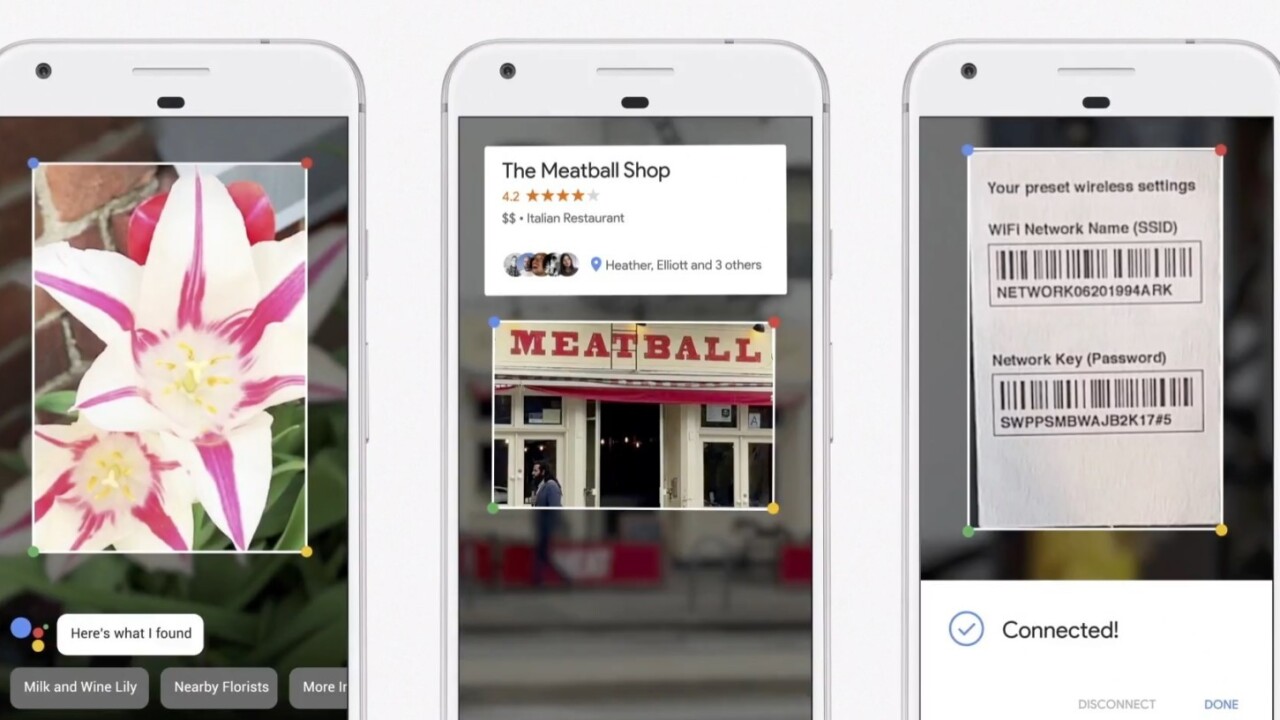

For example, Lens can help you identify a flower you’re looking at using Google image search, or provide you with restaurant recommendations based on your location in Google Maps.

The service seems very ambitious and versatile. Scott Huffman, Vice President of Engineering for Assistant, mentioned using Lens to translate a Japanese street sign and using it to identify a food he didn’t recognize. Huffman said Lens facilitates a conversation between the user and Assistant using visual context and machine learning.

Some of the features are even more complex and helpful. If you want to connect your phone to a Wi-Fi network, you can just point your camera at the network’s login credentials, and the Assistant will do the rest of the work.
