Machine learning, which helps computers do things like understand complex voice commands and improve image search capabilities, can be taxing on traditional hardware. Google should know – over 100 of its products and features use this technology to run and improve themselves constantly.

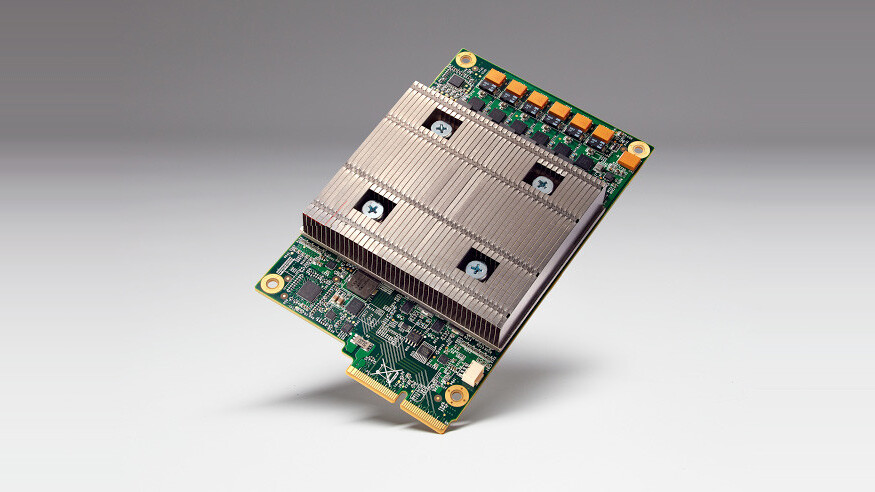

The company has revealed that over the past few years, it quietly developed its own custom processor for such tasks. The Tensor Processing Unit (TPU) is built expressly for running TensorFlow, Google’s in-house machine learning system that it open-sourced last year.

Google says it’s used TPUs in its data centers for more than a year now, and that the performance improvements they offer are roughly equivalent to fast-forwarding technology about seven years into the future, if you go by Moore’s Law.
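
To put that comparison in rough numbers, here is a minimal back-of-the-envelope sketch. It assumes the common reading of Moore's Law as one doubling roughly every two years; the doubling period and the helper name `moores_law_factor` are illustrative assumptions, and the result is not a figure Google has published for the TPU.

```python
def moores_law_factor(years: float, doubling_period_years: float = 2.0) -> float:
    """Improvement factor implied by one doubling per doubling period.

    The two-year default is an assumption (a common reading of Moore's Law),
    not a number taken from Google's announcement.
    """
    return 2.0 ** (years / doubling_period_years)


# Seven years at one doubling every two years is 3.5 doublings.
factor = moores_law_factor(7)
print(f"Implied improvement over 7 years: about {factor:.1f}x")
```

Under that assumption, a seven-year jump works out to roughly an order of magnitude. A slower or faster doubling period changes the answer considerably, so treat it as a way to read the claim rather than a benchmark.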

The company notes that it’s been able to squeeze more operations per second into the silicon and get better results in less time than with previous solutions. This level of performance allows TPUs to assist powerful AI like AlphaGo, which defeated a world champion in a Go tournament earlier this year.

Sadly, the TPU is not for sale. However, you can be sure that these processors will be at the heart of Google’s forthcoming technologies and services as AI and machine learning become more important in the future.

If you’re hankering for a way to integrate machine learning into your own hardware projects, you’ll want to check out Movidius’ USB stick-sized Fathom. It’s compatible with TensorFlow and other systems and will soon be available publicly for under $100.
