Facebook’s experiment with manipulating the emotions of hundreds of thousands of users has quite rightly drawn criticism from people worried about the ethics of such a practice, no matter how scientific its approach. How could they mess with people’s emotions without them being willing test subjects?

Defenders of Facebook’s actions, such as VC Marc Andreessen (who owns a stake in Facebook through Andreessen Horowitz), say that this is no different to any other A/B test. It’s true that we’re unwitting subjects in experiments run on websites and apps all the time. The color of a ‘Buy’ button, the call-to-action in an ad, an unannounced new feature in a Twitter app… we don’t know about these tests, but they influence our behavior. Still, affecting users’ emotions over the space of a week feels different.

This wasn’t just testing a different design or function to influence our behavior; that kind of test is to be expected. After all, when you visit a website or open an app, you’re stepping onto its creator’s turf and expect to be pushed towards buying something, subscribing to something and the like. On Facebook, though, we expect to see our friends’ lives, the good and the bad in equal measure. Deliberately skewing the feed towards positive or negative posts just to see what will happen crosses a line that many of us are not comfortable with.

Manipulation everywhere

Andreessen argues that TV shows, books, newspapers and indeed other people deliberately affect our emotions.

Andreessen argues that TV shows, books, newspapers and indeed other people deliberately affect our emotions.

The difference, though, is that we willingly enter into these experiences knowing that we’ll be frightened by a horror movie, uplifted by a feel-good TV series, upset by a moving novel, angered by a political commentator or persuaded by a stranger. These are all situations we’re familiar with. Algorithms are newer territory, and in many cases we may not even know we’re subject to them.

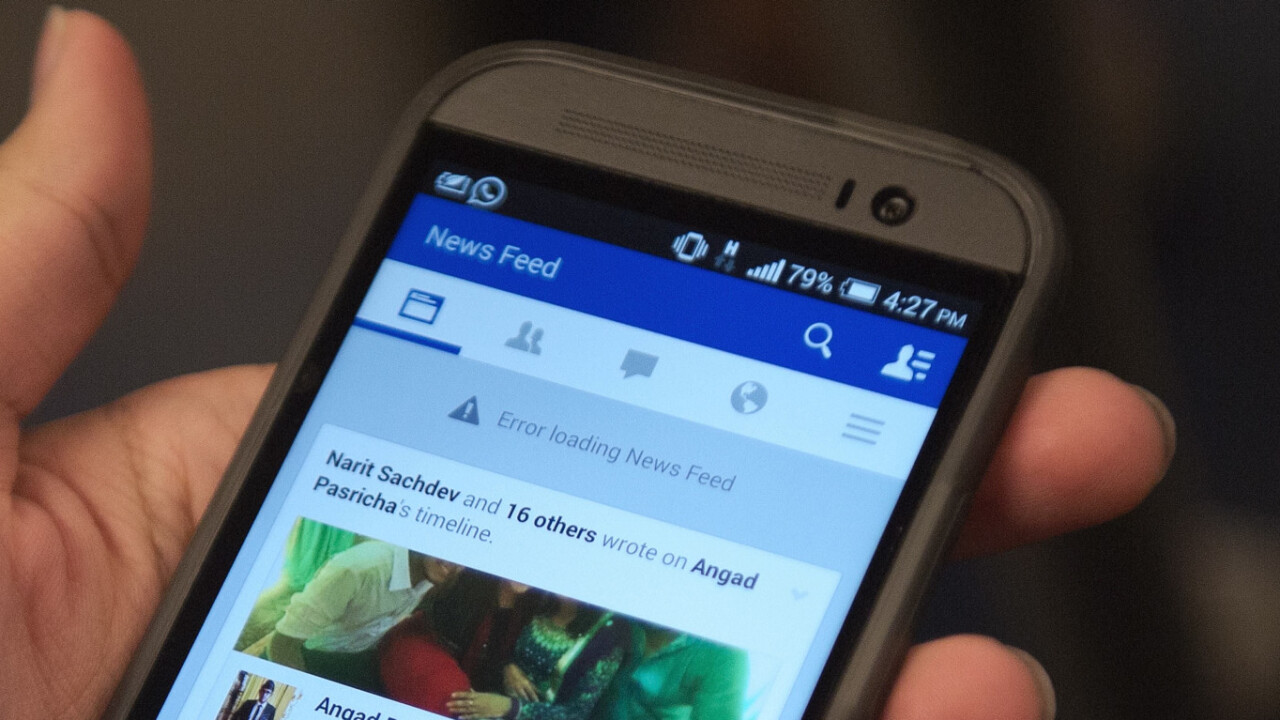

How many people outside the tech and media world, I wonder, understand just how artificial the Facebook News Feed is – that it doesn’t show everything it could, and why that is?

Are you algorithm-savvy?

Algorithms are generally depicted by the tech industry as positive things. For example, Foursquare recommends nearby places based on your previous actions and those of your friends (great!).

Then there’s Google, which displays search results based on universal factors like the level of authority a page has, but also on personalized signals like your past searches and other activity on your Google account (thanks, that’s useful!). Facebook, meanwhile, wants to keep you coming back, so it filters trashy app alerts and the like from your News Feed and shows you the kinds of posts you’re most likely to interact with. That’s not ideal for marketers or for people who want to see everything their friends post, but at least it’s in the interests of a positive user experience.

What the Facebook experiment shows us is that algorithms can be tweaked to affect us in ways that are against our interests. Feeling low because you see mostly negative status updates isn’t very nice, but I’m sure those who would like to manipulate us in other ways are very interested in this research: the politician who wants to get people to vote a certain way, or the activist who wants to sway people to his or her cause by exposing them to more of certain opinions.

These people can’t change Facebook’s News Feed algorithm, but the results of the emotion experiment could be applied elsewhere, and we need to be aware of that. Maybe the next Triumph of the Will will be an algorithm tweak for some online service rather than a film.

Sadly, while being media-savvy (a skill humans have built up over the past century) lets us tell when an ad, TV show or newspaper is trying to influence us, it’s not so simple with algorithms, which we have no way of understanding unless we can poke around in the code that guides them.

Being algorithm-savvy amounts to little more than knowing that any app or website could be trying to manipulate you in undesirable ways through what it selects to show you versus what you expect it to show you, but at least that’s a start.


Image credit: AFP/Getty Images
