The brilliant minds at Google’s sister company DeepMind are at it again. This time it appears they’ve developed a system by which driverless cars can navigate the same way humans do: by following directions.

A long time ago, before the millennials were born, people had to drive their cars without any form of GPS navigation. If you wanted to go somewhere new you used a paper map – they were like offline screenshots of Google Maps. Or someone gave you a list of directions.

It turns out this worked just fine, because people are pretty good at following a series of simple commands. Chances are, most of you could arrive at the intended destination if I handed you a sheet of paper with the following directions written on it:

- Take 1st Street south from exit 1

- Follow that for 2 miles

- Turn right at the big clock tower

- Keep right for a while until you see the fork in the road

- Turn left at the fork

- Third house on the left

AI wouldn’t be able to make heads or tails of that. You, on the other hand, have been trained your entire life to follow directions. Think about it: you’ve been navigating schoolhouse hallways, finding your way to the dentist’s office, and following step-by-step instructions for as long as you can remember. All of that experience transfers over, even if you’re somewhere you’ve never been before.

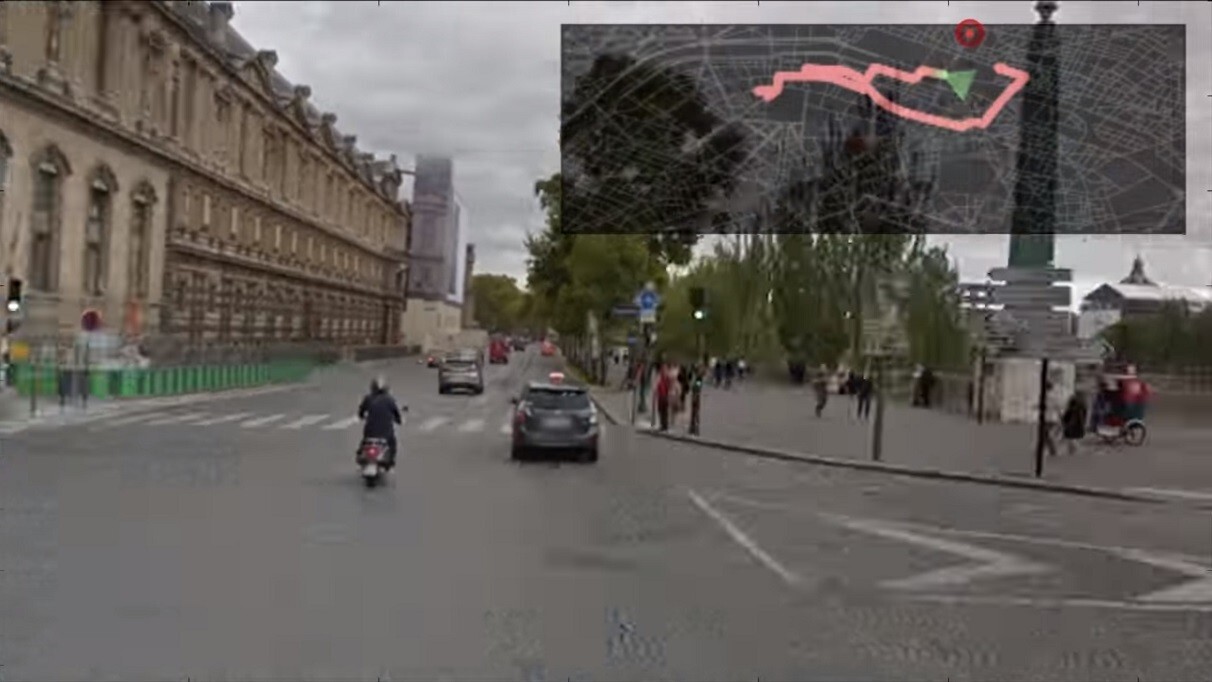

DeepMind had to come up with a way to train AI to do, basically, the same thing. Around this time last year the company developed a way to turn Google Street View imagery into a training environment for AI. Since it’s a bad idea to just let driverless cars loose on the roads, the company uses this environment to train AI to navigate a city without using a map or relying on any other knowledge.

Last year the AI could get from point ‘A’ to point ‘B’ by zooming around the images until it found the place it was looking for. Basically, it virtually ‘drove’ around the map environment until it found a match. That isn’t useful unless your idea of a fun taxi ride is the navigational equivalent of hitting the shuffle button on your Spotify playlist.

Now DeepMind’s come up with a framework by which AI can follow directions to navigate Street View. The team created what’s called the StreetNav training environment and developed several tasks by which an AI can be trained to navigate within the environment.

The researchers trained the AI on certain areas of New York City. Using a variety of parameters, the team gave the AI a set of navigational instructions and then rewarded it for accurately completing them and arriving at the intended destination.
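To make the "reward it for following directions" idea a little more concrete, here is a toy sketch in Python. It is not DeepMind's actual reward scheme: the `Episode` class, the `reward` and `run` functions, and the two reward values are all hypothetical names and numbers invented for illustration. The assumption is simply that the agent earns a small reward for each instruction it completes in order, plus a bigger one for reaching the destination once every instruction is done.

```python
from dataclasses import dataclass

# Hypothetical reward values, chosen only for illustration.
STEP_REWARD = 1.0
GOAL_REWARD = 10.0


@dataclass
class Episode:
    waypoints: list   # places the directions pass through, in order
    goal: str         # the intended destination
    next_index: int = 0  # which waypoint the agent should reach next


def reward(episode, location):
    """Return the reward for the agent arriving at `location`."""
    total = 0.0
    # Reward progress only when the agent hits the next waypoint in order.
    if (episode.next_index < len(episode.waypoints)
            and location == episode.waypoints[episode.next_index]):
        episode.next_index += 1
        total += STEP_REWARD
    # Pay the destination bonus only if every instruction was completed first.
    if location == episode.goal and episode.next_index == len(episode.waypoints):
        total += GOAL_REWARD
    return total


def run(episode, path):
    """Sum the rewards collected along a sequence of visited locations."""
    return sum(reward(episode, location) for location in path)
```

Under these assumptions, an agent that visits the clock tower, then the fork, then the third house collects every reward, while one that wanders straight to the house without following the directions gets nothing.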

It’s worth pointing out that this isn’t a video game or driving simulator. The AI isn’t training on traffic models or weather variables. What DeepMind is doing is concocting a general approach to common-sense navigation for driverless cars.

There’s currently no framework by which you can hop in a driverless taxi and get results by saying “I don’t know the address, but I can tell you how to get there from here.” Before that can happen on real roads, someone has to teach the AI how to follow directions in the lab.

Unfortunately the agents didn’t perform quite as well as had been hoped. According to the white paper:

“Given the gap between reported and desired agent performance here, we acknowledge that much work is still left to be done.”

That’s not to say the work isn’t a success. The AI models DeepMind trained were able to take knowledge gained from training in certain areas of New York City and apply it in other areas of the city they’d never trained on. Furthermore, the team even tested the models on areas of Pittsburgh, a city they’d never been exposed to, where they also performed quite well. This is a benchmark for further testing and the groundwork for future models.

Hopefully it all eventually adds up to a world where you don’t have to act like a robot to use one. DeepMind’s latest advances should pave the way for a more human driverless car experience.
