How will augmented reality (AR) glasses work for consumers? Specifically, what will be the user interface (UI) and/or user experience (UX) for the eyewear that will bring AR to the masses?

The user experience will be very important, since we are talking about a change to the fundamental interface between human and machine. Up until now, that interaction has been between humans and computers: personal computers, laptops, mobile devices, and tablets.

What we’re looking at over the next 10 years is a migration towards other, yet-to-be-designed near-to-eye devices that will let us see the real world. But they will also be able to place digital content in that view, either floating loosely in the real world or anchored within our view of it.

Need input on the inputs

It’s going to take something totally new to trigger a large-scale consumer response when it comes to augmented reality wearables. What’s going to lead the development of these devices? I think it will come down to decisions regarding the input. Those decisions are going to dictate what the UI and UX will become, rather than the other way around, with the UI and UX dictating the inputs.

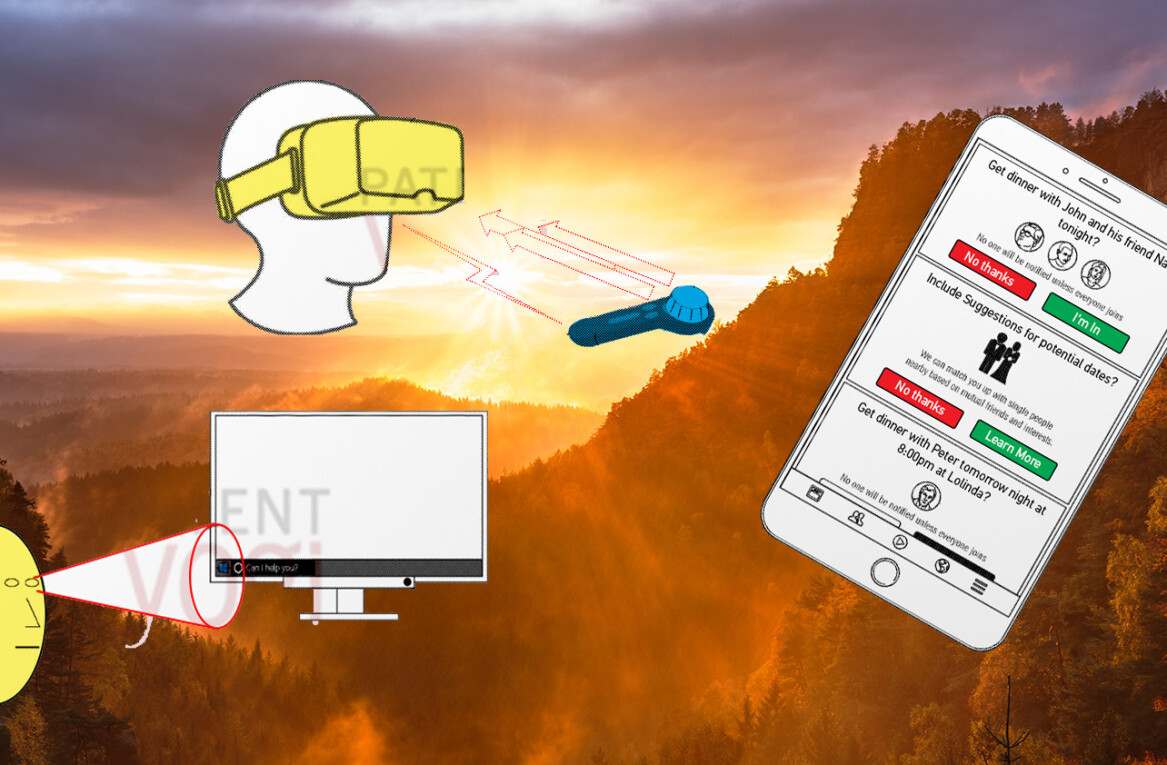

For example, input for a mobile phone means the ability to swipe, to use finger pinching, and the use of voice. These are all useful attributes in our current interaction with computers. Some of these will live on for AR wearables. For example, voice may be combined with gesture recognition.

A camera embedded in the headset can detect where your hands are in relation to the visual content and how far they are from your head. This is fundamental for “spatial computing,” which is how companies like Magic Leap and Microsoft refer to the next man/machine interface.

Voice commands or AI-powered personal assistants can also help you control what is displayed and make decisions. There’s also the likely inclusion of a controller as an input. This could take the form of a little mouse in your hand, very similar to the hand controllers that some virtual reality (VR) headsets use.

Eye-tracking or gazing will also be a factor. Say I’m in a meeting. I can’t really use my hands in front of my face, and I can’t really talk. So eye-tracking or gazing could be another way to navigate content without being noticeable to anyone else. This input will be critical in circumstances when neither voice nor using your hands is an option.

So those are the four primary inputs: Eye-tracking (gazing), controllers, voice, and gesture recognition. The question still remains, what input is going to lead to what in terms of UI and UX? We don’t have the answers yet but I believe it’s going to be an amalgamation of all of those inputs. And the combination will have to be contextual.

Here’s what I mean. Say I put my smart glasses on in the morning just before I start driving. The headset will have motion sensors, such as a gyro, and it’ll know that it’s in motion. Once I reach a speed of more than a few miles per hour, I will no longer be able to use gesture recognition.

But I will be able to use voice recognition, and I’ll be able to use eye-tracking. So the device itself has to be smart enough to know where I am and what I’m doing, sort of like what some smartphones can do now, such as disabling potentially distracting features when you are moving in a car.
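To make the idea of contextual inputs a little more concrete, here is a minimal Python sketch of how a headset might decide which of the four inputs to accept. It is only an illustration under stated assumptions: the names (`Input`, `Context`, `allowed_inputs`), the 3 mph threshold, the `in_meeting` flag, and the choice to switch off the controller while driving are all hypothetical and do not come from any real device or SDK.

```python
from dataclasses import dataclass
from enum import Enum, auto


class Input(Enum):
    """The four primary inputs discussed above."""
    EYE_TRACKING = auto()
    CONTROLLER = auto()
    VOICE = auto()
    GESTURE = auto()


# Assumption: "a few miles per hour" is taken to mean 3 mph here.
DRIVING_SPEED_MPH = 3.0


@dataclass
class Context:
    """Hypothetical snapshot of what the headset knows about the wearer."""
    speed_mph: float = 0.0    # e.g. estimated from the headset's motion sensors
    in_meeting: bool = False  # e.g. inferred from a calendar (assumption)


def allowed_inputs(ctx: Context) -> set[Input]:
    """Return the inputs the device should accept in the given context."""
    allowed = set(Input)
    if ctx.speed_mph > DRIVING_SPEED_MPH:
        # Moving in a car: no gesture recognition. Dropping the handheld
        # controller as well is an assumption of this sketch.
        allowed -= {Input.GESTURE, Input.CONTROLLER}
    if ctx.in_meeting:
        # In a meeting: no waving hands in front of the face, no talking.
        allowed -= {Input.GESTURE, Input.VOICE}
    return allowed


if __name__ == "__main__":
    driving = Context(speed_mph=30.0)
    print(sorted(i.name for i in allowed_inputs(driving)))
```

In this sketch, driving leaves only voice and eye-tracking available, which matches the scenario above. A real product would need far richer context than a speed reading and a single flag.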

How much is too much?

It is imperative not to overdo the amount of digital content you throw at a user, even in a benign setting, and all the more so while he or she is driving. It is a dilemma that has to be solved no matter what. Many people believe a Waze-like application will be one of the first for AR eyeglasses. It makes sense and people should be excited about it. But it is going to take a very lightweight version of that kind of application.

But it is possible. You can have a very, very translucent graphic interface, like a blue line, that follows the road. And then in the corners of the glasses, it can show your speed or maybe the time until you arrive at your destination. But nothing else. No little cartoon characters. No points. Very minimalistic.

But there is always a danger in overdoing it. For the average person, the cognitive load of using AR glasses may prove too heavy. Users will always prefer less, not more. They want to keep their view of the real world, and they want only the information they absolutely need, when they need it. It won’t work to overwhelm people by throwing too much digital content and too many distractions into their view.

How cool will it be when it happens?

So there are some challenges in fitting augmented reality into an eyewear device, and reworking the interaction between man and machine is only part of the challenge.

First, you have to get people to pay for and wear the glasses. How do you price them? How do you provide enough value that someone who just had laser eye surgery might still wear glasses? What’s too much input, or too much content? What’s the right amount? These are the considerations industry insiders are obsessing over. But when it’s all figured out, it will be a major game changer. You are looking at a cultural shift that will dwarf even the autonomous automobile.
