If Alexa understood your needs and anticipated your decisions, would you love it or would it annoy the crap out of you?

Sophia Yeres of Huge, a Brooklyn-based digital marketing agency, presented a talk at SXSW called “The Risks of the Quantified Mind.” She spoke about the future of voice-driven interfaces and the potential of voice-first devices, such as Google Home and Amazon Alexa, to evolve into emotionally connected, self-prioritizing personal assistants.

Since the word “Risks” is right in the title of the talk, it’s understandable that it covered the potentially negative outcomes of intelligent, voice-driven digital assistants that try to predict what you will need to decide from one minute to the next.

The primary downside discussed was the limitation or outright removal of choice and discovery from the equation. Yeres pointed out that variety of discovery has been linked to better memory retention and cognitive function. People who have a wide variety of experiences have more positive memories and are less likely to remember bad ones.

For example, suppose future-Siri knows what music you like and presents a list of music for you based on your known listening patterns, much like Spotify. Yes, you’ll have a list of music you are most likely to enjoy, but you’ll miss the opportunity to organically discover new music you like outside those spheres. Now extend that to everything in your life, from entertainment to travel patterns to food.

Yeres also pointed out that humans are inherently dynamic, while computers and data are precise. What you like and prefer one day may not be what you want the next, and it’s difficult for a computer to anticipate and plan for that.

It wasn’t all negative.

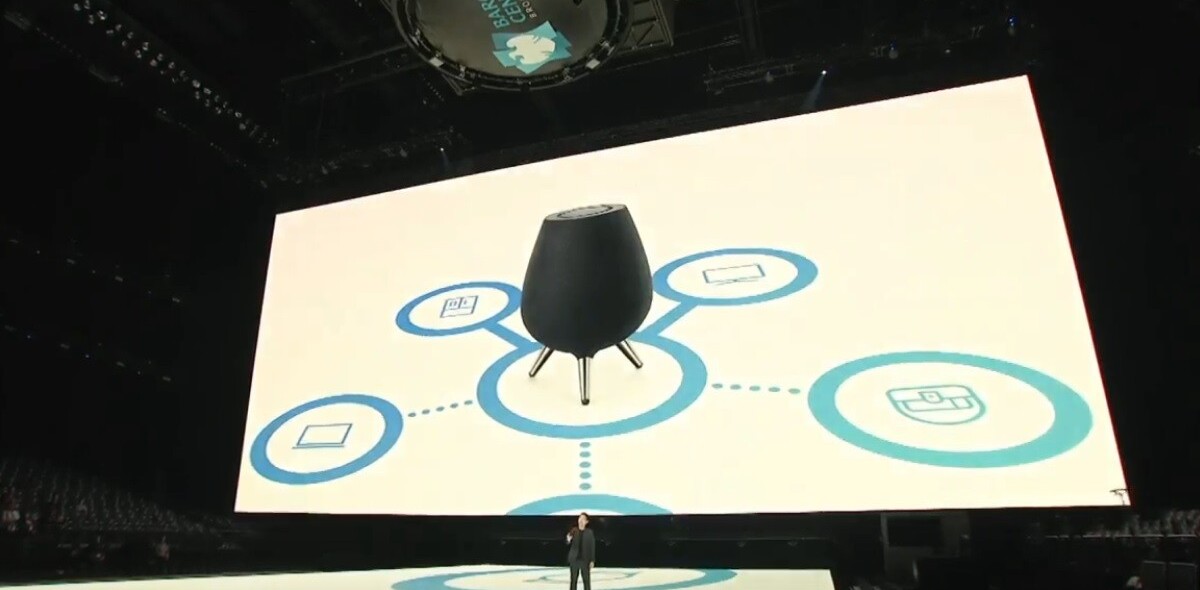

Yeres described a future where humans can talk to their computers without needing to translate what they say into a language the computer will understand. Through better hardware and deeper data sets of human speech, voice-driven interfaces will eventually become interface- and screen-free personal assistants capable of understanding our emotional needs as easily as our speech.

Yeres hopes that voice-driven interfaces will eventually evolve to the point where human choice and discovery are part of the makeup of the personal assistant.
