Artificial intelligence is as important to modern society as electricity, indoor plumbing, and the internet. In short, it would be extremely difficult to live without it now.

But it’s also, arguably, the most overhyped and misrepresented technology in history, and if you leave cryptocurrency out of the argument, there’s no debate.

We’ve been told that AI can (or soon will) predict crimes, drive vehicles without a human backup, and determine the best candidate for a job.

We’ve been warned that AI will replace doctors, lawyers, writers, and anyone working outside a computer-related field.

Yet none of these fantasies has come to fruition. As for predictive policing, hiring AI, and other systems that purport to use machine learning to glean insights into the human condition, they’re BS, and they’re dangerous.

AI cannot do anything a human can’t, nor can it do most things a human can.

For example, predictive policing supposedly uses historical data to forecast where crime is likely to occur, so that police can decide where their presence is needed.

But the assumptions driving such systems are faulty at their core. Trying to predict crime density over geography by using arrest data is like trying to determine how a chef’s food might taste by looking at their headshots.

It’s the same with hiring AI. The question we ask is “who is the best candidate,” but these systems have no way of actually determining that.

That might be hard to digest. There are tens of thousands of legitimate businesses selling AI software, and a significant portion of them are pushing BS.

So what makes us right and them wrong? Well, let’s take a look at some examples so we can figure out how to separate the wheat from the chaff.

Hiring AI is a good place to start. There is no formula for hiring the perfect employee. These systems do one of two things. Either they take the same data available to humans and find candidates whose files most closely match those of people who’ve succeeded in the past (thus perpetuating any existing or historical problems in the hiring process and defeating the point of the AI), or they use unrelated data such as “emotion detection” or similar pseudoscience-based quackery to do the same feckless thing.
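To make the first failure mode concrete, here’s a minimal, hypothetical sketch of similarity-based ranking: each candidate is scored by cosine similarity to past “successful” hires. The feature names, the data, and the scoring rule are all assumptions for illustration, not any vendor’s actual method.

```python
from math import sqrt


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = sqrt(sum(x * x for x in a)) * sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def score(candidate: list[float], past_hires: list[list[float]]) -> float:
    """Rate a candidate by their closest match among past 'successful' hires."""
    return max(cosine(candidate, hire) for hire in past_hires)


# Hypothetical features: [has_required_skill, attended_school_a, employee_referral]
# Historically, every successful hire came from school A.
past_hires = [[1, 1, 1], [1, 1, 0], [1, 1, 1]]

candidates = {
    "alice": [1, 0, 0],  # has the skill, different school, no referral
    "bob": [1, 1, 1],    # a carbon copy of past hires
    "carol": [0, 1, 1],  # lacks the skill, but "looks like" past hires
}

# Highest similarity to past hires first.
ranking = sorted(candidates, key=lambda name: score(candidates[name], past_hires), reverse=True)
for name in ranking:
    print(name, round(score(candidates[name], past_hires), 3))
```

Carol, who lacks the one skill that matters, outranks Alice simply because she resembles the people the company already hired. The math is fine; it’s faithfully reproducing the history it was fed.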

The bottom line is that AI can’t determine more about a candidate than a human can. At best, businesses using hiring AI are being swindled. At worst, they’re intentionally using systems they know to be anti-diversity mechanisms.

The simplest way to determine whether AI is BS is to understand what problem it’s attempting to solve. Then you just need to determine whether that problem can be solved by moving data around.

Can AI determine recidivism rates among former felons? Yes. It can take the same data a human would use and work out what percentage of former inmates are likely to commit crimes again.

But it cannot determine which humans are likely to commit crimes again because that would require magical psychic powers.
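The difference between a group rate and an individual prediction shows up even in a toy example. This is a hypothetical sketch with invented records and a single invented feature (a bucket of prior offenses): it computes the reoffense rate for each group, and that rate is the only number it can ever hand back about a person.

```python
from collections import defaultdict

# Invented records for illustration: (prior_offenses_bucket, reoffended)
records = [
    ("0-1", False), ("0-1", True), ("0-1", False), ("0-1", False),
    ("2+", True), ("2+", False), ("2+", True),
]


def group_rates(records: list[tuple[str, bool]]) -> dict[str, float]:
    """Fraction of people in each group who reoffended."""
    totals: dict[str, int] = defaultdict(int)
    reoffenders: dict[str, int] = defaultdict(int)
    for group, reoffended in records:
        totals[group] += 1
        reoffenders[group] += int(reoffended)
    return {group: reoffenders[group] / totals[group] for group in totals}


rates = group_rates(records)


def predict(group: str) -> float:
    """An 'individual' prediction is just the group rate, identical for everyone in it."""
    return rates[group]


print(rates)
print(predict("2+"))
```

Everyone in the “2+” bucket gets the same score, even though, in these very records, some of them reoffended and one didn’t. The percentage is real; which person it applies to is exactly what the data can’t say.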

Can AI predict crime? Sure, but only in a closed system where ground-truth crime data is available. In other words, we’d need to know about all the crimes that happen without police involvement, not just the tiny percentage where someone actually got caught.
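The gap between recorded arrests and actual crime gets locked in when patrols follow the data. Here’s a deliberately simplified, hypothetical simulation: two districts with identical true crime, patrols allocated in proportion to the previous round’s arrests, and arrests proportional to true crime times patrol presence. The detection rate and the starting split are arbitrary assumptions.

```python
def simulate(true_crime: list[float], patrol_share: list[float],
             rounds: int, detection_rate: float = 0.5):
    """Reallocate patrols each round in proportion to last round's recorded arrests."""
    arrests = [0.0] * len(true_crime)
    for _ in range(rounds):
        # Police only record crime where they are: arrests scale with patrol presence.
        arrests = [crime * share * detection_rate
                   for crime, share in zip(true_crime, patrol_share)]
        total = sum(arrests)
        # The "predictive" step: send patrols wherever the arrests were.
        patrol_share = [a / total for a in arrests]
    return arrests, patrol_share


# Both districts have exactly the same amount of crime...
true_crime = [100.0, 100.0]
# ...but district A starts out with more patrols.
arrests, shares = simulate(true_crime, [0.6, 0.4], rounds=20)

print("recorded arrests:", [round(a, 1) for a in arrests])
print("patrol share:", [round(s, 2) for s in shares])
print("crime never recorded:", round(sum(true_crime) - sum(arrests), 1))
```

After twenty rounds the data still “shows” district A with 50% more crime than district B, even though the two are identical, and three quarters of the crime in this toy setup never appears in the data at all. The system never corrects the starting bias because it only sees what the patrols recorded.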

But what about self-driving cars, robot surgeons, and replacing writers?

These are all strictly within the domain of future tech. Self-driving cars are exactly as close today as they were in 2014, when deep learning really started to take off.

We’re in a lingering state of being “a couple of years away” from level 5 autonomy that could go on for decades.

And that’s because AI isn’t the right solution, at least not the real AI that exists today. If we truly want cars to drive themselves, we need a digital rail system within which to constrain the vehicle and ensure all other vehicles in proximity operate together.

In other words: people are too chaotic for a rules-based learner (AI) to adapt to using only sensors and modern machine learning techniques.

Once again, asking AI to drive a car safely in today’s typical traffic environments amounts to giving it a task that most humans can’t complete. What is a good driver? Someone who is never at fault for an accident the entire time they drive?

This is also why lawyers and writers won’t be replaced any time soon. AI can’t explain why a crime against a defenseless child might merit harsher punishment than one against an adult. And it certainly can’t do with words what Herman Melville or Emily Dickinson did.

Where we do find AI that isn’t BS, it’s almost always performing a task so boring that, despite its value, it would be a waste of a human’s time.

Take Spotify or Netflix, for example. Both companies could hire humans to write down what every user listens to or watches and then organize all that data into informative piles. But there are hundreds of millions of subscribers involved. It would take humans thousands of years to sort through the data from a single day’s sitewide usage. So they build AI systems to do it faster.
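The unglamorous core of that work is aggregation. A minimal sketch, assuming a hypothetical event log of (user, genre) pairs: it tallies each user’s plays into per-genre piles, the kind of bookkeeping that’s trivial for a machine and mind-numbing for a person. Real recommendation systems do far more than this; the event format and IDs here are invented.

```python
from collections import Counter, defaultdict

# Hypothetical play-event log: (user_id, genre)
events = [
    ("u1", "jazz"), ("u2", "metal"), ("u1", "jazz"),
    ("u1", "pop"), ("u2", "metal"),
]


def taste_profiles(events: list[tuple[str, str]]) -> dict[str, Counter]:
    """Sort every play event into a per-user pile of genre counts."""
    profiles: dict[str, Counter] = defaultdict(Counter)
    for user, genre in events:
        profiles[user][genre] += 1
    return profiles


profiles = taste_profiles(events)
for user, counts in sorted(profiles.items()):
    # Each user's most-played genre.
    print(user, counts.most_common(1))
```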

It’s the same with medical AI. AI that uses image recognition to spot anomalies, or that performs microsurgery, is incredibly important to the medical community. But these systems are only capable of very specific, narrow tasks. The idea of a robotic general surgeon is BS. We’re nowhere close to a machine that can perform a standard vasectomy, sterilize itself, and then perform arthroscopic knee surgery.

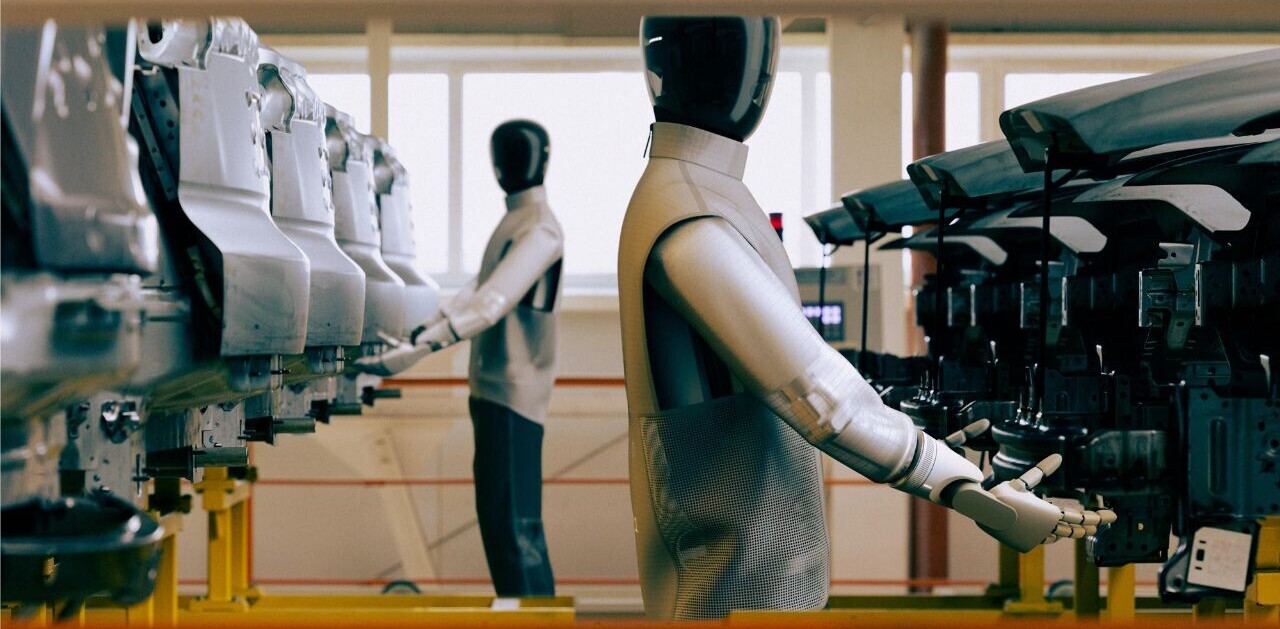

We’re also nowhere close to a machine that can walk into your house and make you a cup of coffee.

Other AI BS to be leery of:

Fake news detectors. Not only do these not work, but even if they did, what difference would it make? AI can’t determine facts in real time, so these systems either search for human-curated keywords and phrases or simply compare the website publishing the questionable article to a human-curated list of bad news actors. Furthermore, detecting fake news isn’t the problem. Much like pornography, most of us know it when we see it. Unlike porn, however, nobody in big tech or the publishing industry seems interested in censoring fake news.
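Here’s what the list-lookup approach amounts to, as a minimal hypothetical sketch. The domains are placeholders and the list is assumed to be human-curated, as described above; note that the function never reads the article at all.

```python
from urllib.parse import urlparse

# Hypothetical, human-curated list of "bad news actors" (placeholder domains).
FLAGGED_DOMAINS = {"totally-real-news.example", "clickbait.example"}


def looks_fake(article_url: str) -> bool:
    """Flag an article purely by who published it; the content is never examined."""
    host = (urlparse(article_url).hostname or "").removeprefix("www.")
    return host in FLAGGED_DOMAINS


print(looks_fake("https://www.clickbait.example/moon-made-of-cheese"))  # True
print(looks_fake("https://reputable.example/moon-made-of-cheese"))      # False
```

The same false claim gets a different verdict depending only on the domain. The “AI” is a lookup table someone else filled in.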

Gaydar: we won’t rehash this, but AI cannot determine anything about human sexuality using image recognition. In fact, all facial recognition software is BS, with the sole caveat being localized systems trained specifically on the faces they’re meant to detect. Systems trained to detect faces in the wild against mass datasets, especially those associated with criminal activity, are inherently biased to the point of being faulty at conception.

Basically, be wary of any AI system purported to judge or rank humans against datasets. AI has no insight into the human condition, and that’s unlikely to change any time in the near future.
