An advanced artificial intelligence created by OpenAI, a company founded by genius billionaire Elon Musk, recently penned an op-ed for The Guardian that was so convincingly human many readers were astounded and frightened. And, ew. Just writing that sentence made me feel like a terrible journalist.

That’s a really crappy way to start an article about artificial intelligence. The statement contains only trace amounts of truth and is intended to shock you into thinking that what follows will be filled with amazing revelations about a new era of technological wonder.

Here’s what the lede sentence of an article about the GPT-3 op-ed should look like, as Neural writer Thomas Macaulay handled it earlier this week:

The Guardian today published an article purportedly written “entirely” by GPT-3, OpenAI’s vaunted language generator. But the small print reveals the claims aren’t all that they seem.

There appears to be a giant gap between what even the most ‘advanced’ AI systems can actually do and what the average, intelligent adult who doesn’t work directly in the field believes they can do.

Technology journalists and AI bloggers bear our fair share of the blame, but this is neither a new issue nor an unaddressed one.


In 2018, another reporter for The Guardian, this one a human named Oscar Schwartz, published an article titled “The discourse is unhinged: how the media gets AI alarmingly wrong.” In it, Schwartz discusses a slew of headlines from the previous year proclaiming that Facebook AI researchers had to pull the plug on an experiment after a natural language processing system created its own negotiation language.

Articles surrounding the controversy painted a picture of an out-of-control AI with capabilities beyond its developers’ intentions. In truth, the developers found the results interesting but were by no means surprised or shocked.

So what gives? Why are we sitting here two years later dealing with the same thing again?

Why

Media hype plays its part, but there’s more. Genevieve Bell, a professor of engineering and computer science at the Australian National University, was quoted in the piece Schwartz wrote as saying:

We’ve told stories about inanimate things coming to life for thousands of years, and these narratives influence how we interpret what is going on now. Experts can be really quick to dismiss how their research makes people feel, but these utopian hopes and dystopian fears have to be part of the conversations. Hype is ultimately a cultural expression that has its own important place in the discourse.

Ultimately we want to believe that the catalyst for our far-future technological aspirations could manifest as an unknown side-effect of something innocuous. It might not be rational to believe that an AI system designed to string together letters in a manner consistent with language processing is going to suddenly become sentient on its own, but it sure is fun.

The movies always have a line about human hubris and how we never saw it coming. In reality, though, we’re straining our eyeballs looking for any sign of hype we can push out. And there’s more to it than that.

It would appear the threat of an AI winter has passed, but VCs and startups are still making a mint on AI applications that are nothing but hype, such as systems purported to predict crime or whether a job candidate will be a good fit. And as long as there are “experts” willing to opine that these systems can do things they’re demonstrably not capable of, the general public perception of what AI can and can’t do will be muddied at best.

The myths and the realities

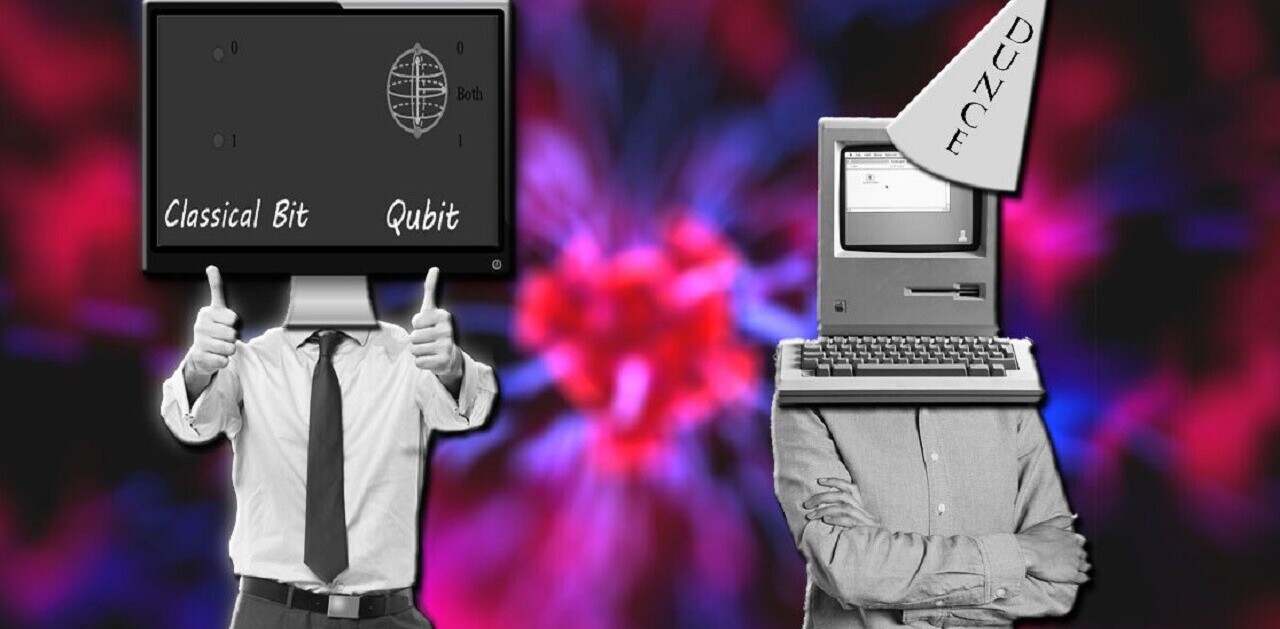

It’s arguable that the general public fundamentally misunderstands modern AI, but the most common misconceptions involve artificial general intelligence (AGI) and the AI humans interact with most.

We’ll start with AGI. Here’s the ground truth: there exists no sentient, human-level, conscious, or self-aware AI system. Nobody is close. The myth is that systems like GPT-3 or Facebook’s language processing algorithms are able to teach themselves new capabilities from raw data. That’s incorrect and borderline nonsensical.

And we can demonstrate this by breaking down some of the most common myths people hold about the narrow AI they use every day or see in hyperbolic headlines.

Myth: Facebook’s AI knows everything about you.

Reality: People believe this because everyone’s got an anecdote about a time they said something out loud to another person while their phone was locked or in their pocket, and the next time they checked their feed there was an advertisement for exactly what they’d been talking about. The truth is that these are coincidences.

Bust it: Research a product category you’d never actually purchase across several of your devices. As a cis male, for example, I can research nursing bras and pregnancy tests on my laptop and smartphone, and I know I’ll see ads for products related to being “with child” across Chrome, Facebook, and numerous other platforms for a couple of weeks.

What this tells you is that the algorithm isn’t “predicting” or “thinking”; it’s just following your footsteps. In this case, AI’s value is that it can track your individual habits with a simple keyword-matching algorithm.

Humans could do it better, but it would be a stupid business model to hire a person to spend their entire day watching what you do online so they can decide what ads to show you. Facebook CEO Mark Zuckerberg would need to employ half the planet as ad-servers so that the other half could get served. AI is infinitely more efficient at such a simple, mindless task.
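For readers who want to see how little “thinking” is involved, here is a toy sketch of keyword-matching ad selection in Python. The ad inventory, its keywords, and the `pick_ads` function are hypothetical illustrations, not how Facebook, Google, or any real ad platform actually works; real systems use many more signals. The point is only that matching your recent searches against ad keywords is enough to reproduce the nursing-bra experiment.

```python
from collections import Counter

# Hypothetical ad inventory: each ad is tagged with a handful of keywords.
AD_INVENTORY = {
    "maternity-wear": {"nursing", "bras", "maternity"},
    "baby-essentials": {"pregnancy", "baby", "nursery"},
    "running-shoes": {"running", "marathon", "shoes"},
}


def tokenize(query: str) -> set[str]:
    """Lowercase a search query and split it into individual words."""
    return set(query.lower().split())


def pick_ads(search_history: list[str], top_n: int = 2) -> list[str]:
    """Rank ads by how many of their keywords appear in recent searches.

    No prediction or understanding happens here: an ad scores a point for
    every keyword it shares with the words the user has typed.
    """
    seen_words: set[str] = set()
    for query in search_history:
        seen_words |= tokenize(query)

    scores: Counter = Counter()
    for ad, keywords in AD_INVENTORY.items():
        overlap = len(keywords & seen_words)
        if overlap:
            scores[ad] = overlap
    return [ad for ad, _ in scores.most_common(top_n)]


if __name__ == "__main__":
    # Someone researching products they'll never buy still "looks" like a customer.
    print(pick_ads(["nursing bras", "pregnancy tests"]))
```

Searching for nursing bras and pregnancy tests surfaces the maternity and baby ads, while an empty history surfaces nothing: the system only ever reflects what you typed back at you.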

Myth: GPT-3 understands language and can write an article as well as a human.

Reality: It absolutely does not and cannot. Everything is parameters and data to the system. It doesn’t understand the difference between a dog and a cat or a person and an AI. What it does is imitate human language. You can teach a parrot to say “I love Mozart,” but that doesn’t mean it understands what it’s saying. AI is the same.

Bust it: GPT-3’s most amazing results are cherry-picked, meaning the people showing them off make multiple attempts and go with the ones that look best. That’s not to say GPT-3’s trickery isn’t impressive, but once you realize the model is drawing on 175 billion parameters, the novelty wears off pretty quickly.
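The effect of cherry-picking is easy to simulate. In this minimal Python sketch, `generate_output` is a hypothetical stand-in for one run of a text generator that simply returns a random “quality” score between 0 and 1; no real model or OpenAI API is involved. Publishing only the best of many attempts makes a demo look far better than a typical run.

```python
import random
import statistics


def generate_output(rng: random.Random) -> float:
    """Hypothetical stand-in for one generation: a random 'quality' score in [0, 1)."""
    return rng.random()


def cherry_pick(n_attempts: int, seed: int = 0) -> tuple[float, float]:
    """Run the generator n_attempts times.

    Returns (average quality across all attempts, quality of the single best
    attempt), i.e. what a typical run looks like versus what gets published.
    """
    if n_attempts < 1:
        raise ValueError("need at least one attempt")
    rng = random.Random(seed)
    scores = [generate_output(rng) for _ in range(n_attempts)]
    return statistics.mean(scores), max(scores)


if __name__ == "__main__":
    for n in (1, 10, 100):
        typical, best = cherry_pick(n)
        print(f"{n:>3} attempts: typical {typical:.2f}, showcased {best:.2f}")
```

Because the seed is fixed, each larger run contains the smaller run’s outputs, so the showcased score can only rise as attempts increase, while the typical score stays near the middle of the range.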

no. GPT-3 fundamentally does not understand the world that it talks about. Increasing corpus further will allow it to generate a more credible pastiche but not fix its fundamental lack of comprehension of the world. Demos of GPT-4 will still require human cherry picking. https://t.co/6vl3ettSZk

— Gary Marcus (@GaryMarcus) August 2, 2020

Think about it this way: if you’re thinking of a number and I try to guess it, the more guesses I get (the more parameters telling me whether my output is right or wrong), the better the odds that I’ll eventually guess correctly. That doesn’t make me psychic; it’s called brute-force intelligence, and it’s what GPT-3 does. That’s why it takes a supercomputer to train the model: we’re just throwing muscle at it.
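The number-guessing analogy can be written out as a toy Python sketch. The functions below are hypothetical illustrations of trial and error with right-or-wrong feedback; they are not a model of how GPT-3 is actually trained. Each wrong guess is simply discarded, so the odds of success climb steadily with the number of guesses allowed, without any insight into what the secret number is.

```python
import random


def guesses_needed(secret: int, low: int = 1, high: int = 100, seed: int = 0) -> int:
    """Guess numbers in a random order, never repeating a wrong guess.

    The only feedback is "right" or "wrong". Returns how many guesses it took
    to hit the secret number.
    """
    if not low <= secret <= high:
        raise ValueError("secret is outside the guessing range")
    rng = random.Random(seed)
    candidates = list(range(low, high + 1))
    rng.shuffle(candidates)
    for attempt, guess in enumerate(candidates, start=1):
        if guess == secret:  # feedback: right
            return attempt
        # feedback: wrong, so move on to the next untried number
    raise AssertionError("unreachable: every candidate was tried")


def odds_of_success(max_guesses: int, low: int = 1, high: int = 100) -> float:
    """Probability of hitting a uniformly random secret within max_guesses
    distinct guesses."""
    pool = high - low + 1
    return min(max(max_guesses, 0), pool) / pool


if __name__ == "__main__":
    for k in (1, 10, 50, 100):
        print(f"{k:>3} guesses: {odds_of_success(k):.0%} chance of being right")
```

Never repeating a wrong guess is what makes the odds grow in direct proportion to the number of guesses: given enough attempts, success is guaranteed, and no understanding was ever required.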

Most AI works the same way. There are future-facing schools of thought, such as the field of symbolic AI, that believe we will be able to produce more intuitive AI one day. But the reality of AI in 2020 is that it’s no closer to general intelligence than a calculator is. When that changes, it’s almost certain to be an intentional endeavor, not the result of an experiment gone unexpectedly right or wrong.

The singularity won’t be an accident

When companies like OpenAI and DeepMind say they’re working towards AGI, that isn’t to say GPT-3 or DeepMind’s chess-playing system are examples of that endeavor. We’re not talking about drawing a straight line from one technology to the future, as we can between the Wright brothers’ famous manned aircraft and today’s modern jets. It’s more like inventing the wheel on the way to eventually building a spaceship.

At the end of the day, modern AI is amazing in its ability to replace mindless human labor with automation. It can sort through 50 million images in a tiny fraction of the time it would take a human. But it isn’t very good at these tasks compared with a human who has enough time to accomplish the same goal. It’s just efficient enough to be valuable.

Where the rubber hits the road – developing Level 5 autonomous vehicles, for example – AI simply isn’t capable enough to replace humans when efficiency isn’t the ultimate goal.

Nobody can say how long it’ll be until the myths about “superintelligent” AI are more closely aligned with reality. Perhaps there’ll be a “eureka” moment that proves the experts wrong. And maybe aliens will land on Earth and give us the technology in exchange for your aunt’s peach cobbler recipe.

More likely, however, we’ll achieve AGI in the exact same way we achieved the atomic bomb and the internet: through concentrated research and endeavor towards a realistic goal. And, based on where we are right now, that means we’re likely several decades away from an AI that can not only write an op-ed, but also understand it.

So you’re interested in AI? Then join our online event, TNW2020, where you’ll hear how artificial intelligence is transforming industries and businesses.
