Over the past year, I wrote about a bunch of companies working on voice synthesis technology. They were very much in the early stages of development, and only had some pre-made samples to show off. Now, researchers hailing from the Montreal Institute for Learning Algorithms at the Universite de Montreal have a tool you can try out for yourself.

It’s called Lyrebird, and the public beta requires just a minute’s worth of audio to generate a digital voice that sounds a lot like yours. The company say its tech can come in handy when you want to create a personalized voice assistant, a digital avatar for games, spoken-word content like audiobooks in your voice, for when you want to preserve the aural likeness of actors, or for when you just love the sound of your own voice and want to hear it all the time.

I decided to give it a go, and I have to admit that the results were spooky.

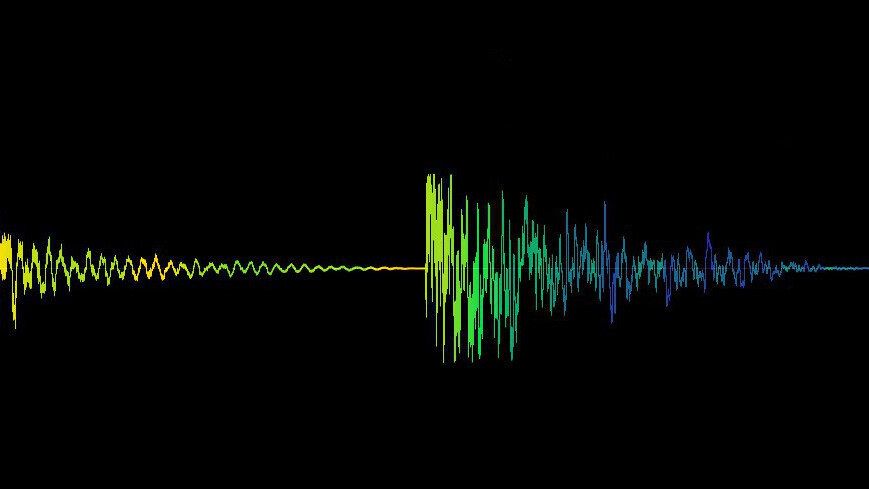

Here’s a clip I recorded to train the system:

And here’s a clip of my digital voice, reading out text that I typed into Lyrebird – using words I didn’t record while training the system:

Right, it’s time to unplug everything, chuck my phone, don a tinfoil hat, and move to the woods.

It’s pretty eerie that a publicly available tool can mimic audio this well with such a small sample to learn from.

Granted, you can’t yet spoof just about anyone’s voice with Lyrebird’s front-facing app: you have to train the system by recording audio of sentences that appear on screen, so you can’t just upload a minute of Kim Jong -Un’s speech from a video clip and expect to generate a digital voice.

Plus, the generated audio may not hold up to close scrutiny, and you could certainly have audio forensics experts analyze and point out glitches and signs indicating that it was synthesized. But it could still be enough to mislead people for a while. For example, India has a major problem dealing with fake news and hoaxes spread via WhatsApp; it could be the perfect vehicle for spreading misinformation among the service’s 280 million users across the country.

It’s also worth noting that this is just the beginning for voice synthesis technology. Lyrebird says that the more audio samples it has, the better its digital voices will sound. Adobe is also working on Project VoCo, which could open the open up the possibility of editing recorded audio just as easily as you would copy and paste text in a document.

Lyrebird says that it only has society’s best interests at heart:

…we are making the technology available to anyone and we are introducing it incrementally so that society can adapt to it, leverage its positive aspects for good, while preventing potentially negative applications.

It also offers to analyze any audio you send its way to check if it’s authentic or if it’s been spoofed.

At the same time, the company also says it can generate high-quality digital voices of any person, provided you get their permission. It’s unclear as to how Lyrebird plans to validate that sort of authorization, and whether you’ll need to train the system as I did, or if you can simply record your target and send the company an audio file to work with.

Should you be scared? Maybe not just yet – but given how quickly technology is advancing, particularly in the field of machine learning, we might have a wholly different story for you tomorrow.

The other problem is that we don’t have a culture, habit, or easily available tools for analyzing spoofed audio. Without those, we’re susceptible to falling prey to scammers and those who seek to spread false information (see Russian government agencies meddling with the US presidential elections).

It’s hard to be sure about whether this means the web will soon be flooded with fake voice recordings. But the truth is that synthesized audio could easily be turned into another attack vector for malicious actors. That’s one more thing to worry about online that we’re not entirely prepared for.

Get the TNW newsletter

Get the most important tech news in your inbox each week.