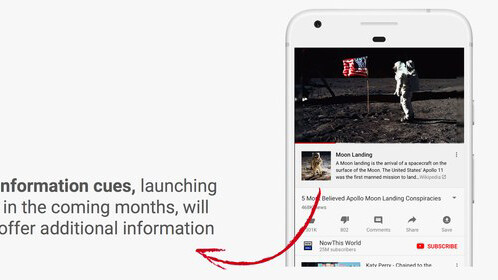

For years, YouTube has been plagued by videos that spread hoaxes, hateful speech, and all manner of misinformation. Its latest move to combat the problem is to display links to Wikipedia articles when you watch conspiracy theory videos, YouTube CEO Susan Wojcicki said at SXSW this week.

Wired noted that the new Information Cues feature would surface links to Wikipedia pages on videos about topics like chemtrails and whether humans have ever landed on the moon. YouTube will initially add these links to videos about common conspiracy theories circulating on the web.

Every single video on the YouTube trending page is fake. The videos are made by 'verified' YouTube creators, they garner millions of views and the sad reality is that Google is indirectly encouraging the promotion of hoax content with AdSense dollars. pic.twitter.com/EepDCylP6y

— Amit Agarwal (@labnol) March 1, 2018

That raises the question: is this the best YouTube can do? While Wikipedia is an incredible resource for information on the web, its content is contributed by the general public and can be manipulated to spread misinformation. Meanwhile, YouTube still isn't fully taking on the responsibility of censoring problematic videos on its platform, and its efforts to do so remain inconsistent at best.

Technologist Tom Coates points out that YouTube's new feature might also strain Wikipedia's team of volunteers by sending hordes of potential conspiracy theorists its way, some of whom may contest the encyclopedia's content or attempt to edit it:

The bad bit is that there’s a good chance that YouTube is sending millions of abusive conspiracy minded crazies in Wikipedia’s direction and just *hoping* that it’s resilient enough to withstand it. This is not a given!

— Tom Coates (@tomcoates) March 14, 2018

It's also worth noting that Google already uses Wikipedia to supplement its search results without paying for the privilege. YouTube's Information Cues works the same way, and it remains to be seen whether the Wikipedia community will suffer as a result. Here's Katherine Maher, executive director of the Wikimedia Foundation, which runs Wikipedia:

While we are thrilled to see people recognize the value of @Wikipedia’s non-commercial, volunteer model, we know the community’s work is already monetized without the commensurate or in-kind support that is critical to our sustainability. https://t.co/d8TdTTPdgp

— Katherine Maher (@krmaher) March 14, 2018

Ultimately, the Information Cues feature may turn out to be helpful, but it seems like a tiny part of the solution to a huge problem, and it hardly sounds like YouTube stepping up to the challenge of fighting misinformation. The problem affects users and creators across the globe, and the company needs to do more to fix it.

The Next Web's 2018 conference is just a few months away. Find out all about our tracks here.
