YouTube is in hot water again after it failed this week to catch a conspiracy theory video before it topped the Trending section — proving the company still doesn’t have a handle on its own algorithms, despite being raked over the coals for this multiple times in the past.

The video in question — which, in the interest of good taste, I’m not going to embed or link to — is about David Hogg, one of the survivors of the Parkland high school shooting rampage which happened last week. The video claims he is an actor because he was … somewhere other than Florida at some point in his life. It makes as much sense as it sounds.

It topped YouTube’s Trending section for several hours, over videos from official news sites. It was eventually removed by site moderators.

The last time YouTube faced scrutiny over its Trending section was last month, when shocked viewers saw that Logan Paul’s “Suicide Forest” video was posted prominently there. It was eventually removed by Paul himself, but the question as to how it got there remained.

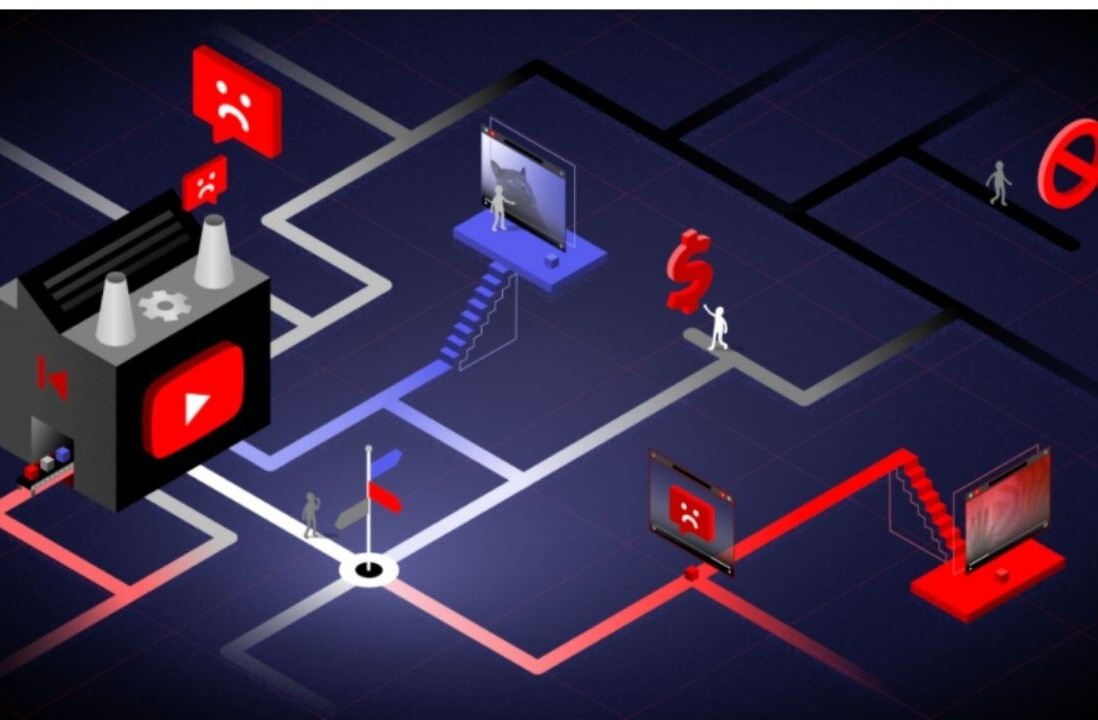

The Trending section is predominantly controlled by an algorithm, so it would be understandable if it picked up any video that happened to be getting a ton of views at a given moment. But the outline of the Trending section clearly says it avoids videos that are "misleading, clickbaity or sensational." The video about David Hogg would fall into at least one of those three categories, if not all of them.

YouTube’s apology, as reported by Motherboard, sheds some light on why the video was present, and why the algorithm may not be as selective as it sounds:

> This video should never have appeared in Trending. Because the video contained footage from an authoritative news source, our system misclassified it. As soon as we became aware of the video, we removed it from Trending and from YouTube for violating our policies. We are working to improve our systems moving forward.

So neither the title, subject matter, nor tags were enough to trip YouTube’s alarms — all the video maker had to do was include footage from a news source.

Susan Wojcicki, YouTube's CEO, promised last December to bring in more human moderators to make sure nothing slipped past the algorithm, but either the company hasn't hired them yet or they aren't catching videos like this one.

It’s forgivable for YouTube to miss bad videos among the rank and file — it’d be impossible to go through that many minutes of uploaded footage with a fine-tooth comb. But if any page needs to be more closely monitored by human eyeballs, it’s the page of videos YouTube is most actively promoting to its users.
