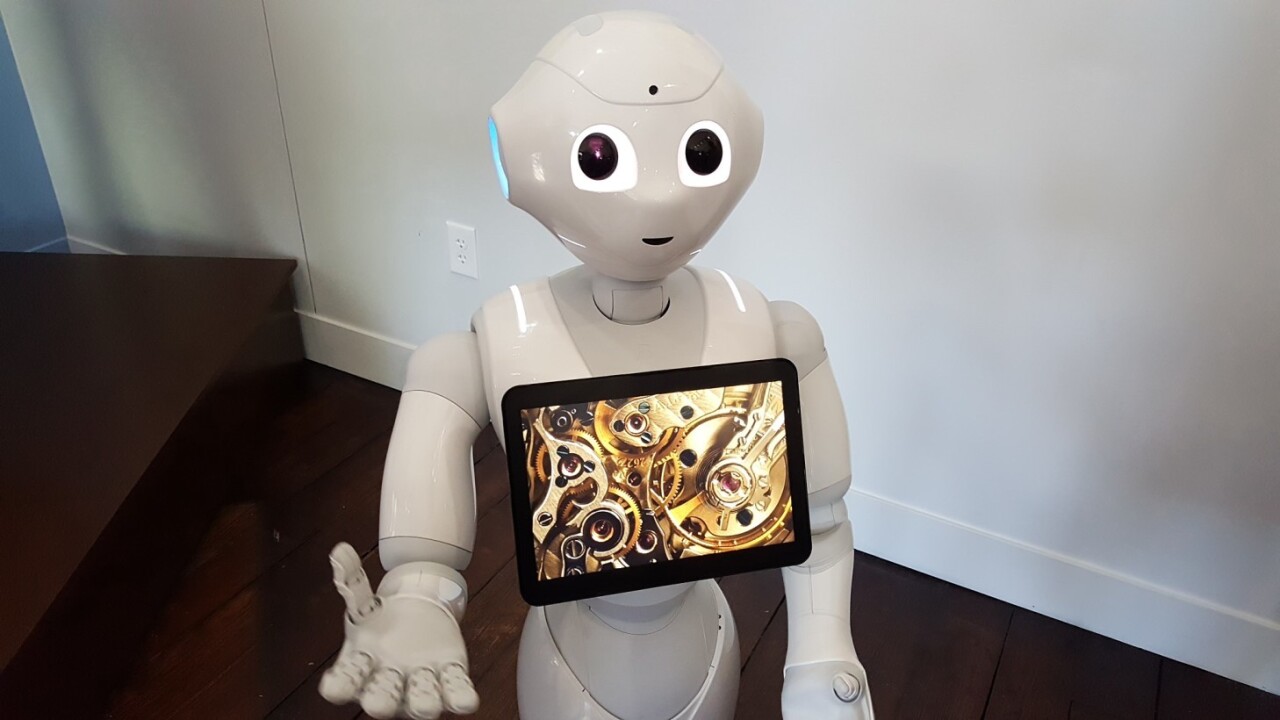

At the IBM Cognitive Studio at SXSW, the company demoed the Watson-powered emotion-reading robot, Pepper.

More than 7,000 robots have been deployed at Japanese stores, cruise ships and hotels, where they provide guest services and entertainment and advertise promotional events. This year, IBM and SoftBank want to start putting Pepper in retailers in the US and Europe, and are currently looking for software partners to build applications suited to those retailers’ needs.

We met up with Pepper for a brief demo, and put its emotion-reading skills to the test.

At its core, the demo version of Pepper is still quite basic: in one application, Pepper uses its camera to try to figure out how you’re feeling based solely on facial recognition technology. Most people start with a blank, neutral face, but as you interact with Pepper, it will figure out whether you’re smiling or pouting.

In my test, exaggerated emotions such as big smiles and Grumpy Cat-level frowns were easy for Pepper to detect. However, I was surprised to see that Pepper also recognized small facial changes, such as pursed lips when I was confused about what to do next, or a quick laugh. Pepper displays what it sees on a tablet attached to its chest, where you can follow the changes through a basic line graph and an accompanying emoticon.

Since the IBM Cognitive Studio was quite loud, Pepper wasn’t able to react to my ever-changing moods; instead, it mimicked what it thought it saw. When it detected I was ‘sad,’ Pepper slumped over and sank its head to the side, but a smile made Pepper cheery, and it waved its arms around. It was oddly comforting to see a robot try to empathize with your feelings.

Pepper takes tone of voice into consideration as well, reading a sharp shout as a negative emotion and a laugh as a positive one. I wasn’t able to test sarcasm, but that would be the ultimate test of whether it can truly differentiate intent in each person’s voice.

IBM also anticipates people will try to touch Pepper as part of their interaction, and worked with Aldebaran to program it to recognize kicks as anger and gentle, head-petting motions as happiness. So far, Pepper’s only been involved in one publicized abuse incident, in which a drunk man kicked Pepper over and was subsequently arrested.

Is it smarter than your ex-significant other at recognizing when you’re upset? Maybe not, but it certainly doesn’t seem to be any worse, at least from a purely physical standpoint. Emotions are complicated, and a robot that can recognize moods beyond the obvious smile or frown could be more than just a gimmick for retailers to invest in. It just needs the right applications to make it truly valuable.
