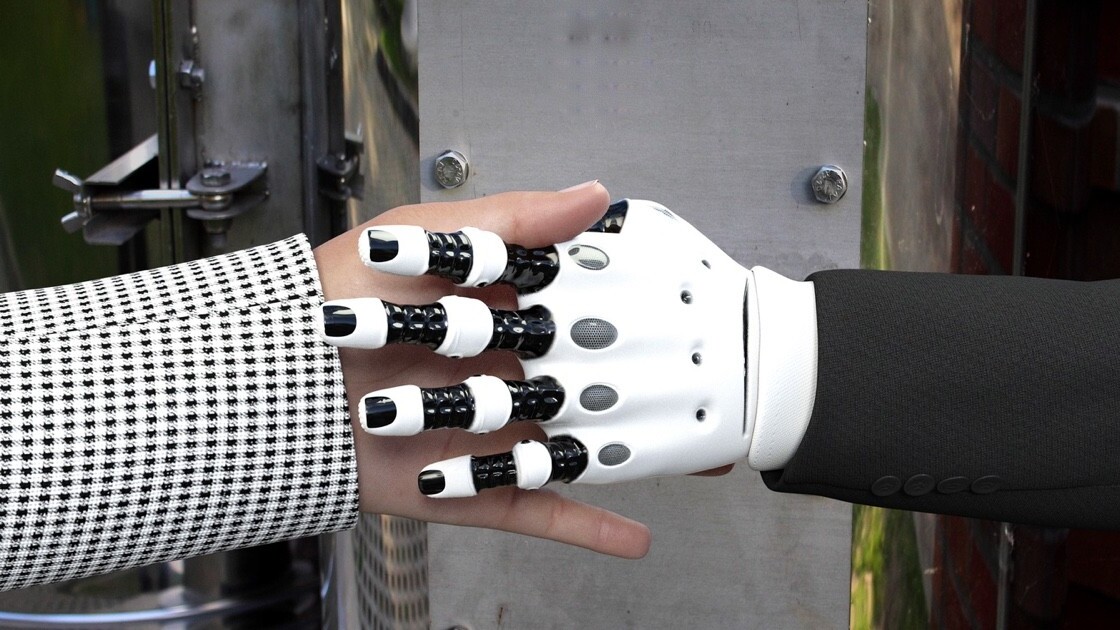

Researchers from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) have developed a new AI system that can predict how an object feels just by seeing it, and vice versa.

The AI can predict how it would feel to touch an object just by looking at it. It can also create a visual representation of an object solely from the tactile data gathered by touching it.

Yunzhu Li, a CSAIL PhD student and lead author of the paper describing the system, said the model can help robots handle real-world objects better:

> By looking at the scene, our model can imagine the feeling of touching a flat surface or a sharp edge. By blindly touching around, our model can predict the interaction with the environment purely from tactile feelings. Bringing these two senses together could empower the robot and reduce the data we might need for tasks involving manipulating and grasping objects.

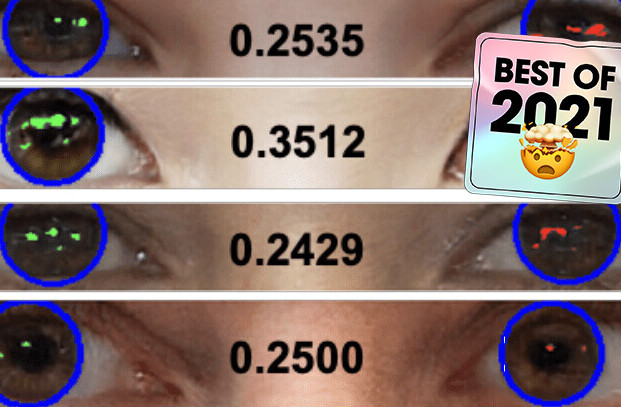

To train the model, the research team fitted a KUKA robot arm with a special tactile sensor called GelSight. The team had the arm touch 200 household objects 12,000 times, recording both the visual and tactile data. From those recordings, it built a dataset of 3 million visual-tactile images called VisGel.

Andrew Owens, a postdoctoral researcher at the University of California, Berkeley, said this research could help robots judge how firmly they should grip an object:

> This is the first method that can convincingly translate between visual and tactile signals. Methods like this have the potential to be very useful for robotics, where you need to answer questions like ‘is this object hard or soft?’, or ‘if I lift this mug by its handle, how good will my grip be?’ This is a very challenging problem, since the signals are so different, and this model has demonstrated great capability.

The researchers are presenting the paper at the Conference on Computer Vision and Pattern Recognition (CVPR) in the US this week.