Samsung’s research center at Moscow has developed a new AI that can create talking avatars of photos and paintings without using any 3D modeling.

A paper posted by the research team suggests that while traditionally researchers have used a large number of images to create a talking head model, a new technique can achieve it by few, or potentially even one image.

In a video, engineer Egor Zakharov, explains that although it’s possible to create a model through a single image, training it through multiple images “leads to higher realism and better identity preservation.”

Samsung said that the model creates three neural networks during the learning process. First, it creates an embedded network that links frames related to face landmarks with vectors. Then using that data, the system creates a generator network which maps landmarks into the synthesized videos. Finally, the discriminator network assesses the realism and pose of generated frames.

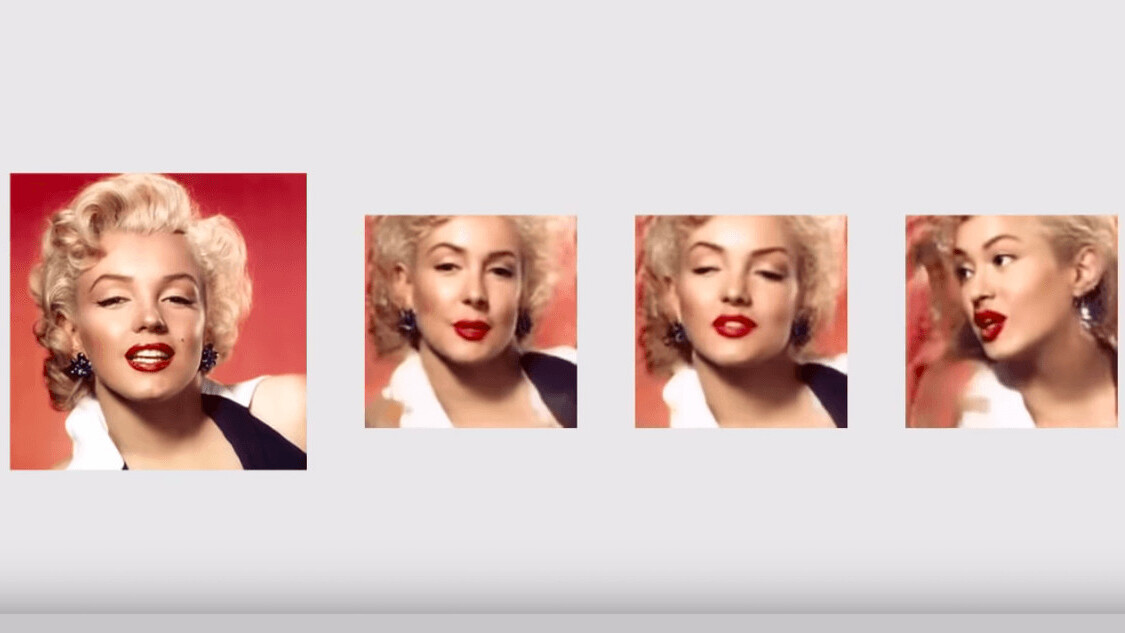

It even shows off animated headshots of well-known figures like Leonardo Da Vinci’s Mona Lisa, Albert Einstien, and Marilyn Monroe. To achieve that, the model referred to thousands of YouTube videos of celebrities talking (from VoxCeleb2 dataset) at the meta-learning stage. That helped it understand face landmarks and movements.

The paper says such ability has practical applications for telepresence, including video conferencing and multi-player games, as well as the special effects industry.

However, there’s a huge possibility that the model can be used to create deep fakes. We’ve seen in the past how deep fakes have been used to create impersonations of celebrities including fake porn videos.

Get the TNW newsletter

Get the most important tech news in your inbox each week.