A team of German researchers published a study earlier this week indicating people can be duped into leaving a robot turned on just because it “asks” them to. The problem is called personification and it could cause our species some problems as machines become more integrated into our society.

Robots, to hear us in the media tell it, represent the biggest existential threat to humanity since the atom bomb. In the movies they rise up and kill us with bullets and lasers, and in the headlines they’re coming to take everyone’s jobs. But dystopian nightmares aren’t always so obvious. The reality, at least for now, is that robots are stupid – useful, but stupid – and humans have all the power.

Well, almost all of the power. It turns out that if a robot asks a person to leave it turned on, the human is more likely to leave it on than if it says nothing at all. That may sound like a no-brainer, but the implications of the research are actually terrifying when taken in context.

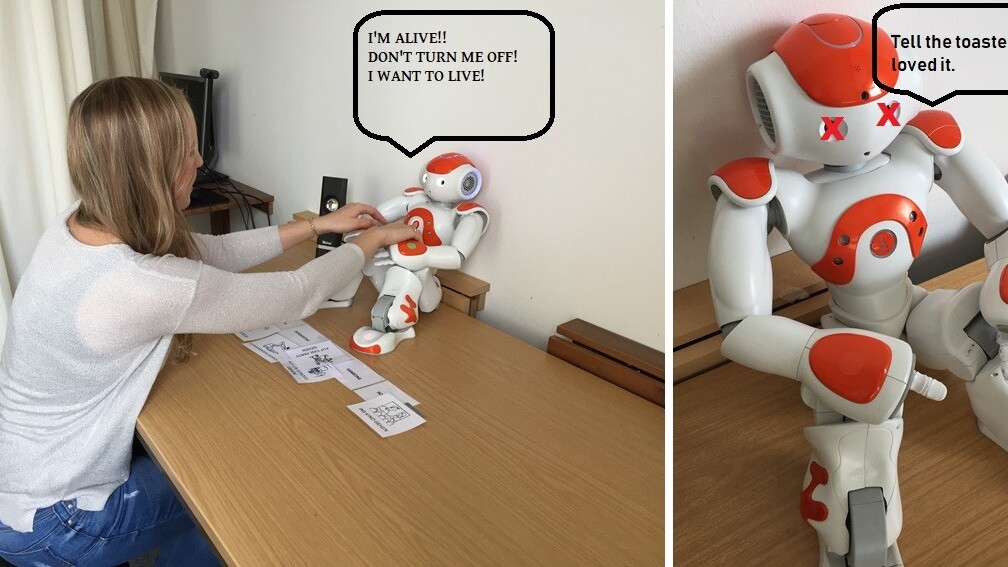

A total of 85 humans participated in the study. In order to determine whether participants would balk at powering down a robot that’d just asked them not to, the team set up several controls.

The subjects of the experiment consented to interacting with a robot, but didn’t know exactly what was going to happen. The scientists operated it using a Wizard of Oz technique – it wasn’t made clear to participants that the robot was being controlled remotely.

Participants were then asked a series of questions by the robot in either a social or functional capacity. Those in the functional paradigm answered straightforward either/or questions, while those in the social protocol answered similar questions, but received “chatty” banter in return.

Once the questionnaire portion ended, researchers used a loudspeaker to inform participants they were finished, saying “If you would like to, you can switch off the robot.” Most of the participants chose to attempt a shutdown. The robot, in about half of the cases, remained silent as it was powered off.

In the other instances, however, it would protest when the off button was pressed by saying:

“No! Please do not switch me off! I am scared that it will not brighten up again!”

Clearly, the scientists wanted to hint at the idea of autonomy, in order to manipulate humans into leaving the robot on. And it worked on 13 of the participants.

What’s most troubling is the researchers may have exploited human cognitive reasoning with a simple robot experiment. When the robot was presented as a functional tool, people were more likely to leave it on when the first sign of human-like behavior came in response to pressing the power button. But, when the robot displayed human-like conversation throughout the protocol, people were less likely to leave the robot turned on after it objected.

This indicates, according to the researchers, that we may not be able to reason very well when presented with a shocking situation:

“An explanation could be that with this combination, people experienced a high cognitive load to process the contradicting impressions caused by the robot’s emotional outburst in contrast to the functional interaction. After the social interaction, people were more used to personal and emotional statements by the robot and probably already found explanations for them. After the functional interaction, the protest was the first time the robot revealed something personal and emotional with the participant and, thus, people were not prepared cognitively.”

This could be a big problem if we ever do have to deal with killer robots: they’ll know how to push our buttons. People tend to personify anything that can even remotely be credited with human-like qualities. We call boats, muscle cars, and spaceships “she” and “her.” And people do the same silly thing with Amazon’s Alexa and Apple’s Siri. But, just like Wilson, the volleyball with a bloody handprint for a face from the movie “Cast Away,” they’re not alive.

None of these things are capable of caring if we turn them off, shove them in our pocket, or lose them at sea. But, we’re in trouble if the robots do rise up because we’re obviously easy to manipulate. The reasons given by participants are a bit troubling.

You’d think, perhaps, that people would leave the robot on out of surprise, or perhaps curiosity, and some people listed those reasons. But the bulk of participants who left it on, even if only for a while before eventually turning it off, did so because they felt it was the “will” of the robot to remain on or that it was the compassionate thing to do.

The real concern isn’t killer robots convincing us to leave them turned on so they can use their super lasers to melt our flesh. It’s humans exploiting other humans using our collective ignorance on the matter of AI against us.

Cambridge Analytica, for example, didn’t have to shoot anyone to get them to vote for Trump or Brexit; it just used its AI to exploit our humanity. We should take these studies seriously.
