Google’s DeepMind team last week revealed a speedy new approach to training deep learning networks that combines advanced algorithms and old-school video games.

DeepMind, the team responsible for AlphaGo, appears to believe machines can learn like humans do. Using its own DMLab-30 training set, which is built on id Software’s Quake III game, along with an arcade learning environment running 57 Atari games, the team developed a novel training system called Importance Weighted Actor-Learner Architectures (IMPALA).

With IMPALA, an AI system plays a whole bunch of video games really fast and sends the training information from a series of “actors” to a series of “learners.”

Normally, deep learning networks figure things out like a single gamer traversing a gaming engine. Developers tell the computer what the controller inputs are and it plays the game just like a person with an actual gamepad would.

With IMPALA, however, not only does the system play the game 10 times more efficiently than other methods, but it plays a whole bunch of games at once. It’s like having 30 or more gamers learning how to play Quake with one “borg” brain gaining all the experience.
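To make the actor and learner split a little more concrete, here is a toy sketch in Python. It is not DeepMind’s code: the function names (`actor`, `learner`, `run`), the random numbers standing in for game frames, and the use of threads with a shared queue are all assumptions made purely for illustration. It leaves out the neural network and the importance weighting entirely, and only shows many “gamers” feeding one shared “brain.”

```python
import queue
import random
import threading


def actor(actor_id, num_steps, out_queue):
    """Hypothetical actor: plays a toy 'game' and ships its experience to the learner."""
    rng = random.Random(actor_id)  # per-actor seed so each run is reproducible
    # Each 'frame' here is just (actor id, step number, a random stand-in for a reward).
    trajectory = [(actor_id, step, rng.random()) for step in range(num_steps)]
    out_queue.put(trajectory)


def learner(num_trajectories, in_queue):
    """Hypothetical learner: gathers experience from every actor in one place."""
    frames_seen = 0
    for _ in range(num_trajectories):
        trajectory = in_queue.get()  # blocks until some actor has sent data
        frames_seen += len(trajectory)  # a real learner would update a network here
    return frames_seen


def run(num_actors=30, num_steps=100):
    """Start the actors in parallel and let a single learner consume their output."""
    experience = queue.Queue()
    threads = [
        threading.Thread(target=actor, args=(i, num_steps, experience))
        for i in range(num_actors)
    ]
    for t in threads:
        t.start()
    total_frames = learner(num_actors, experience)
    for t in threads:
        t.join()
    return total_frames


if __name__ == "__main__":
    print(run())  # 30 actors x 100 steps each
```

The point of the design is that adding more actors adds more experience per second without changing the learner, which is roughly the “one borg brain” idea described above.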

Here’s a human testing the DMLab-30 environment:

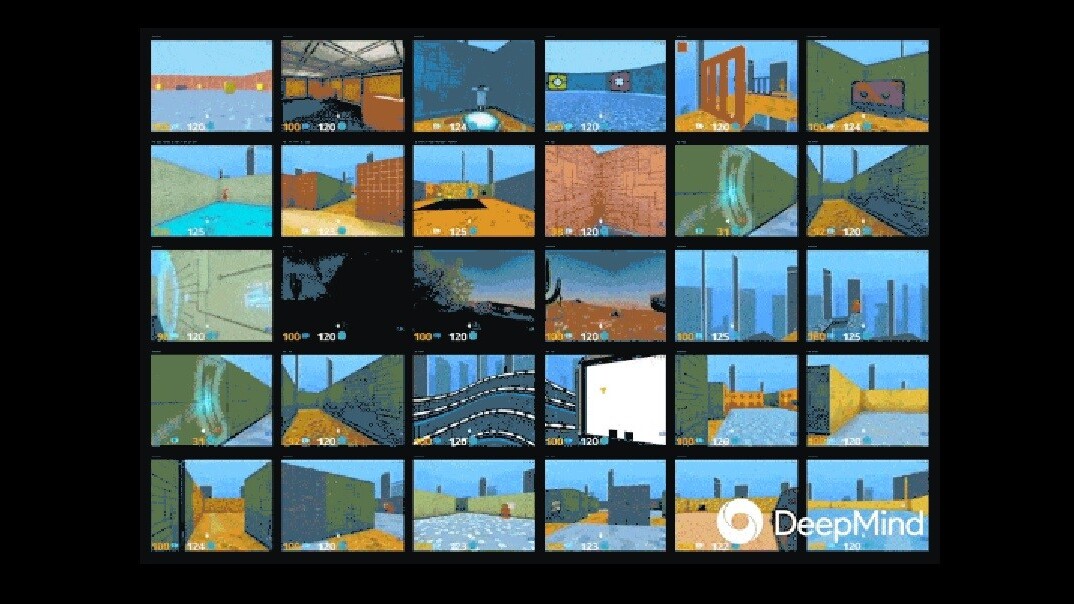

One of the biggest challenges faced by AI developers is the amount of time and processing power it takes to train a neural network. Unlike traditional programming, where a smart person bangs out a bunch of code that eventually turns into a program, autonomous machines require rules they can experiment with in order to try to discover a way to deal with real-world problems.

Since we can’t just build robots and let them loose to figure things out, simulations are used for the bulk of development. For this reason, deep reinforcement learning is crucial for tasks requiring contextual autonomy.

An autonomous car, for example, should be capable of determining on its own if it should speed up or slow down. But it should not be given a choice of whether to drive through the front of a convenience store. It learns what kind of decisions it should be making and how to make them in a simulation environment.

Another problem IMPALA solves is scalability. It’s one thing to tweak algorithms and tune things to shave a few minutes off training time, but at the end of the day the requirements for successfully training an AI aren’t based on hours logged.

In order for current neural networks to achieve success rates high enough to justify their implementation in any autonomous machinery that could potentially harm humans or damage inventories, they have to process billions of ‘frames’ (images) from the training environment.

According to the researchers “given enough CPU-based actors — the IMPALA agent achieves a throughput rate of 250,000 frames/sec or 21 billion frames/day.” This makes DeepMind’s AI the absolute fastest we’re aware of when it comes to these types of tasks.
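For anyone who wants to check the math on that quote, a quick back-of-the-envelope calculation in Python shows how the two figures relate. The only input taken from the researchers is the 250,000 frames/sec rate; the function names and the one-billion-frame example are our own, added for illustration.

```python
FRAMES_PER_SECOND = 250_000      # throughput quoted by the researchers
SECONDS_PER_DAY = 24 * 60 * 60   # 86,400 seconds in a day


def frames_per_day(fps=FRAMES_PER_SECOND):
    """Frames processed in one day at a constant rate of `fps`."""
    return fps * SECONDS_PER_DAY


def days_to_reach(total_frames, fps=FRAMES_PER_SECOND):
    """Days needed to process `total_frames` at a constant rate of `fps`."""
    return total_frames / frames_per_day(fps)


if __name__ == "__main__":
    print(frames_per_day())              # 21600000000
    print(days_to_reach(1_000_000_000))  # roughly 0.046 days, a little over an hour
```

At a sustained 250,000 frames/sec the daily total works out to 21.6 billion frames, which lines up with the roughly 21 billion frames/day in the quote.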

And, perhaps more stunning, according to the IMPALA white paper, the AI performs better than both previous AI systems and humans.

We already knew DeepMind’s AI was better than us at games, but now it’s just showing off.
