Tesla and SpaceX CEO Elon Musk, along with 115 other artificial intelligence and robotics specialists, has signed an open letter to urge the United Nations to recognize the dangers of lethal autonomous weapons and to ban their use internationally.

The letter was released at the International Joint Conference on Artificial Intelligence (IJCAI 2017) in Melbourne over the weekend. And although it sounds like a problem for the distant future, Musk – along with the likes of Mustafa Suleyman, co-founder of Google’s DeepMind, and Jerome Monceaux of Aldebaran Robotics (which designed the Pepper humanoid robot) – is right to be worried about these weapons right now, and so should we be.

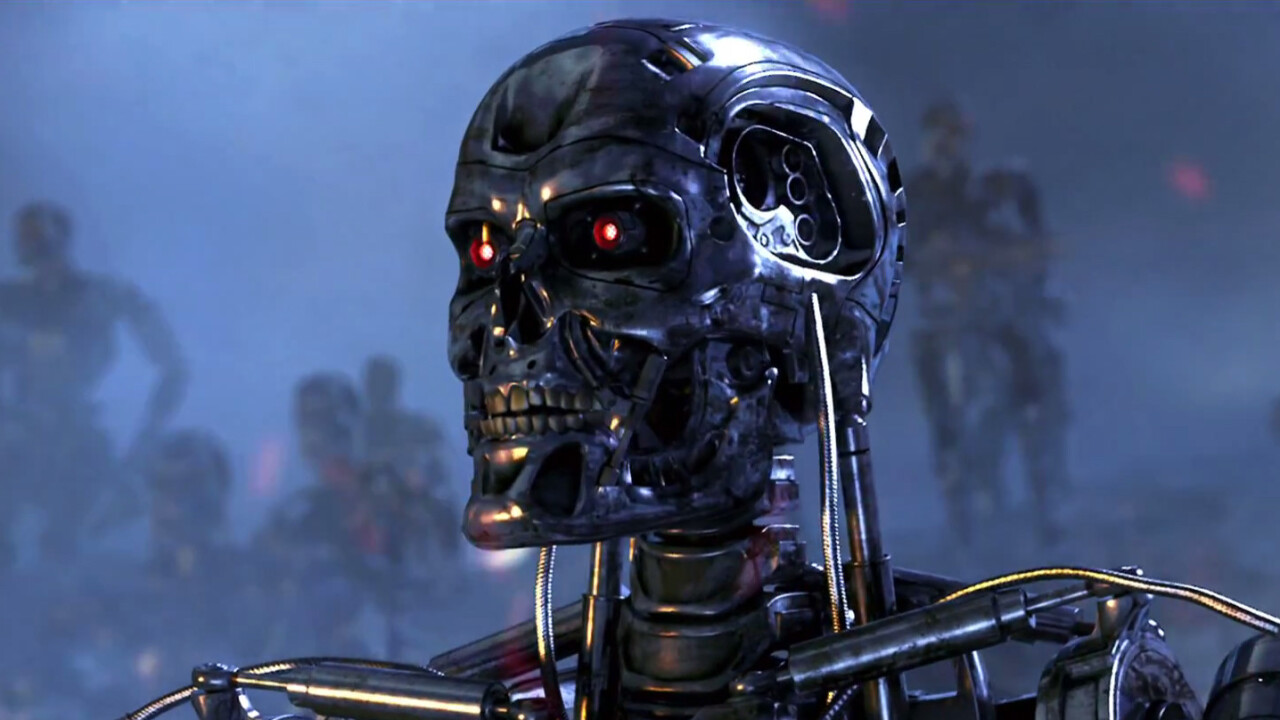

The Guardian noted that according to some experts, the fields of AI and robotics are both advancing so quickly that the reality of a war fought with autonomous weapons and vehicles could be upon us in a matter of years, rather than decades. As such, it’s crucial that we understand just how drastically these technologies could affect how governments think about going to war.

Add to that the dangers of autocrats and terrorists using these weapons against civilians, and you have a recipe for disaster. What’s more, if these devices ever come into existence, there’s a chance they could be hacked or fall into the wrong hands and be misused.

We aren’t too far from this nightmare as it is. There are already numerous weapons, like automatic anti-aircraft guns and drones, that can operate with minimal human oversight; advanced tech will eventually help them to carry out military functions entirely autonomously.

To illustrate why this is a problem, consider the argument the UK government made when it opposed a ban on lethal autonomous weapons in 2015: it said that “international humanitarian law already provides sufficient regulation for this area,” and that all weapons employed by UK armed forces would be “under human oversight and control.”

Enabling a government to deploy unmanned and autonomous forces of destruction could encourage it to send out larger forces than before, as it wouldn’t have to worry about risking more of its citizens’ lives.

Explaining his stance, signatory Yoshua Bengio, founder of Element AI, said:

“I signed the open letter because the use of AI in autonomous weapons hurts my sense of ethics, would be likely to lead to a very dangerous escalation, because it would hurt the further development of AI’s good applications, and because it is a matter that needs to be handled by the international community, similarly to what has been done in the past for some other morally wrong weapons (biological, chemical, nuclear).”

He makes a great point. If governments are allowed to build and stockpile autonomous weapons unchecked, it could lead to the best minds in AI and robotics being drawn into developing killer robots, and away from developing the kinds of solutions that our societies need the most.

With the current threat of nuclear warfare, Musk & Co.’s message couldn’t be more timely. The sooner international leaders begin discussing the future our rapidly developing technologies could drive us into, the better for all of humanity.
