Today at WWDC, Apple brought machine learning to Photos to help you find, discover and share your images more intuitively than ever before. The update borrows some of the best ideas from Google Photos, like re-surfacing memorable events, creating albums based on events, people and places, and using deep learning to make images easier to search.

The new system uses advanced computer vision, powered by deep learning techniques, to bring facial recognition to the iPhone. Now you can find all of the most important people, places and things in your life in automatically sorted albums. It's essentially facial recognition that also works on places and objects.

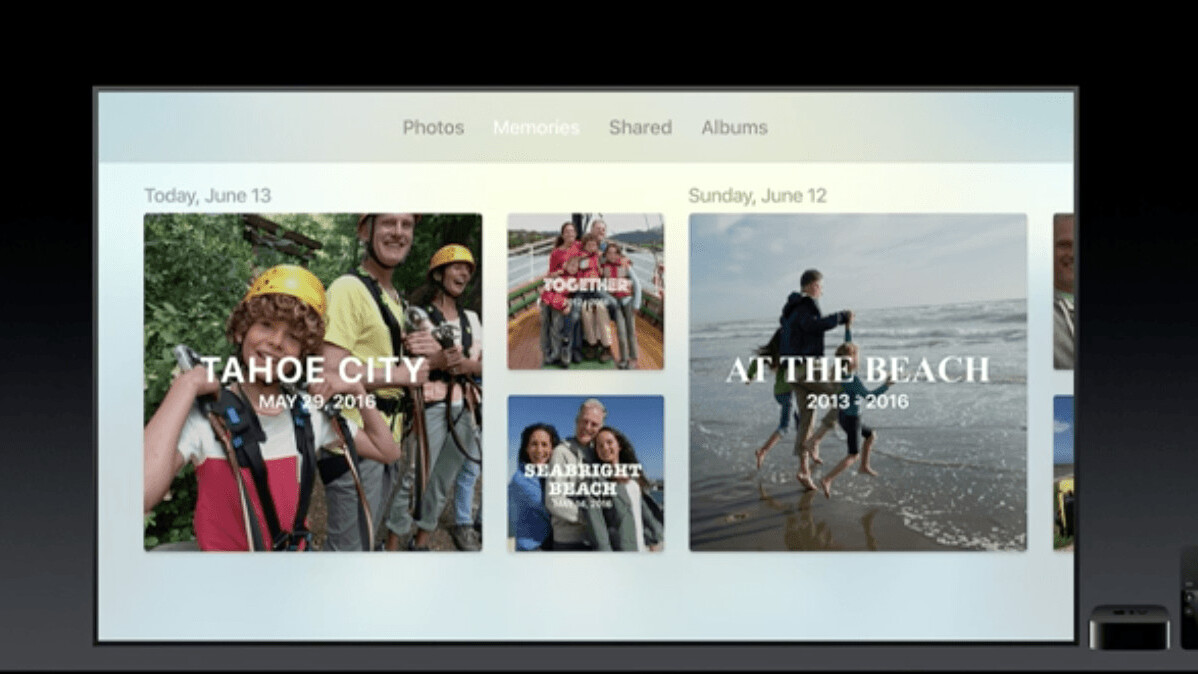

Photos also offers new ways to re-surface forgotten photos, much like Google Photos does. Instead of making you periodically dig through old photos, Apple brings these memories to life with a new feature called… wait for it… 'Memories.' Inside Memories, Apple automatically groups photos and videos from specific trips, events, or your favorite people into albums like 'last weekend' or 'best of 2016.'

Overall, the new features should go a long way toward solving a problem nearly all of us have: taking a lot of photos and having no idea what to do with them. For most of us, these photos sit in Photos, Google Photos, or on Facebook, and we rarely come back to them. Being able to re-surface images with machine learning and facial recognition could be the kick in the nostalgia that most of us need to dust off those old photos and revisit some of our favorite moments.
