President Donald Trump reopened the US government last Friday after his 35-day-long attempt to compel members of Congress to fund a wall along the southern border ended in abject failure.

By all accounts, except perhaps the President’s own, the longest shutdown in modern US politics was a complete catastrophe. According to experts, its lingering effects may never be fully understood, especially when it comes to the gaps and delays caused by a lack of access to data: the people’s data.

The most visible and immediate harm caused by the shutdown was to federal employees, who went more than a month without pay, many of them while still being required to work. Other well-known problems include the poor state of our national parks and flight delays.

The Congressional Budget Office estimates the shutdown cost the nation $11 billion, with $3 billion of that irrecoverable.

But these aren’t the only problems Trump’s shutdown caused. The agriculture industry was disrupted and academics reliant on the people’s data had to put their research on hold.

Data drought

When Trump first cracked Forbes’ “World Billionaires” list in 1989, data was something stored in manila folders and on punch cards. If you wanted access to government data, you submitted a request and waited a few weeks.

Thirty years later, data is most often described as “oil” or “blood” by the people who rely on it to “fuel” their systems. Researchers, small businesses, investors, and federal agencies use government data to perform day-to-day functions and plan long-term strategies.

During a government shutdown, the people have limited access to government data. This, of course, is data that belongs solely to the people and, specifically, has been designated as such by the Open Government Initiative.

A decade ago, President Barack Obama saw the need to give US citizens transparent access to government data. He directed federal agencies to publish datasets of relevant information, which could then be accessed through a central portal: Data.gov.

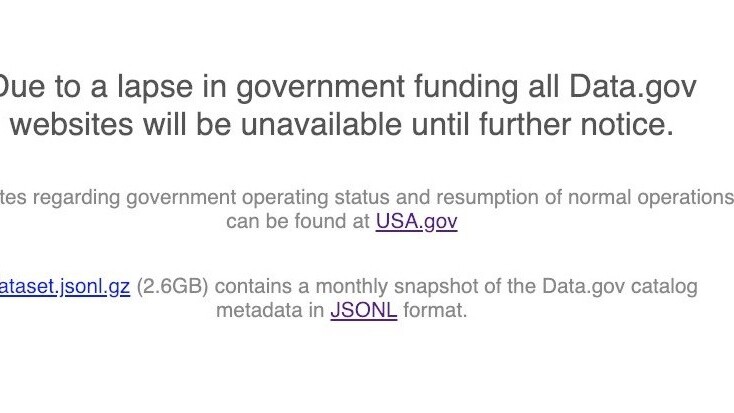

Data.gov and many other portals were either taken offline or received no updates during the government shutdown. But the shutdown didn’t take away all the data. Data.gov, as mentioned, is just a portal: no datasets are actually stored there.

Q: If https://t.co/N8W38QiMnJ is having an issue or does not list the data, should I assume the data does not exist?

A: No, please only be sad after a full audit.

Resources:

– Search Engines

– https://[agency].gov/data

– https://[agency].gov/data.json

– Librarians

— Rebecca Williams (@internetrebecca) January 9, 2019
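The `https://[agency].gov/data.json` tip refers to the machine-readable catalogs that agencies publish under the Project Open Data metadata schema, which Data.gov harvests to build its listings. Because those catalogs point to where the data actually lives, they can help when the portal itself is down. The Python sketch below assumes a catalog shaped like that schema (a top-level `dataset` list whose entries carry a `title` and a `distribution` list with `downloadURL` or `accessURL` fields). The sample catalog, its titles, and its `agency.example` URLs are made up for illustration, and `list_sources` is a hypothetical helper, not part of any official tool.

```python
import json

# Hypothetical sample shaped like a Project Open Data (v1.1) catalog.
# Real catalogs live at https://[agency].gov/data.json; this one is invented.
SAMPLE_CATALOG = """
{
  "conformsTo": "https://project-open-data.cio.gov/v1.1/schema",
  "dataset": [
    {
      "title": "Example Monthly Temperature Summaries",
      "distribution": [
        {"downloadURL": "https://data.agency.example/temps.csv", "mediaType": "text/csv"}
      ]
    },
    {
      "title": "Example Grant Awards",
      "distribution": [
        {"accessURL": "https://data.agency.example/api/grants"}
      ]
    },
    {
      "title": "Example Dataset Without Distributions"
    }
  ]
}
"""


def list_sources(catalog_text):
    """Return (title, url) pairs for every distribution in a data.json catalog.

    Prefers a direct downloadURL and falls back to accessURL. Datasets with
    no distribution entries are reported with url=None so they are not lost.
    """
    catalog = json.loads(catalog_text)
    sources = []
    for dataset in catalog.get("dataset", []):
        title = dataset.get("title", "(untitled)")
        distributions = dataset.get("distribution", [])
        if not distributions:
            sources.append((title, None))
            continue
        for dist in distributions:
            url = dist.get("downloadURL") or dist.get("accessURL")
            sources.append((title, url))
    return sources


if __name__ == "__main__":
    # In practice you would fetch an agency's own data.json instead,
    # which may itself be unavailable during a shutdown.
    for title, url in list_sources(SAMPLE_CATALOG):
        print(f"{title}: {url or 'no distribution listed'}")
```

Falling back from `downloadURL` to `accessURL` reflects the schema's split between direct file links and links to an API or landing page; either tells a researcher where to look next.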

But some databases, such as those run by the National Oceanic and Atmospheric Administration (NOAA), were completely offline. Others, including the USAID Development Data Library (used for healthcare research), remained accessible but weren’t updated.

It’s impossible to know all the ways that limited or no access to government datasets affected the nation. For example, it’s likely that thousands of students across the country weren’t able to complete assignments.

That might not sound like a big deal, but imagine for a moment if public libraries closed during a shutdown. For students working with datasets (which, at the university level, is most of them), losing access to government data is just as problematic.

Taught a 6 hour class in SQL programming today and I recommended https://t.co/QbbJXhlAcQ as a source of practice datasets. The site is closed due to the govt shutdown. Enough already! I might be inconvienced but real people are suffering. TSA, etc…

— Geoffrey Root (@GeoffreyRoot) January 20, 2019

Of course, academia isn’t the only sector affected by a data drought. Researchers and AI developers use government datasets to train machine learning models. These kinds of models are used to predict weather patterns, understand climate change, and forecast trade in numerous industries.

According to Ira Cohen, co-founder and chief data scientist for Anodot, these people could be in for a double whammy if they rely on datasets that are down or not being updated.

“They could be screwed twice,” he told TNW, “once because they might not have access to the datasets they need and again because there’s also a gap in the data after the shutdown ends.”

Mind the gap

Cohen explained that, depending on the particular dataset, some information could be lost forever. If a machine learning model uses data from a NOAA dataset and that data is no longer available, there’s no way to make up data to fill the hole. If there’s no data for December and January, for example, researchers might simply have to skip those months.

TNW talked to several data experts and, without fail, the refrain was that nobody knows what the long-term effects of the data drought will be. Cohen warned that the result could be datasets that remain perpetually incomplete, robbing researchers and data scientists of information that modern AI would otherwise exploit for insights.

At a minimum, the 35-day shutdown delayed countless research projects and forced businesses that work with the people’s datasets to spend staff hours, money, or both finding alternatives.

Unravelling the damage

Part of the problem is that you can’t easily quantify what delays in accessing data cost researchers. Students and university faculty members likely just move on to another assignment or project when datasets become unavailable.

Another issue is that any company that relies on the people’s data is probably not chomping at the bit to let their customers and clients know they’re hamstrung by the White House’s lack of foresight.

Alex Howard, an open government advocate, told FedScoop:

More than 5 years after [the 2013] government shutdown showed that public access to public data would be at risk during a shutdown, it’s infuriating that there is still no backup plan. Shutdowns are a known risk. The federal government should ensure that 21st century civic infrastructure like websites, data archives, and digital services are resilient against political threats as well as natural disasters.

Perhaps the biggest obstacle to determining the effects of cutting off access to public datasets is uncertainty about the future. The datasets that were taken down are slowly coming back online as furloughed federal workers return, and websites that stopped updating during the shutdown are being refreshed again.

This is good news for everyone who was stuck waiting for access. But there are growing signs that another shutdown could begin in a little over two weeks.

21 days goes very quickly. Negotiations with Democrats will start immediately. Will not be easy to make a deal, both parties very dug in. The case for National Security has been greatly enhanced by what has been happening at the Border & through dialogue. We will build the Wall!

— Donald J. Trump (@realDonaldTrump) January 26, 2019

Some experts believe we’re out of the woods. Plenty of pundits argue that the President won’t risk another costly shutdown because, as GOP Senate Majority Leader Mitch McConnell likes to put it, “there is no education in the second kick of a mule.”

Republicans and those who still have faith in the President’s border wall proposal believe the answer lies not in another shutdown, but in the White House declaring a national emergency.

But, as Elizabeth Goitein, co-director of the Liberty and National Security Program at the Brennan Center, wrote in The Atlantic, Trump has no case for declaring a national emergency:

A president using emergency powers to thwart Congress’s will, in a situation where Congress has had ample time to express it, is like a doctor relying on an advance directive to deny life-saving treatment to a patient who is conscious and clearly asking to be saved.

Her point is that there’s little chance the President can successfully declare a national emergency to build his border wall. It’s hard to imagine a federal judge allowing the President to circumvent Congress in a situation that, by the very fact that he gave the government 21 days to consider his offer, is not an emergency.

None of this is any consolation to the untold number of people affected by the sudden disappearance of datasets or data portals they rely on.

With Trump’s mercurial temperament and irrational negotiating tactics holding the nation hostage month by month, the shutdown hasn’t really ended yet, at least not for the citizens, universities, and businesses that rely on the people’s data.
