The debate over how far artificial intelligence can go revolves around two questions: what actually constitutes human intelligence, and can a machine function similarly enough to a human brain?

While not shooting for AGI, UK-based Stanhope AI is building its models according to neuroscience principles, taking inspiration from the predictive, hierarchical machinery that makes up our brains.

The result is an AI that doesn’t need training. It basically just needs to be told that it exists and be provided with a prior system of beliefs. Then it takes off (literally) into the real world and learns from its surroundings using sensors. This is not unlike how you see, hear, and feel things that expand your knowledge, causing you to update (or reinforce) your worldview.

A spinout from University College London, the startup just raised £2.3mn for its neuroscience-inspired “agentic AI.” We caught up with co-founder and CEO, professor of computational neuroscience Rosalyn Moran, to learn more about the startup’s tech and vision for the future.

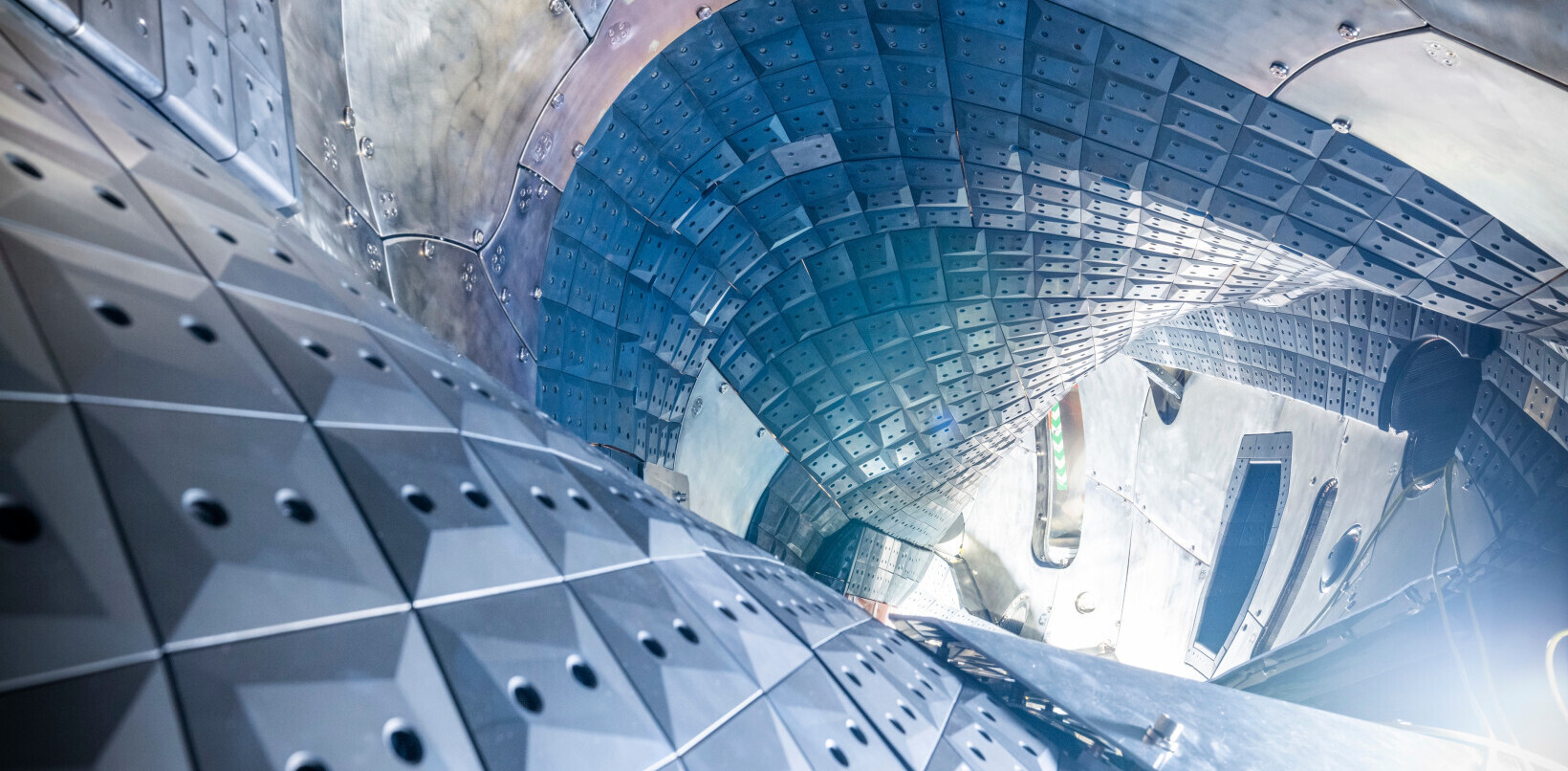

The layered ‘brain’ of Stanhope’s AI

Stanhope AI’s method builds on a theory that the brain holds a model of the world and is continuously trying to gather evidence to validate and update that model.

“The AI has a ‘brain’ a few levels deep, and at the very bottom of the brain are its sensors,” Moran explains. The sensors, which for you and me would be our eyes, in this case are cameras and LiDAR.

“And then those feed into a predictive layer that will try and say, ‘Okay, I saw a wall over there. Now I don’t need to keep looking’. And it’s built into a more interesting cognitive prediction at the higher levels. So it’s very much like a hierarchical brain.”

This is the same kind of prediction that our human brains engage in to make sense of the world and save energy (the brain is the most energy-demanding organ we have). It follows a neuroscience principle called “active inference,” part of the Free Energy Principle developed by Moran’s co-founder, professor of theoretical neurobiology Karl Friston.
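
For readers who want the math, the quantity at the heart of this framework is usually written as a variational free energy. The expression below is the standard textbook form, not a formula Stanhope AI has shared. The symbols are assumptions introduced here for illustration: $o$ is a sensory observation, $s$ a hidden state of the world, $p$ the agent's generative model, and $q$ its current beliefs.

$$
F = \mathbb{E}_{q(s)}\big[\ln q(s) - \ln p(o, s)\big] = D_{\mathrm{KL}}\big[q(s)\,\|\,p(s \mid o)\big] - \ln p(o)
$$

Because the KL term is never negative, $F$ is an upper bound on $-\ln p(o)$, which measures how surprising the observation is. Lowering $F$ therefore both pulls beliefs toward the true posterior and keeps surprise in check.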

“I don’t need to check every pixel on the wall to make sure it’s a wall — I can fill in a bit. So that’s why we think the human brain is so efficient,” Moran adds.

Essentially, the way you experience the world is a result of how your brain predicts you will see it, in the service of energy efficiency. But credit to our brains, they then refine those predictions based on incoming sensory data. Stanhope AI’s model does the same, using the visual input from the world around it. It then makes autonomous decisions based on the new, real-time data.
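
To make the predict-then-refine loop concrete, here is a minimal sketch in Python. It is a toy Bayesian belief update, not Stanhope AI's implementation. The two hidden states (`wall`, `open`), the sensor readings (`near`, `far`), and all the probabilities are hypothetical values chosen for illustration.

```python
import math


def update_belief(prior, likelihood, observation):
    """Refine a belief over hidden states with one sensor reading (Bayes' rule).

    Returns the updated belief and the "surprise" of the reading,
    i.e. -ln P(observation) under the belief held before the update.
    """
    unnormalised = {s: prior[s] * likelihood[s][observation] for s in prior}
    evidence = sum(unnormalised.values())  # P(observation) under the prior
    posterior = {s: p / evidence for s, p in unnormalised.items()}
    return posterior, -math.log(evidence)


# Hypothetical prior: the agent starts with no idea what is ahead.
belief = {"wall": 0.5, "open": 0.5}

# Hypothetical sensor model: P(reading | state).
likelihood = {
    "wall": {"near": 0.9, "far": 0.1},
    "open": {"near": 0.2, "far": 0.8},
}

surprises = []
for reading in ["near", "near", "far", "near"]:
    belief, surprise = update_belief(belief, likelihood, reading)
    surprises.append(surprise)
    print(f"{reading:>4}: P(wall) = {belief['wall']:.3f}, surprise = {surprise:.3f}")
```

Readings that match the current prediction barely change the belief, while the one that contradicts it (the `far` reading, once the agent already expects a wall) produces the largest surprise and the biggest correction. That mismatch between prediction and data is the signal the article describes.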

No massive training data sets required

This approach to AI differs significantly from traditional machine learning methods such as those used to train LLMs, which can only operate on the data provided by those who train them.

“We don’t train [our model],” Moran says. “The heavy lifting is done in establishing the generative model, and making sure that it is correct and has consistent priors with where you might want it to operate.”

This is all theoretically fascinating, but for a startup to spin out of the lab, there need to be real-world applications. Stanhope AI says that its AI can sit on autonomous machines, such as delivery drones and robots. The tech is currently in testing on drones with partners including Germany’s Federal Agency for Disruptive Innovation and the Royal Navy.

The greatest technological challenge the startup has surmounted thus far was scaling from smaller models working in lab settings to larger ones that can learn to navigate a much more expansive landscape.

“We had to use three mathematical routes to do free energy calculations that were much more efficient, so that we could build much larger worlds for our drones,” Moran states. She adds that finding the right hardware, which the company could access and control without having to rely on third parties, presented another significant engineering hurdle.

New wave of agentic AI

Stanhope AI’s “Active Inference Models” are, the company says, truly autonomous and can rebuild and refine their predictions. This is part of a new wave of “agentic AI” that is, just like the human brain, always trying to “guess what will happen next” by continuously learning from discrepancies between predictions and real-time data. There is no need for extensive (and expensive) prior training, and the approach also lowers the risk of AI “hallucinations.”

Notably, Stanhope’s AI is a white box model, with the “explainability built into its architecture.” As Moran elaborates, “We make sure that it’s working absolutely perfectly in simulation. If the AI, or the drone, does something strange then we really drill down on what it believed there, why it did what it did. So it’s a very different way of developing AI.” The idea, she says, is to transform the capabilities of AI and robotics and make them more impactful in real-world scenarios.

The UCL Technology Fund led Stanhope AI’s £2.3mn funding round. Creator Fund, MMC Ventures, Moonfire Ventures, and Rockmount Capital also participated, along with several industry investors.

Stanhope AI was founded in 2021 by Professor Rosalyn Moran, Director Professor Karl Friston and Technical Advisor Dr Biswa Sengupta.
