The Australian Federal Police recently announced plans to use DNA samples collected at crime scenes to make predictions about potential suspects.

This technology, called forensic “DNA phenotyping”, can reveal a surprising and growing amount of highly personal information from the traces of DNA that we all leave behind, everywhere we go – including information about our gender, ancestry, and appearance.

Queensland police have already used versions of this approach to identify a suspect and identify remains. Forensic services in Queensland and New South Wales have also investigated the use of predictive DNA.

This technology can reveal much more about a suspect than previous DNA forensics methods. But how does it work? What are the ethical issues? And what approaches are other countries around the world taking?

How does it work?

The AFP plans to implement forensic DNA phenotyping based on an underlying technology called “massively parallel sequencing”.

Our genetic information is encoded in our DNA as long strings of four different base molecules, and sequencing is the process of “reading” the sequence of these bases.
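To make the idea of DNA as a string of four bases concrete, here is a minimal Python sketch. The fragment is made up for illustration, and the `read_bases` helper is a hypothetical name rather than part of any real sequencing software; actual sequencers work on chemistry and signal data, not typed-in text.

```python
from collections import Counter

# The four DNA bases: adenine, cytosine, guanine, thymine.
BASES = {"A", "C", "G", "T"}


def read_bases(fragment: str) -> Counter:
    """Tally each base in a fragment, rejecting anything that is not A, C, G or T."""
    fragment = fragment.upper()
    unknown = set(fragment) - BASES
    if unknown:
        raise ValueError(f"unexpected symbols: {sorted(unknown)}")
    return Counter(fragment)


# A made-up 14-base fragment, for illustration only.
fragment = "GATTACAGATTACA"
counts = read_bases(fragment)
print(counts["A"], counts["C"], counts["G"], counts["T"])  # 6 2 2 4
```

A real run reads trillions of such letters rather than fourteen, but the underlying data is the same kind of sequence.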

Older DNA sequencing machines could only read one bit of DNA at a time, but current “massively parallel” machines can read more than six trillion DNA bases in a single run. This creates new possibilities for DNA analysis.

DNA forensics used to rely on a system that matched samples to ones in a criminal DNA database and did not reveal much beyond identity. However, predictive DNA forensics can reveal things like physical appearance, gender, and ancestry – regardless of whether people are in a database or not.

This makes it useful in missing persons cases and the investigation of unidentified remains. This method can also be used in criminal cases, mostly to exclude persons of interest.

The AFP plans to predict gender, “biogeographical ancestry”, eye color, and, in coming months, hair color. Over the next decade, they aim to include traits such as age, body mass index, and height, and even finer predictions for facial metrics such as distance between the eyes, eye, nose and ear shape, lip fullness, and cheek structure.

Are there any issues or ethical concerns?

DNA can reveal highly sensitive information about us. Beyond ancestry and externally visible characteristics, we can predict many other things including aspects of both physical and mental health.

It will be important to set clear boundaries around what can and can’t be predicted in these tests – and when and how they will be used. Despite some progress toward a privacy impact assessment, Australian forensic legislation does not currently provide any form of comprehensive regulation of forensic DNA phenotyping.

The highly sensitive nature of DNA data and the difficulty in ever making it anonymous create significant privacy concerns.

According to a 2020 government survey about public attitudes to privacy, most Australians are uncomfortable with the idea of their DNA data being collected.

Using DNA for forensics may also reduce public trust in the use of genomics for medical and other purposes.

The AFP’s planned tests include biogeographical ancestry prediction. Even when not explicitly tested, DNA data is tightly linked to our ancestry.

One of the biggest risks with any DNA data is exacerbating or creating racial biases. This is especially the case in law enforcement, where specific groups of people may be targeted or stigmatized based on pre-existing biases.

In Australia, Indigenous legal experts report that not enough is being done to fully eradicate racism and unconscious bias within the police. Concerns have been raised about other types of potential institutional racial profiling. A recent analysis by the ANU also indicated that 3 in 4 people held an implicit negative or unconscious bias against Indigenous Australians.

Careful consideration, consultation, and clear regulatory safeguards need to be in place to ensure these methods are only used to exclude persons of interest rather than include or target specific groups.

DNA data also has inherent risks around misinterpretation. People put a lot of trust in DNA evidence, even though it often gives probabilistic findings which can be difficult to interpret.
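One reason probabilistic findings are hard to interpret is that the same test result can mean very different things depending on how common a trait is. The sketch below applies Bayes' rule to show this. All of the numbers are hypothetical and chosen only for illustration; they are not accuracy figures for the AFP's tests or any real phenotyping system, and `posterior_probability` is a name invented for this example.

```python
def posterior_probability(prior: float,
                          true_positive_rate: float,
                          false_positive_rate: float) -> float:
    """Bayes' rule: probability a person has a trait, given the test predicts it."""
    evidence = (true_positive_rate * prior
                + false_positive_rate * (1 - prior))
    return true_positive_rate * prior / evidence


# Hypothetical test: flags the trait in 90% of people who have it,
# and wrongly flags it in 10% of people who do not.
common = posterior_probability(prior=0.50, true_positive_rate=0.90,
                               false_positive_rate=0.10)
rare = posterior_probability(prior=0.05, true_positive_rate=0.90,
                             false_positive_rate=0.10)

print(f"Trait found in 50% of the population: {common:.2f}")  # 0.90
print(f"Trait found in 5% of the population:  {rare:.2f}")    # 0.32
```

Under these assumed numbers, a "90% accurate" prediction of a rare trait is right only about a third of the time, which is why presenting results as probabilities, with their uncertainty, matters.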

What are other countries doing?

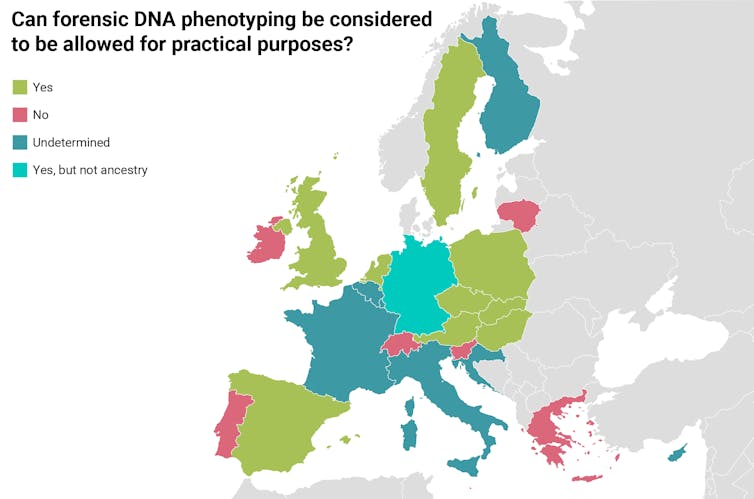

Predictive DNA forensics is a relatively new field, and countries across Europe have taken different approaches regarding how and when it should be used. A 2019 study across 24 European countries found ten had allowed the use of this technology for practical purposes, seven had not allowed it, and seven more had not yet made a clear determination on its use.

Germany allows the prediction of externally visible characteristics (including skin color) but has decided biogeographical ancestry is simply too risky to be used.

The one exception to this is the state of Bavaria, where ancestry can be used to avert imminent danger, but not to investigate crimes that have already occurred.

A UK advisory panel made four recommendations last year. These include clearly explaining how the data is used, presenting ancestral and phenotypic data as probabilities so uncertainty can be evaluated, and clearly explaining how judgments would be made about when to use the technology and who would make the decision.

The VISAGE consortium of academics, police, and justice institutions, from eight European countries, also produced a report of recommendations and concerns in 2020.

They urge careful consideration of the circumstances where DNA phenotyping should be used, and the definition of a “serious crime”. They also highlight the importance of a governing body with responsibility for deciding when and how the technology should be used.

Safeguarding public trust

The AFP press release says the agency is mindful of maintaining public trust and has implemented privacy processes. Transparency and proportionate use will be crucial to keep the public on board as this technology is rolled out.

This is a rapidly evolving field and Australia needs to develop a clear and coherent policy that is able to keep up with the pace of technological developments – and considers community concerns.

Article by Caitlin Curtis, Research fellow, The University of Queensland and James Hereward, Research fellow, The University of Queensland

This article is republished from The Conversation under a Creative Commons license. Read the original article.
