Watch out IBM Watson, Google has its own kickass ‘Show and Tell’ AI and it’s getting pretty damn good at depicting what it sees in photos – and now everyone can use it.

Today, the tech giant announced it’s open-sourcing its automatic image-captioning algorithm as a model in TensorFlow for everyone to use.

This means anyone can now train the algorithm to recognize various objects in photos with up to 93.9 percent accuracy, a significant improvement over the 89.6 percent that the company touted when the project initially launched back in 2014.

Training ‘Show and Tell’ requires feeding it hundreds of thousands of human-captioned images that the machine then uses and re-uses when “presented with scenes similar to what it’s seen before.”

Google’s impressive AI also uses a new vision component that allows for faster training and more detailed captions, as it has gotten much better at telling objects apart. The researchers have also fine-tuned the system to perceive colors.

An image classification model will tell you that a dog, grass and a frisbee are in the image, but a natural description should also tell you the color of the grass and how the dog relates to the frisbee.

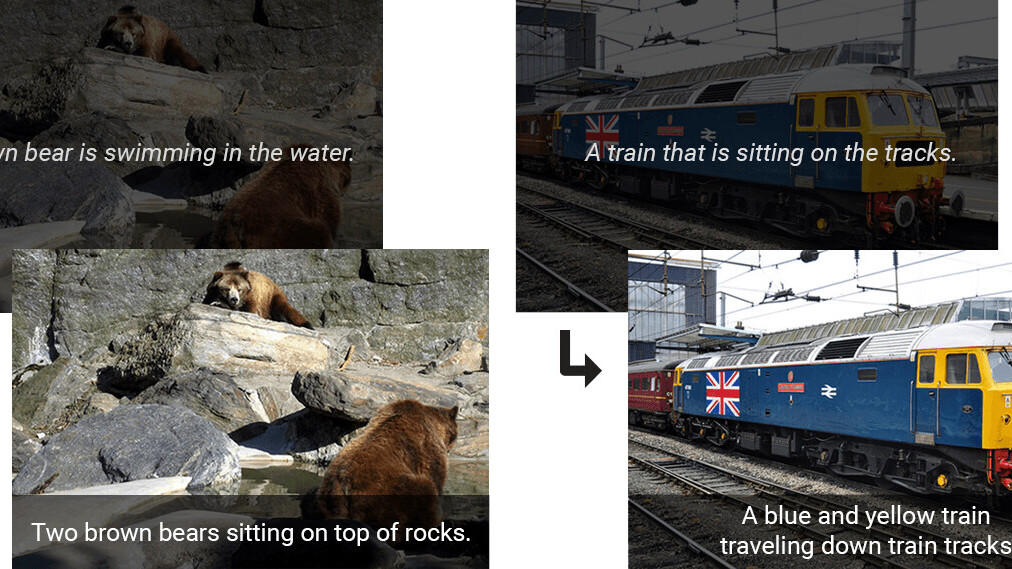

Here’s an example of an image that ‘Show and Tell’ was trained to caption:

The researchers claim that once the algorithm has processed a large enough sample of images and captions, it will be capable of accurately generating descriptions for scenes it has never seen before.

There’s a catch, though: You’ll have to train the AI yourself if you want to use it, and you’ll need a mighty GPU for that. So you might have to wait a little longer until someone releases a trained version that you can try out.

Head to Google’s official announcement for more details.

via Engadget
