Google has created a single API that will let Android apps be more contextual and location aware.

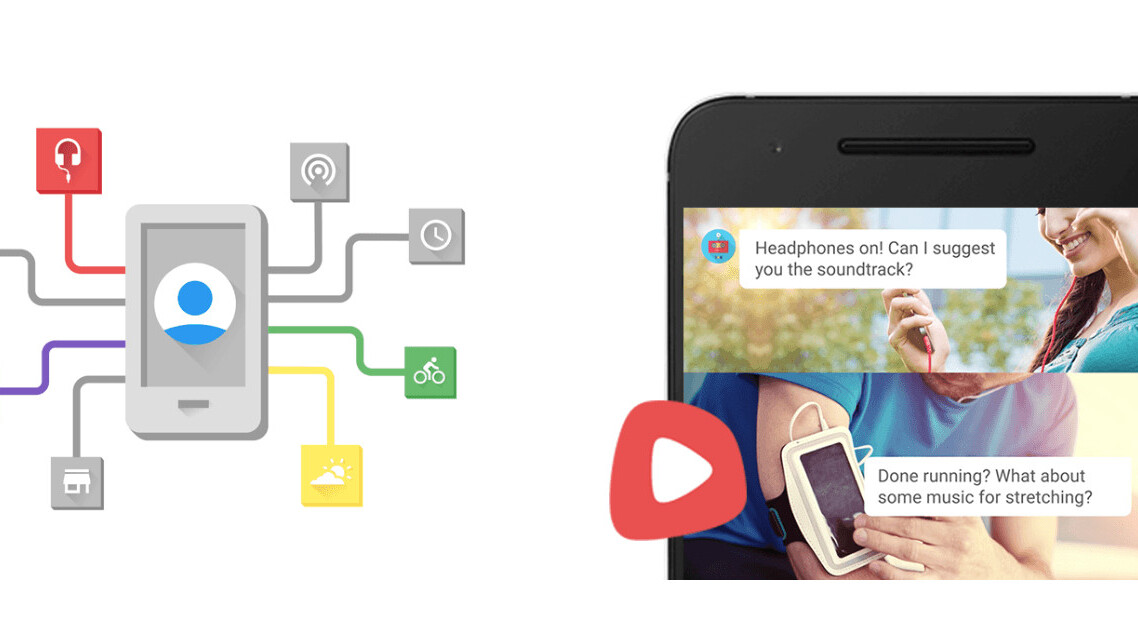

The Google Awareness API uses seven location and context signals (time, location, places, beacons, headphones, activity and weather) from your phone to help apps create custom behaviors. It also takes over much of the behind-the-scenes work of collecting those signals, which helps conserve battery life, and lets developers create custom geofenced locations.

It’s a bit like Apple’s predictive app feature, which does things like suggest you open the Starbucks app when you’re near a Starbucks — but a bit more robust.

One API is really two

Within the Awareness API are two separate APIs that can be accessed independently: Fence and Snapshot.

The Fence API lets developers set up simple geofences so that app suggestions can be contextual. For instance, a developer who has created a dedicated workout music app could parse JSON location data to suggest you open your favorite cardio playlist when you’re near a gym.
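To make the gym example concrete, here is a minimal sketch of the underlying geofence idea: check whether the user is within a set radius of a point. It is plain Java and does not call the Awareness API itself. The class name, method names, coordinates and radius are all hypothetical and chosen only for illustration.

```java
// Illustrative sketch only: a circular geofence check in plain Java.
// This does NOT use the Awareness API; every name here is hypothetical.
final class GymFenceSketch {
    // Mean Earth radius in meters, used by the haversine formula.
    static final double EARTH_RADIUS_M = 6_371_000.0;

    // Great-circle distance in meters between two latitude/longitude points.
    static double distanceMeters(double lat1, double lng1, double lat2, double lng2) {
        double dLat = Math.toRadians(lat2 - lat1);
        double dLng = Math.toRadians(lng2 - lng1);
        double a = Math.sin(dLat / 2) * Math.sin(dLat / 2)
                + Math.cos(Math.toRadians(lat1)) * Math.cos(Math.toRadians(lat2))
                * Math.sin(dLng / 2) * Math.sin(dLng / 2);
        return 2 * EARTH_RADIUS_M * Math.asin(Math.sqrt(a));
    }

    // True when the user is within radiusMeters of the fence center.
    static boolean insideFence(double userLat, double userLng,
                               double fenceLat, double fenceLng,
                               double radiusMeters) {
        return distanceMeters(userLat, userLng, fenceLat, fenceLng) <= radiusMeters;
    }

    public static void main(String[] args) {
        // Hypothetical gym location and fence radius.
        double gymLat = 40.7410, gymLng = -73.9897, radiusMeters = 150.0;
        // Hypothetical current position of the user.
        double userLat = 40.7415, userLng = -73.9900;

        if (insideFence(userLat, userLng, gymLat, gymLng, radiusMeters)) {
            System.out.println("Near the gym: suggest the cardio playlist");
        } else {
            System.out.println("Not near the gym: no suggestion");
        }
    }
}
```

In a real app the platform would presumably do this monitoring and notify the app when the fence is entered, rather than the app polling its own position.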

Separately, the Snapshot API does the heavy lifting described above, gathering the current signal information from your device.
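One way to picture a snapshot is as a point-in-time bundle of signals that an app reads once and then acts on. The sketch below models that in plain Java with three of the seven signals. It is not the Snapshot API: the `ContextSnapshot` type, its fields and the suggestion rule are assumptions made up for this example.

```java
import java.util.Optional;

// Illustrative sketch only: acting on a point-in-time set of signals.
// Not the Snapshot API; all types and names here are hypothetical.
final class SnapshotSketch {
    // Suggest the cardio playlist only when every signal lines up.
    static Optional<String> suggest(ContextSnapshot snapshot) {
        boolean workingOut = "RUNNING".equals(snapshot.activity);
        if (snapshot.headphonesPluggedIn && workingOut && snapshot.nearGym) {
            return Optional.of("Open cardio playlist");
        }
        return Optional.empty();
    }

    public static void main(String[] args) {
        ContextSnapshot atTheGym = new ContextSnapshot(true, "RUNNING", true);
        ContextSnapshot atTheDesk = new ContextSnapshot(true, "STILL", false);
        System.out.println(suggest(atTheGym).orElse("No suggestion"));
        System.out.println(suggest(atTheDesk).orElse("No suggestion"));
    }
}

// Immutable value object holding a subset of the signals the article lists.
final class ContextSnapshot {
    final boolean headphonesPluggedIn; // headphones signal
    final String activity;             // activity signal, e.g. "RUNNING" or "STILL"
    final boolean nearGym;             // derived from the location or places signal

    ContextSnapshot(boolean headphonesPluggedIn, String activity, boolean nearGym) {
        this.headphonesPluggedIn = headphonesPluggedIn;
        this.activity = activity;
        this.nearGym = nearGym;
    }
}
```

Keeping the snapshot immutable means the rule always evaluates one consistent view of the device's context, even if the signals change a moment later.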

The Awareness API isn’t quite ready for the masses, but Google is taking applications for early access.
