Leading into Google I/O, one session caught everyone’s attention. Google ATAP — the company’s skunkworks division tasked with creating cool new things we’ll all actually use — teased their session with talk of a new wearable that would “literally” blow our socks off.

Project Soli is that wearable, but it’s not the wearable you might think it is. It’s not a watch; it’s you.

Google ATAP knows your hand is the best tool you have for interacting with devices, but not everything is a device. Project Soli wants to make your hands and fingers the only user interface you’ll ever need.

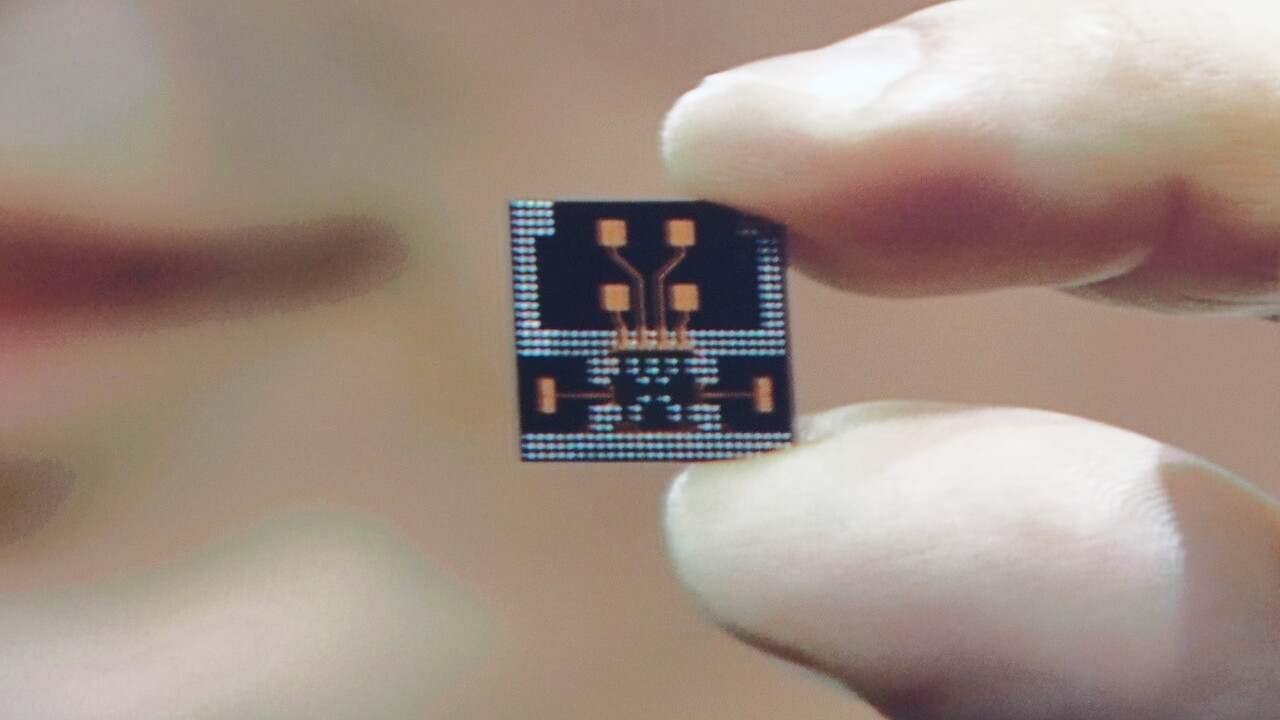

To make that happen, Project Soli relies on a radar small enough to fit into a wearable like a smartwatch. The radar tracks your movements in real time, because each movement you make alters the signal reflected back to it.

Even at rest, the hand moves slightly, which shows up as a baseline response on the radar. Moving the hand away from the radar, or side to side in relation to it, changes the signal and its amplitude. Making a fist or crossing fingers also changes the signal.
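To picture the idea of a baseline, here is a minimal Python sketch. It is not Project Soli's actual processing, which ATAP has not detailed here. It assumes the radar response can be reduced to a single amplitude reading per sample. The readings, the function names `fit_baseline` and `detect_movement`, and the threshold of three standard deviations are all hypothetical.

```python
from statistics import mean, stdev


def fit_baseline(rest_samples):
    """Summarize resting-hand readings as (mean, standard deviation)."""
    return mean(rest_samples), stdev(rest_samples)


def detect_movement(samples, baseline, threshold=3.0):
    """Return the indices of samples that stray from the baseline.

    A sample counts as movement when it differs from the resting mean
    by more than `threshold` standard deviations (an assumed cutoff).
    """
    center, spread = baseline
    return [
        i for i, value in enumerate(samples)
        if abs(value - center) > threshold * spread
    ]


# Hypothetical amplitude readings taken while the hand is at rest.
# The small wobble stands in for the slight motion of a resting hand.
rest = [1.00, 1.02, 0.98, 1.01, 0.99, 1.00, 1.03, 0.97]
baseline = fit_baseline(rest)

# Hypothetical live readings: two spikes and one dip in amplitude.
stream = [1.01, 0.99, 1.60, 1.75, 1.02, 0.40]
moved = detect_movement(stream, baseline)
print(moved)
```

Telling a fist from crossed fingers would take far more than a threshold, which is where the machine learning comes in.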

To make the signal meaningful to an app or service, ATAP will offer APIs that tap into Project Soli’s machine learning.

It’s still early days for Project Soli, but it’s got the crowd here at Google I/O excited. Rather than go hands-free, Project Soli makes your hands the UI — which may already be cooler than voice control ever was.

